by Mark | May 2, 2023 | Azure, Azure Blobs, Azure Disks, Azure Files, Azure Queues, Azure Tables, Azure VM Deployment, Cloud Computing

Introduction to Azure Subscriptions

Azure Subscriptions are a key component of Microsoft Azure’s cloud platform, as they form the foundation for managing and organizing resources in the Azure environment. In essence, an Azure Subscription is a logical container for resources that are deployed within an Azure account. Each subscription acts as both a billing and access control boundary, ensuring that resources are accurately accounted for and that users have the appropriate permissions to interact with them. This article will delve into the different types of Azure Subscriptions, their benefits, and how they fit into the broader Azure hierarchy. Additionally, we will explore best practices for managing multiple subscriptions to optimize cloud operations and maximize the return on your Azure investment.

Types of Azure Subscriptions

There are several types of Azure Subscriptions available, catering to the diverse needs of individuals, small businesses, and large enterprises. Let’s explore some of the most common subscription types:

Free Trial

The Free Trial subscription is designed for users who want to explore and test Azure services before committing to a paid plan. It offers a limited amount of resources and a $200 credit to use within the first 30 days.

Pay-as-you-go

This subscription model is designed for individuals or organizations that prefer to pay for resources as they consume them. It offers flexibility in terms of resource allocation and billing, allowing users to scale up or down based on their needs without any long-term commitment. Learn more about Azure’s pay-as-you-go pricing.

Enterprise Agreement

Enterprise Agreements are suitable for large organizations with extensive cloud requirements. They offer volume discounts, flexible payment options, and an extended range of support and management features. EA customers also benefit from a dedicated account team and additional resources to help optimize their cloud usage. To learn more, visit Microsoft’s Enterprise Agreement page.

Cloud Solution Provider (CSP)

The CSP program enables Microsoft partners to resell Azure services to their customers. This subscription type is ideal for small and medium-sized businesses looking to leverage the expertise of a Microsoft partner to manage their cloud infrastructure. Learn more about the Microsoft Customer Agreement.

Azure Subscription Benefits

Azure subscriptions provide a number of benefits to users who want to use Microsoft’s cloud computing platform. Some of the key benefits of Azure subscriptions include:

Access to a wide range of services: Azure offers a comprehensive range of services that enable users to build, deploy, and manage applications and infrastructure on the cloud. With an Azure subscription, users can access these services and choose the ones that best meet their needs.

Scalability: Azure offers scalable infrastructure that allows users to quickly and easily scale up or down their resources as needed. This can help businesses and organizations to save money by only paying for the resources they need at any given time.

Cost-effective pricing: Azure offers a range of pricing options that can help users to save money on their cloud computing costs. For example, users can pay only for the resources they use, or they can commit to reserved capacity or a savings plan in exchange for lower, more predictable costs.

Security: Azure is designed with security in mind and offers a range of tools and features to help users secure their applications and data on the cloud. This includes features such as identity and access management, encryption, and threat detection.

Integration with other Microsoft services: Azure integrates seamlessly with other Microsoft services, such as Office 365 and Dynamics 365. This can help users to streamline their workflows and improve productivity.

Support: Azure offers a range of support options, including community support, technical support, and customer support. This can help users to get the help they need when they need it, whether they are experienced developers or new to cloud computing.

In addition to the benefits mentioned above, Azure subscriptions also offer several features that can help users with resource organization, access control, billing management, and policy enforcement. Here is a brief overview of these features:

Resource Organization: With Azure subscriptions, users can organize their cloud resources using groups, tags, and other metadata. This makes it easy to manage and monitor resources across multiple subscriptions, regions, and departments.

Access Control: Azure subscriptions provide robust access control features that allow users to control who can access their resources and what they can do with them. This includes role-based access control (RBAC), which enables users to assign roles to users or groups and limit their permissions accordingly.

Billing Management: Azure subscriptions offer a range of billing and cost management tools that enable users to track their cloud spending and optimize their costs. This includes features such as cost analysis, budget alerts, and usage reports.

Policy Enforcement: Azure subscriptions enable users to enforce policies that govern resource usage and compliance. This includes Azure Policy, which allows users to define and enforce policies across their cloud environment, and Microsoft Defender for Cloud (formerly Azure Security Center), which provides security recommendations and alerts based on best practices and compliance requirements.

Overall, Azure subscriptions provide a powerful platform for building and managing cloud applications and infrastructure. With their wide range of services, scalability, cost-effectiveness, security, and support, Azure subscriptions can help users achieve their cloud computing goals efficiently.

Subscription Limitations and Quotas

Azure Subscriptions have certain limitations and quotas on the number of resources and services that can be used. These limits are in place to prevent abuse and to ensure fair usage across all users. However, if your organization requires higher limits, you can request an increase through the Azure portal.

Subscription Cost Management

Effectively managing costs in Azure is essential to avoid unexpected charges and to optimize resource usage. Here are some tools and strategies to help you manage costs:

Azure Cost Management Tools

Azure Cost Management Tools allow you to monitor, analyze, and optimize your Azure spending. These tools provide insights into your resource usage, helping you identify areas for cost savings and optimization.

Budgets and Alerts

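Creating budgets and setting up alerts can help you stay on top of your Azure spending. Budgets in Azure Cost Management let you define a spending threshold for a scope such as a subscription or resource group, and budget alerts notify you when you’re nearing or exceeding that threshold. A budget does not stop resources from running or cap charges by itself; it only reports against the threshold you set.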
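The threshold logic behind budget alerts can be sketched in a few lines of Python. This is a minimal illustration rather than the Azure Cost Management API: the function name `triggered_thresholds` and the 50/80/100 percent thresholds are assumptions chosen for the example.

```python
def triggered_thresholds(spend, budget, thresholds=(50, 80, 100)):
    """Return the alert thresholds (percent of budget) that current spend has reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    percent_used = spend / budget * 100
    # Keep every threshold the current spend has met or passed.
    return [t for t in thresholds if percent_used >= t]
```

For example, with a budget of 1,000 and a current spend of 850, the 50 and 80 percent thresholds have been reached but the 100 percent threshold has not.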

Azure Subscription Limits

Resource Limits: Azure subscriptions have limits on the number of resources that users can deploy. This includes limits on the number of virtual machines, storage accounts, and other resources that can be created within a subscription. These limits can vary depending on the subscription tier and the region where the resources are deployed.

Scale Limits: While Azure is designed to be highly scalable, there are still limits on the amount of scaling that can be done for certain resources. For example, there are limits on the number of virtual machines that can be added to a virtual machine scale set or the number of instances that can be added to an Azure Kubernetes Service (AKS) cluster.

Performance Limits: Azure subscriptions have limits on the amount of performance that can be achieved for certain resources. For example, there are limits on the amount of IOPS (Input/Output Operations Per Second) that can be achieved for a storage account or the maximum throughput that can be achieved for a virtual network gateway.

API Limits: Azure subscriptions have limits on the number of API calls that can be made to certain services. These limits are designed to prevent overloading the services and to ensure fair usage by all users.

Cost Limits: While Azure offers cost-effective pricing options, users should be aware of the potential for unexpected costs. Credit-based offers such as the Free Trial have a spending limit that stops usage once the credit is exhausted, but Pay-as-you-go subscriptions do not have a built-in spending cap, so users should monitor their usage carefully to avoid unexpected charges.

| Resource Type | Limit |
| --- | --- |
| Virtual Machines | Up to 10,000 per subscription |
| Storage Accounts | Up to 250 per subscription |
| Virtual Network | Up to 500 per subscription |
| Load Balancers | Up to 200 per subscription |
| Public IP Addresses | Up to 10,000 per subscription |
| Virtual Network Gateway | Up to 1 per subscription |
| ExpressRoute Circuits | Up to 10 per subscription |
| AKS Cluster Nodes | Up to 5,000 per subscription |
| App Service Plans | Up to 100 per subscription |
| SQL Databases | Up to 30,000 per subscription |

Please note that these limits are subject to change and may vary depending on the specific subscription tier and region where the resources are deployed. Users should consult the Azure documentation for the most up-to-date information on resource limits.

These limits can be increased by contacting Azure support, but it is important to be aware of these constraints when planning your Azure infrastructure.
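A planned deployment can be checked against these limits before rollout. The sketch below copies a few figures from the table above into a dictionary; those figures may be outdated, and `over_limit` is a hypothetical helper written for this example, not an Azure API.

```python
# Limits copied from the table above; verify them against current Azure documentation.
SUBSCRIPTION_LIMITS = {
    "virtual_machines": 10_000,
    "storage_accounts": 250,
    "load_balancers": 200,
    "app_service_plans": 100,
}

def over_limit(planned, limits=SUBSCRIPTION_LIMITS):
    """Return {resource: (planned, limit)} for every planned count above its limit."""
    return {
        name: (count, limits[name])
        for name, count in planned.items()
        if name in limits and count > limits[name]
    }
```

An empty result means the plan fits within the listed limits; any entry in the result is a candidate for a quota increase request or for splitting the workload across subscriptions.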

Migrating Resources Between Subscriptions

In some cases, you may need to migrate resources between Azure Subscriptions. This could be due to organizational changes or to consolidate resources for better management. Azure provides tools and documentation to help you plan and execute these migrations with minimal disruption to your services.

Azure Subscription vs. Azure Management Groups

Azure Subscriptions and Azure Management Groups both serve as organizational units for managing resources in Azure. While Azure Subscriptions act as billing and access control boundaries, Azure Management Groups provide a higher level of organization, allowing you to manage multiple subscriptions within your organization.

Azure Management Groups can be used to apply policies, assign access permissions, and organize subscriptions hierarchically. This can help you manage resources more effectively across multiple subscriptions.

Managing Multiple Azure Subscriptions

In organizations with multiple Azure Subscriptions, it’s essential to manage them effectively to ensure consistency, compliance, and cost control across your cloud infrastructure. Here are some strategies for managing multiple Azure Subscriptions:

Use Azure Management Groups

Azure Management Groups help you organize and manage multiple subscriptions hierarchically. By creating a management group hierarchy, you can apply policies, assign access permissions, and manage resources consistently across all subscriptions within the hierarchy.

Implement Azure Policies

Azure Policies allow you to enforce compliance with your organization’s requirements and best practices across all subscriptions. By defining and applying policies at the management group level, you can ensure consistency and compliance across your entire cloud infrastructure.
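To make the idea of a policy rule concrete, here is a simplified sketch in Python. The dictionary follows the general "if/then" shape of an Azure Policy rule, but the evaluator is an assumption written for illustration: it handles only three operators, the region names are examples, and real policies are evaluated by the Azure platform with many more conditions and effects.

```python
# Simplified rule in the shape of an Azure Policy "policyRule"; region names are examples.
ALLOWED_LOCATIONS_RULE = {
    "if": {"field": "location", "notIn": ["eastus", "westeurope"]},
    "then": {"effect": "deny"},
}

def evaluate(rule, resource):
    """Return the rule's effect if its condition matches the resource, else None."""
    condition = rule["if"]
    value = resource.get(condition["field"])
    if "equals" in condition:
        matched = value == condition["equals"]
    elif "in" in condition:
        matched = value in condition["in"]
    elif "notIn" in condition:
        matched = value not in condition["notIn"]
    else:
        raise ValueError("unsupported condition")
    return rule["then"]["effect"] if matched else None
```

A resource located outside the two allowed regions would receive the "deny" effect, while a resource in an allowed region would not match the rule at all.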

Consolidate Billing

Consolidate billing across multiple subscriptions by using a single billing account or Enterprise Agreement (EA). This can simplify your billing process and provide a unified view of your organization’s cloud spending.

Implement Cross-Subscription Resource Management

Leverage Azure services like Azure Lighthouse to manage resources across multiple subscriptions. This enables you to perform cross-subscription management tasks, such as monitoring, security, and automation, from a single interface.

Monitor and Optimize Resource Usage Across Subscriptions

Regularly monitor your resource usage across all subscriptions to identify areas for cost savings and optimization. You can use Azure Cost Management tools and reports to gain insights into your spending and resource usage across multiple subscriptions.
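As a rough illustration of cross-subscription reporting, the sketch below sums cost records by any field, such as subscription or department tag. The record layout and the `cost_by` helper are assumptions made for this example; real usage data would come from a Cost Management export or report.

```python
from collections import defaultdict

def cost_by(records, key):
    """Sum the 'cost' field of usage records, grouped by the given key."""
    totals = defaultdict(float)
    for record in records:
        # Records missing the key (for example, untagged resources) are grouped together.
        totals[record.get(key, "untagged")] += record["cost"]
    return dict(totals)
```

Grouping the same records first by subscription and then by a department tag gives two views of spending, and the "untagged" bucket highlights resources that need better labeling.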

Understanding Azure Subscription Hierarchies

Azure Subscription hierarchies play a crucial role in organizing and managing resources across an organization. At the top level, there is the Azure account, which is associated with a unique email address and can have multiple subscriptions. Each subscription can contain multiple resource groups, which are logical containers for resources that are deployed within a subscription. Resource groups help to organize and manage resources based on their lifecycle and their relationship to each other.

The Azure hierarchy is a way of organizing resources across an Azure environment. It consists of four levels:

Management Group: The highest level of the hierarchy is the management group, which is used to manage policies and access across multiple subscriptions. A management group can contain subscriptions and other management groups.

Subscription: The next level down is the subscription, which is the basic unit of management in Azure. Each subscription has its own billing, policies, and access controls. Resources are created and managed within a subscription.

Resource Group: Within each subscription, resources can be organized into resource groups. A resource group is a logical container for resources that share common attributes, such as region, lifecycle, or security. Resources in a resource group can be managed collectively using policies, access controls, and tags.

Resource: The lowest level of the hierarchy is the resource itself. A resource is a manageable item, such as a virtual machine, storage account, or network interface. Resources can be created, updated, and deleted within a subscription and can be organized into resource groups.

The Azure hierarchy provides a flexible and scalable way to manage resources within an Azure environment. By organizing resources into logical containers, users can apply policies and access controls at a granular level, while still maintaining a high-level view of the entire Azure landscape. This can help to improve security, compliance, and efficiency when managing cloud resources.
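The four levels and the way settings flow down through them can be modeled with a small data structure. This is a conceptual sketch only: the `Scope` class and the names `contoso-root`, `prod-subscription`, `web-rg`, and `web-vm-01` are hypothetical, and the policy names are placeholders.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Scope:
    """One node in the hierarchy: management group, subscription, resource group, or resource."""
    name: str
    level: str
    parent: Optional["Scope"] = None
    policies: list = field(default_factory=list)

    def effective_policies(self):
        """Policies assigned here plus everything inherited from ancestor scopes."""
        inherited = self.parent.effective_policies() if self.parent else []
        return inherited + self.policies

# Hypothetical hierarchy, one node per level.
root = Scope("contoso-root", "management group", policies=["allowed-locations"])
sub = Scope("prod-subscription", "subscription", parent=root, policies=["require-tags"])
rg = Scope("web-rg", "resource group", parent=sub)
vm = Scope("web-vm-01", "resource", parent=rg)
```

Calling `vm.effective_policies()` walks up the chain, so the virtual machine picks up the policy assigned at the management group and the one assigned at the subscription even though nothing is assigned to it directly.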

Role-Based Access Control in Azure Subscriptions

Role-Based Access Control (RBAC) is a critical aspect of managing Azure Subscriptions. RBAC enables administrators to grant granular permissions to users, groups, or applications, ensuring that they have the necessary access to resources within a subscription. RBAC roles can be assigned at various levels, including the subscription level, the resource group level, or the individual resource level. This allows organizations to implement a least-privilege model, granting users only the access they need to perform their tasks.
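Scope inheritance in RBAC can be illustrated with a short sketch. Reader and Contributor are built-in Azure roles, but everything else here is an assumption for the example: the principals, the subscription and resource group IDs, and the `roles_for` helper, which approximates inheritance with a simple path-prefix check.

```python
# (principal, role, scope) assignments; IDs and names are placeholders.
ASSIGNMENTS = [
    ("alice", "Reader", "/subscriptions/sub-1"),
    ("bob", "Contributor", "/subscriptions/sub-1/resourceGroups/web-rg"),
]

def roles_for(principal, resource_scope, assignments=ASSIGNMENTS):
    """Return roles that apply to the principal at resource_scope, including inherited ones."""
    target = resource_scope.lower().rstrip("/")
    roles = set()
    for who, role, scope in assignments:
        scope = scope.lower().rstrip("/")
        # An assignment applies at its own scope and at every child scope beneath it.
        if who == principal and (target == scope or target.startswith(scope + "/")):
            roles.add(role)
    return roles
```

In this sketch, a role assigned at the subscription applies to every resource group and resource beneath it, while a role assigned at one resource group grants nothing in a sibling resource group, which is the basis of a least-privilege model.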

FAQs

What is an Azure Subscription?

An Azure Subscription is a logical container for resources that are deployed within an Azure account. It acts as both a billing and access control boundary.

What are the different types of Azure Subscriptions?

The main types of Azure Subscriptions are Free Trial, Pay-as-you-go, Enterprise Agreement, and Cloud Solution Provider (CSP).

What is the difference between Azure Subscriptions and Azure Resource Groups?

Azure Subscriptions act as a billing and access control boundary, while Azure Resource Groups are logical containers for resources based on their lifecycle and relationship to each other.

How can I manage multiple Azure Subscriptions?

Use Azure Management Groups, implement Azure Policies, consolidate billing, implement cross-subscription resource management, and monitor and optimize resource usage across subscriptions.

What are the limits associated with Azure Subscriptions?

Some notable limits listed in this article include a maximum of 500 virtual networks, 250 storage accounts, and 10,000 virtual machines per subscription. These limits can be increased by contacting Azure support, but it is important to be aware of these constraints when planning your Azure infrastructure.

What is the role of Role-Based Access Control (RBAC) in Azure Subscriptions?

RBAC is a critical aspect of managing Azure Subscriptions as it enables administrators to grant granular permissions to users, groups, or applications, ensuring that they have the necessary access to resources within a subscription.

How do Azure Management Groups help in managing multiple Azure Subscriptions?

Azure Management Groups provide a way to organize subscriptions into a hierarchy, making it easier to manage access control, policies, and compliance across multiple subscriptions.

How can I monitor and optimize resource usage across multiple Azure Subscriptions?

Use Azure Cost Management and Azure Monitor to track resource usage and optimize costs across all subscriptions in the organization.

What are some best practices for managing multiple Azure Subscriptions?

Some best practices include using Azure Management Groups, implementing Azure Policies, consolidating billing, implementing cross-subscription resource management, and monitoring and optimizing resource usage across subscriptions.

Can I increase the limits associated with my Azure Subscription?

Yes, you can request an increase in limits by contacting Azure support. However, it is important to plan your Azure infrastructure with the existing limits in mind and consider the impact of increased limits on your organization’s overall cloud strategy.

Conclusion

Understanding and effectively managing Azure Subscriptions is crucial for organizations using the Azure cloud platform. By implementing best practices for subscription management, organizing resources, and applying consistent policies across your infrastructure, you can optimize your cloud operations and make the most of your Azure investment. Regularly monitoring and optimizing resource usage across all subscriptions will ensure you are using Azure services efficiently and cost-effectively.

by Mark | Apr 20, 2023 | Azure Blobs, Azure Disks, Azure Files, Cloud Storage, Storage Accounts

Understanding Blob Storage and Blob-Hunting

What is Blob Storage?

Blob storage is a cloud-based service offered by various cloud providers, designed to store vast amounts of unstructured data such as images, videos, documents, and other types of files. It is highly scalable, cost-effective, and durable, making it an ideal choice for organizations that need to store and manage large data sets for applications like websites, mobile apps, and data analytics. With the increasing reliance on cloud storage solutions, data security and accessibility have become a significant concern. Organizations must prioritize protecting sensitive data from unauthorized access and potential threats to maintain the integrity and security of their storage accounts.

What is Blob-Hunting?

Blob-hunting refers to the unauthorized access and exploitation of blob storage accounts by cybercriminals. These malicious actors use various techniques, including scanning for public-facing storage accounts, exploiting vulnerabilities, and leveraging weak or compromised credentials, to gain unauthorized access to poorly protected storage accounts. Once they have gained access, they may steal sensitive data, alter files, hold the data for ransom, or use their unauthorized access to launch further attacks on the storage account’s associated services or applications. Given the potential risks and damage associated with blob-hunting, it is crucial to protect your storage account to maintain the security and integrity of your data and ensure the continuity of your operations.

Strategies for Protecting Your Storage Account

Implement Strong Authentication

One of the most effective ways to secure your storage account is by implementing strong authentication mechanisms. This includes using multi-factor authentication (MFA), which requires users to provide two or more pieces of evidence (factors) to prove their identity. These factors may include something they know (password), something they have (security token), or something they are (biometrics). By requiring multiple authentication factors, MFA significantly reduces the risk of unauthorized access due to stolen, weak, or compromised passwords.

Additionally, it is essential to choose strong, unique passwords for your storage account and avoid using the same password for multiple accounts. A strong password should be at least 12 characters long and include upper and lower case letters, numbers, and special symbols. Regularly updating your passwords and ensuring that they remain unique can further enhance the security of your storage account. Consider using a password manager to help you securely manage and store your passwords, ensuring that you can easily generate and use strong, unique passwords for all your accounts without having to memorize them.
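The password criteria described above can be expressed as a simple check. This is a minimal sketch using only the Python standard library; the function name `is_strong_password` is made up for the example, and a real deployment would normally rely on the identity provider's password policy instead.

```python
import string

def is_strong_password(password, min_length=12):
    """Check length plus the presence of upper, lower, digit, and special characters."""
    return (
        len(password) >= min_length
        and any(c.isupper() for c in password)
        and any(c.islower() for c in password)
        and any(c.isdigit() for c in password)
        and any(c in string.punctuation for c in password)
    )
```

A check like this only validates composition. It does not detect reused or previously breached passwords, which is one reason a password manager and MFA remain important.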

When it comes to protecting sensitive data in your storage account, it is also important to consider the use of hardware security modules (HSMs) or other secure key management solutions. These technologies can help you securely store and manage cryptographic keys, providing an additional layer of protection against unauthorized access and data breaches.

Limit Access and Assign Appropriate Permissions

Another essential aspect of securing your storage account is limiting access and assigning appropriate permissions to users. This can be achieved through role-based access control (RBAC), which allows you to assign specific permissions to users based on their role in your organization. By using RBAC, you can minimize the risk of unauthorized access by granting users the least privilege necessary to perform their tasks. This means that users only have the access they need to complete their job responsibilities and nothing more.

Regularly reviewing and updating user roles and permissions is essential to ensure they align with their current responsibilities and that no user has excessive access to your storage account. It is also crucial to remove access for users who no longer require it, such as employees who have left the organization or changed roles. Implementing a regular access review process can help you identify and address potential security risks associated with excessive or outdated access permissions.

Furthermore, creating access policies with limited duration and scope can help prevent excessive access to your storage account. When granting temporary access, make sure to set an expiration date to ensure that access is automatically revoked when no longer needed. Additionally, consider implementing network restrictions and firewall rules to limit access to your storage account based on specific IP addresses or ranges. This can help reduce the attack surface and protect your storage account from unauthorized access attempts originating from unknown or untrusted networks.
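The combination of an expiration date and an IP allowlist can be sketched as follows. This models the decision logic only and is not how a storage service implements it; `access_allowed` and its parameters are hypothetical names, and the addresses in any usage would be your own trusted ranges.

```python
from datetime import datetime, timezone
from ipaddress import ip_address, ip_network

def access_allowed(client_ip, expires_at, allowed_networks, now=None):
    """Allow a request only if the grant has not expired and the IP is in an allowed range."""
    now = now or datetime.now(timezone.utc)
    if now >= expires_at:
        return False  # Temporary access has lapsed.
    address = ip_address(client_ip)
    return any(address in ip_network(net) for net in allowed_networks)
```

Both conditions must hold: a request from a trusted network is still rejected once the grant expires, and a valid grant is still rejected when the request comes from an unlisted address.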

Encrypt Data at Rest and in Transit

Data encryption is a critical aspect of securing your storage account. Ensuring that your data is encrypted both at rest and in transit makes it more difficult for cybercriminals to access and exploit your sensitive information, even if they manage to gain unauthorized access to your storage account.

Data at rest should be encrypted using server-side encryption, in which the storage service encrypts data as it is written to the cloud provider’s servers and decrypts it when it is read. This can be achieved using encryption keys managed by the cloud provider or your own encryption keys, depending on your organization’s security requirements and compliance obligations. Implementing client-side encryption, where data is encrypted on the client before being uploaded to the storage account, can provide an additional layer of protection, especially for highly sensitive data.

Data in transit, on the other hand, should be encrypted using Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL) protocol. TLS secures the data as it travels between the client and the server over a network connection. Ensuring that all communication between your applications, services, and storage account is encrypted can help protect your data from eavesdropping, man-in-the-middle attacks, and other potential threats associated with data transmission.
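On the client side, one way to enforce encrypted transport is to refuse older protocol versions outright. The sketch below uses Python's standard `ssl` module; the helper name `strict_tls_context` and the choice of TLS 1.2 as the floor are assumptions for the example.

```python
import ssl

def strict_tls_context():
    """Build a client context that verifies certificates and refuses anything older than TLS 1.2."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The resulting context can be passed to standard-library clients, for example through the `context` argument of `urllib.request.urlopen`, so that connections to the storage endpoint fail rather than fall back to a weaker protocol.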

By implementing robust encryption practices, you significantly reduce the risk of unauthorized access to your sensitive data, ensuring that your storage account remains secure and compliant with industry standards and regulations.

Regularly Monitor and Audit Activity

Monitoring and auditing activity in your storage account is essential for detecting and responding to potential security threats. Setting up logging and enabling monitoring tools allows you to track user access, file changes, and other activities within your storage account, providing you with valuable insights into the security and usage of your data.

Regularly reviewing the logs helps you identify any suspicious activity or potential security vulnerabilities, enabling you to take immediate action to mitigate potential risks and maintain a secure storage environment. Additionally, monitoring and auditing activity can also help you optimize your storage account’s performance and cost-effectiveness by identifying unused resources, inefficient data retrieval patterns, and opportunities for data lifecycle management.

Consider integrating your storage account monitoring with a security information and event management (SIEM) system or other centralized logging and monitoring solutions. This can help you correlate events and activities across your entire organization, providing you with a comprehensive view of your security posture and enabling you to detect and respond to potential threats more effectively.
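As a small example of the kind of analysis a log review might include, the sketch below flags client IPs with repeated authorization failures. The log record layout (`ip` and `status` fields) and the threshold of five failures are assumptions; real storage logs have a richer schema and a SIEM would apply far more sophisticated rules.

```python
from collections import Counter

def suspicious_ips(log_entries, max_failures=5):
    """Return IPs whose count of failed (HTTP 401/403) requests exceeds max_failures."""
    failures = Counter(
        entry["ip"] for entry in log_entries if entry["status"] in (401, 403)
    )
    return sorted(ip for ip, count in failures.items() if count > max_failures)
```

A burst of denied requests from one address is a common sign of someone probing for open containers or guessing credentials, so even a simple count like this can surface activity worth investigating.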

Enable Versioning and Soft Delete

Implementing versioning and soft delete features can help protect your storage account against accidental deletions and modifications, as well as malicious attacks. By enabling versioning, you can maintain multiple versions of your blobs, allowing you to recover previous versions in case of accidental overwrites or deletions. This can be particularly useful for organizations that frequently update their data or collaborate on shared files, ensuring that no critical information is lost due to human error or technical issues.

Soft delete, on the other hand, retains deleted blobs for a specified period, giving you the opportunity to recover them if necessary. This feature can be invaluable in scenarios where data is accidentally deleted or maliciously removed by an attacker, providing you with a safety net to restore your data and maintain the continuity of your operations.

It is important to regularly review and adjust your versioning and soft delete settings to ensure that they align with your organization’s data retention and recovery requirements. This includes setting appropriate retention periods for soft-deleted data and ensuring that versioning is enabled for all critical data sets in your storage account. Additionally, consider implementing a process for regularly reviewing and purging outdated or unnecessary versions and soft-deleted blobs to optimize storage costs and maintain a clean storage environment.
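When choosing a soft delete retention period, it helps to reason about the recovery window explicitly. The following sketch shows the arithmetic only; `is_recoverable` is a hypothetical helper and not part of any storage SDK.

```python
from datetime import datetime, timedelta, timezone

def is_recoverable(deleted_at, retention_days, now=None):
    """True while a soft-deleted blob is still inside its retention window."""
    now = now or datetime.now(timezone.utc)
    return now < deleted_at + timedelta(days=retention_days)
```

For instance, a blob deleted nine days ago is still recoverable under a 14-day retention period but is gone under a 7-day one, so the retention setting should be at least as long as the time it typically takes your team to notice a deletion.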

Perform Regular Backups and Disaster Recovery Planning

Having a comprehensive backup strategy and disaster recovery plan in place is essential for protecting your storage account and ensuring the continuity of your operations in case of a security breach, accidental deletion, or other data loss events. Developing a backup strategy involves regularly creating incremental and full backups of your storage account, ensuring that you have multiple copies of your data stored in different locations. This helps you recover your data quickly and effectively in case of an incident, minimizing downtime and potential data loss.

Moreover, regularly testing your disaster recovery plan is critical to ensure its effectiveness and make necessary adjustments as needed. This includes simulating data loss scenarios, verifying the integrity of your backups, and reviewing your recovery procedures to ensure that they are up-to-date and aligned with your organization’s current needs and requirements.
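One simple way to verify backup integrity is to compare cryptographic hashes of the source file and its backup copy. The sketch below uses SHA-256 from Python's standard library; the helper names `sha256_of` and `backup_matches` are assumptions for the example, and it works on local files rather than on blobs directly.

```python
import hashlib

def sha256_of(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large backups do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def backup_matches(original_path, backup_path):
    """Compare the SHA-256 digests of a source file and its backup copy."""
    return sha256_of(original_path) == sha256_of(backup_path)
```

Running a comparison like this as part of a scheduled recovery test catches silent corruption or incomplete copies before the backup is actually needed.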

In addition to creating and maintaining backups, implementing cross-region replication or geo-redundant storage can further enhance your storage account’s resilience against data loss events. By replicating your data across multiple geographically distributed regions, you can ensure that your storage account remains accessible and functional even in the event of a regional outage or disaster, allowing you to maintain the continuity of your operations and meet your organization’s recovery objectives.

Implementing Security Best Practices

In addition to the specific strategies mentioned above, implementing general security best practices for your storage account can further enhance its security and resilience against potential threats. These best practices may include:

- Regularly updating software and applying security patches to address known vulnerabilities

- Training your team on security awareness and best practices

- Performing vulnerability assessments and penetration testing to identify and address potential security weaknesses

- Implementing a strong security policy and incident response plan to guide your organization’s response to security incidents and minimize potential damage

- Segmenting your network and implementing network security controls, such as firewalls and intrusion detection/prevention systems, to protect your storage account and associated services from potential threats

- Regularly reviewing and updating your storage account configurations and security settings to ensure they align with industry best practices and your organization’s security requirements

- Implementing a data classification and handling policy to ensure that sensitive data is appropriately protected and managed throughout its lifecycle

- Ensuring that all third-party vendors and service providers that have access to your storage account adhere to your organization’s security requirements and best practices.

Conclusion

Protecting your storage account against blob-hunting is crucial for maintaining the security and integrity of your data and ensuring the continuity of your operations. By implementing strong authentication, limiting access, encrypting data, monitoring activity, and following security best practices, you can significantly reduce the risk of unauthorized access and data breaches. Being proactive in securing your storage account and safeguarding your valuable data from potential threats is essential in today’s increasingly interconnected and digital world.

by Mark | Apr 13, 2023 | Azure, Azure Blobs, Azure Files, Storage Accounts

Microsoft’s Azure Data Box is a data transfer solution designed to simplify and streamline the process of moving large amounts of data to Azure cloud storage. With the continuous growth of data volumes, businesses are seeking efficient and cost-effective ways to transfer and store data in the cloud. This comprehensive article provides an in-depth analysis of the key factors impacting costs, best practices, and a step-by-step guide to using Azure Data Box. By discussing tradeoffs and challenges associated with various approaches, this article aims to inform and engage readers who are considering transferring data to Azure.

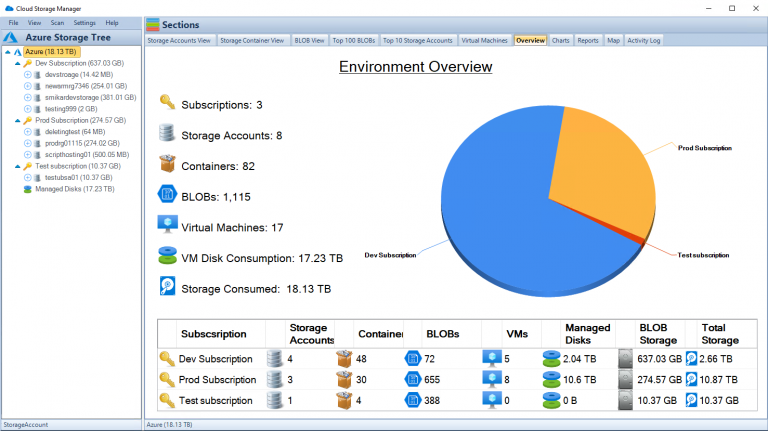

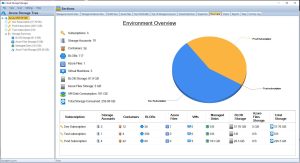

This article also highlights the importance of using tools such as the free Azure Blob Storage Cost Estimator and the Cloud Storage Manager software. These tools help users understand storage costs and options, provide insights into Azure Blob and File storage consumption, and generate reports on storage usage and growth trends to save money on Azure Storage.

Azure Data Box – An Overview

Azure Data Box is a family of physical devices that enable secure and efficient data transfer to Azure cloud storage. The Data Box family includes several products, each designed for different data transfer requirements:

Azure Data Box Disk

Designed for small to medium-sized data transfers, the Data Box Disk is a portable SSD device with an 8TB capacity. It supports data transfer rates of up to 450MB/s and is suitable for projects that require rapid data transfer.

Azure Data Box

The Azure Data Box is a rugged, tamper-resistant device designed for large-scale data transfers. With a 100TB capacity, it supports data transfer rates of up to 1.5GB/s, making it suitable for projects involving significant amounts of data.

Azure Data Box Heavy

Designed for massive data transfer projects, the Data Box Heavy has a 1PB capacity and connects over network interfaces of up to 40 Gbps. This device is ideal for large enterprises looking to move vast amounts of data to the cloud.

Azure Data Box Gateway

The Azure Data Box Gateway is a virtual appliance that enables data transfer from on-premises environments to Azure Blob storage. This appliance is suitable for users who require a continuous, incremental data transfer solution to the cloud.

Azure Data Box Edge

The Azure Data Box Edge, since renamed Azure Stack Edge, is a physical appliance that combines data transfer and edge computing capabilities. This device can process and analyze data locally before transferring it to the cloud, making it suitable for scenarios where real-time data processing is essential.

Key Factors Impacting Costs

When considering Azure Data Box, it’s crucial to understand the key factors that influence costs:

Device Usage

Azure Data Box devices are available on a pay-as-you-go basis, with pricing depending on the device type and duration of usage. When planning a data transfer project, it’s essential to select the most suitable device based on the project’s data volume and timeframe.

Data Transfer

Data transfer into Azure is typically free, so the upload itself usually adds no bandwidth charge. Charges arise when data leaves Azure, and depending on the data volume and frequency of those transfers, they can significantly impact the overall expenses of a project.

Storage

Azure offers various storage options, including Blob Storage, File Storage, and Data Lake Storage. Each storage option has its pricing structure, with factors such as redundancy, access tier, and retention period affecting the costs.

Egress Fees

When transferring data out of Azure, egress fees may apply. These fees are based on the amount of data transferred and vary depending on the geographical region.

Data Processing

For scenarios involving Azure Data Box Edge, additional costs may be associated with data processing and analysis at the edge. These costs depend on the complexity and volume of the data being processed.
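To see how these factors combine, here is a minimal Python sketch that adds up device, shipping, storage, and egress charges. Every rate is a caller-supplied placeholder rather than a published Azure price, the field names are hypothetical, and edge processing costs are left out for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransferCostInputs:
    """All rates are caller-supplied placeholders, not published Azure prices."""
    device_fee_per_day: float         # device usage charge per day
    days_on_site: int                 # days the device is held
    shipping_fee: float               # shipping charge for the device
    stored_gb: float                  # data kept in Azure after upload
    storage_rate_per_gb_month: float  # depends on redundancy and access tier
    months_retained: int
    egress_gb: float = 0.0            # data later read back out of Azure
    egress_rate_per_gb: float = 0.0


def estimate_total_cost(c: TransferCostInputs) -> dict[str, float]:
    """Return each cost component plus their sum under the key 'total'."""
    parts = {
        "device": c.device_fee_per_day * c.days_on_site,
        "shipping": c.shipping_fee,
        "storage": c.stored_gb * c.storage_rate_per_gb_month * c.months_retained,
        "egress": c.egress_gb * c.egress_rate_per_gb,
    }
    parts["total"] = sum(parts.values())
    return parts


if __name__ == "__main__":
    # Hypothetical numbers chosen only to show the arithmetic.
    example = TransferCostInputs(10.0, 5, 20.0, 1000.0, 0.5, 2, 100.0, 0.25)
    for name, amount in estimate_total_cost(example).items():
        print(f"{name:>8}: {amount:.2f}")
```

Replace the placeholder rates with figures from the Azure pricing pages for your region before relying on the result.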

Azure Data Box Best Practices

To ensure a successful data transfer project with Azure Data Box, consider the following best practices:

Assess Your Data Transfer Needs

Before selecting an Azure Data Box device, thoroughly assess your data transfer requirements. Consider factors such as data volume, transfer speed, and project timeline to choose the most suitable device for your needs.
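As a rough illustration of this assessment, the Python sketch below picks the smallest device whose nominal capacity fits a given data volume. The capacities are the ones quoted in this article; usable capacity is lower in practice, so treat the thresholds as assumptions and confirm them before ordering.

```python
# Nominal capacities as quoted in this article, in terabytes (TB).
# Usable capacity is lower in practice, so treat these as upper bounds.
DEVICE_CAPACITY_TB = [
    ("Data Box Disk", 8),
    ("Data Box", 100),
    ("Data Box Heavy", 1000),
]


def suggest_device(data_tb: float) -> str:
    """Return the smallest device whose nominal capacity fits data_tb."""
    if data_tb <= 0:
        raise ValueError("data_tb must be positive")
    for name, capacity_tb in DEVICE_CAPACITY_TB:
        if data_tb <= capacity_tb:
            return name
    return "multiple devices or a network-based transfer"


if __name__ == "__main__":
    for size in (5, 60, 400, 2500):
        print(f"{size} TB -> {suggest_device(size)}")
```

A real decision would also weigh the project timeline and available bandwidth, which this sketch ignores.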

Data Compression

Compressing data before copying it to an Azure Data Box can shorten copy time and reduce the storage you pay for. Use a lossless compression format so the original data can be restored exactly, and keep in mind that already-compressed files, such as video or JPEG images, gain little from a second pass.
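The sketch below shows one way to compress a payload and confirm that it restores exactly, using Python's standard `gzip` module. The sample data and the function name are hypothetical; gzip is just one lossless option among several.

```python
import gzip
import hashlib


def compress_and_verify(payload: bytes) -> tuple[bytes, float]:
    """Gzip payload, confirm it round-trips, and return (compressed, ratio)."""
    compressed = gzip.compress(payload, compresslevel=6)
    # Lossless check: decompressing must reproduce the original bytes exactly.
    restored = gzip.decompress(compressed)
    if hashlib.sha256(restored).digest() != hashlib.sha256(payload).digest():
        raise ValueError("round-trip mismatch")
    ratio = len(compressed) / len(payload) if payload else 1.0
    return compressed, ratio


if __name__ == "__main__":
    # Hypothetical, highly repetitive sample data.
    sample = b"timestamp,sensor,value\n" * 10_000
    blob, ratio = compress_and_verify(sample)
    print(f"{len(sample)} bytes -> {len(blob)} bytes (ratio {ratio:.3f})")
```

Note that compressed archives arrive in Azure as archives, so any application reading the data later has to decompress it first.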

Secure Data Transfer

Azure Data Box devices use encryption to protect data during transit and at rest. However, it’s essential to implement additional security measures, such as data access controls and data classification policies, to ensure the highest level of security for your data.

Monitor and Optimize

Continuously monitor the performance of your data transfer process to identify potential bottlenecks and optimize data transfer speeds. Leverage tools like the Azure Blob Storage Cost Estimator and Cloud Storage Manager to gain insights into your storage consumption and optimize costs.

Data Validation

Ensure that the data being transferred is accurate and valid. Implement data validation processes to catch errors and inconsistencies in the data before transferring it to Azure Data Box.

Network Configuration

Optimize your network configuration to maximize data transfer speeds. Factors such as bandwidth, latency, and network topology can significantly impact the efficiency of the data transfer process.

Incremental Data Transfer

For ongoing data transfer projects, consider using incremental data transfer methods to minimize data transfer time and costs. Azure Data Box Gateway and Data Box Edge provide options for continuous, incremental data transfer to Azure Blob storage.

How to Use Azure Data Box

To use Azure Data Box for data transfer, follow these steps:

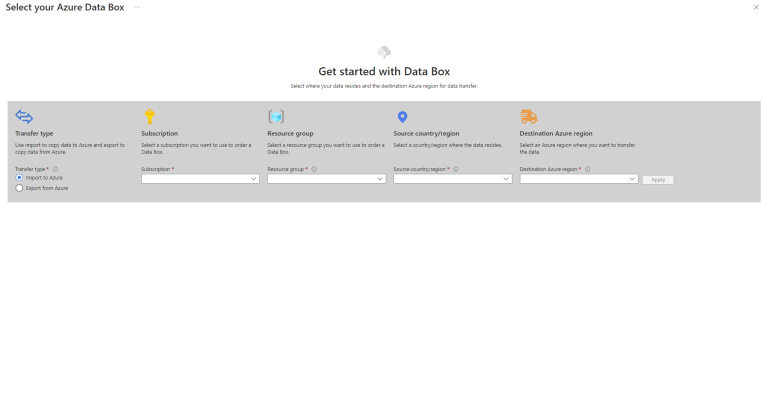

Order an Azure Data Box Device

Based on your data transfer requirements, order the appropriate Azure Data Box device from the Azure portal. Specify the destination Azure storage account where you want to transfer your data.

Receive and Set up the Device

Once you receive the device, connect it to your local network and configure the network settings. Power on the device and follow the setup instructions provided by Microsoft.

Copy Data to the Device

Using the Azure Data Box tools, copy your data to the device. Ensure that the data is properly organized and compressed for efficient data transfer.

Ship the Device

After copying the data, securely pack the device and ship it back to the Azure datacenter. Microsoft will process the device and upload the data to the specified Azure storage account.

Verify Data Transfer

Once the data is uploaded to your Azure storage account, verify the data transfer by comparing the source and destination data. Ensure that all data has been successfully transferred and is accessible in your Azure storage account.
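One way to compare source and destination is to build a checksum manifest for each side and diff the two. The Python sketch below assumes both copies are reachable as local directories (for example, a mounted file share or a downloaded sample); the function names are illustrative and not part of any Azure tool.

```python
import hashlib
from pathlib import Path


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path relative to root to its SHA-256 hex digest."""
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256()
            with path.open("rb") as handle:
                # Read in 1 MiB chunks so large files do not exhaust memory.
                for chunk in iter(lambda: handle.read(1024 * 1024), b""):
                    digest.update(chunk)
            manifest[path.relative_to(root).as_posix()] = digest.hexdigest()
    return manifest


def compare_manifests(source: dict[str, str], dest: dict[str, str]) -> dict[str, list[str]]:
    """Report files missing from dest, unexpected in dest, or with different content."""
    return {
        "missing": sorted(set(source) - set(dest)),
        "extra": sorted(set(dest) - set(source)),
        "changed": sorted(k for k in source.keys() & dest.keys() if source[k] != dest[k]),
    }
```

An empty report in all three categories suggests the copy is complete and intact. For very large datasets, hashing a representative sample may be more practical than hashing everything.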

Tradeoffs and Challenges

While Azure Data Box simplifies data transfer to Azure, it’s essential to be aware of the tradeoffs and challenges involved:

Limited Availability

Azure Data Box devices are available only in select regions, which may limit the service’s accessibility for some users. Check the availability of Azure Data Box devices in your region before planning a data transfer project.

Data Transfer Time

Data transfer time can vary depending on the device type, data volume, and network speed. While Azure Data Box devices are designed for high-speed data transfer, some projects may still require a significant amount of time to complete.
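A quick back-of-the-envelope calculation helps set expectations. The Python sketch below estimates copy time from data volume and sustained throughput; the default efficiency factor of 0.7 is an assumption standing in for real-world overhead, not a measured value.

```python
def transfer_hours(data_tb: float, throughput_mb_per_s: float, efficiency: float = 0.7) -> float:
    """Estimate hours to copy data_tb terabytes at a sustained throughput.

    efficiency is an assumed fraction of the rated throughput actually achieved.
    """
    if data_tb <= 0 or throughput_mb_per_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("inputs must be positive and efficiency within (0, 1]")
    data_mb = data_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    seconds = data_mb / (throughput_mb_per_s * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical scenario: 80 TB over a link sustaining 1,000 MB/s on paper.
    print(f"{transfer_hours(80, 1000):.1f} hours")
```

This covers only the local copy onto the device. Shipping and the upload at the datacenter add further time that depends on your region and carrier.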

Device Handling

Azure Data Box devices are physical devices that require proper handling during shipping and setup. Mishandling can lead to data loss or device damage, impacting the success of your data transfer project.

Data Security

Though Azure Data Box devices use encryption to protect data during transit and at rest, ensuring data security throughout the entire data transfer process is crucial. Implementing additional security measures, such as data access controls and data classification policies, is necessary to guarantee the security of your data.

Data Transfer Costs

While Azure Data Box enables efficient data transfer, it’s essential to consider the overall costs associated with the data transfer process. Factors such as device usage fees, storage costs, and egress fees can impact the total project cost. Comparing the costs of using Azure Data Box with alternative data transfer methods can help determine the most cost-effective solution for your needs.

Network Configuration and Bandwidth

Optimizing your network configuration and ensuring sufficient bandwidth are essential to achieve the maximum data transfer speeds offered by Azure Data Box devices. Network limitations, such as low bandwidth or high latency, can negatively impact the efficiency of the data transfer process.

Importance of Considering the Impact on Data Transfer Decisions

When making decisions about transferring data to Azure, it’s vital to consider the impact of various factors on the overall success and cost of your project. Understanding the tradeoffs and challenges involved in using Azure Data Box, as well as considering alternative data transfer methods, can help you make informed decisions that best meet your needs and budget.

Data Migration Strategy

Developing a comprehensive data migration strategy is crucial for a successful data transfer project. This strategy should include an assessment of data transfer needs, selection of the most suitable Azure Data Box device, and a timeline for the data transfer process.

Cost Management

Understanding and managing the costs associated with Azure Data Box and Azure storage services are essential for optimizing expenses. Utilizing tools such as the Azure Blob Storage Cost Estimator and Cloud Storage Manager can provide valuable insights into storage costs and usage trends, helping businesses save money on their Azure Storage.

Compliance and Regulations

When transferring data to Azure, businesses must ensure compliance with industry-specific regulations and data protection laws. Understanding the requirements of these regulations and implementing appropriate measures to maintain compliance is essential for a successful data transfer project.

Disaster Recovery and Business Continuity

As part of a comprehensive data transfer strategy, businesses should consider the impact of data migration on disaster recovery and business continuity plans. Ensuring that data remains accessible and recoverable during and after the data transfer process is crucial for minimizing downtime and maintaining business operations.

Conclusion

Azure Data Box is an efficient and secure solution for transferring large volumes of data to Azure cloud storage. By understanding the key factors impacting costs, following best practices, and considering the tradeoffs and challenges associated with Azure Data Box, businesses can successfully transfer their data to Azure while optimizing costs. Utilizing tools like the Azure Blob Storage Cost Estimator and Cloud Storage Manager can further enhance the visibility and management of your Azure storage, ultimately saving money and improving your overall cloud storage experience.

by Mark | Apr 12, 2023 | Azure, Azure Blobs, Azure Disks, Azure FIles, Azure Queues, Azure Tables, Blob Storage, Cloud Computing, Cloud Storage, Security, Storage Accounts

In today’s fast-paced and technology-driven world, cloud computing has become an essential component of modern business operations. Microsoft Azure, a leading cloud platform, offers a wide range of services and tools to help organizations manage their infrastructure efficiently. One crucial aspect of managing Azure resources is the Azure Resource Group, a logical container for resources deployed within an Azure subscription. In this comprehensive guide, we’ll explore the best practices for organizing Azure Resource Groups, enabling you to optimize your cloud infrastructure, streamline management, and enhance the security and compliance of your resources.

Why Organize Your Azure Resource Groups?

Understanding the importance of organizing Azure Resource Groups is essential to leveraging their full potential. Efficient organization of your resource groups can lead to numerous benefits that impact various aspects of your cloud infrastructure management:

- Improved resource management: Proper organization of Azure Resource Groups allows you to manage your resources more effectively, making it easier to deploy, monitor, and maintain your cloud infrastructure. This can result in increased productivity and more efficient use of resources.

- Simplified billing and cost tracking: When resources are organized systematically, it becomes simpler to track and allocate costs associated with your cloud infrastructure. This can lead to better budgeting, cost optimization, and overall financial management.

- Enhanced security and compliance: Organizing your Azure Resource Groups with security and compliance in mind can help mitigate potential risks and ensure the protection of your resources. This involves implementing access controls, isolating sensitive resources, and monitoring for security and compliance using Azure Policy.

- Streamlined collaboration among teams: An organized Azure Resource Group structure promotes collaboration between teams, making it easier for them to work together on projects and share resources securely.

Now that we understand the significance of organizing Azure Resource Groups, let’s dive into the best practices that can help you achieve these benefits.

Define a Consistent Naming Convention

Creating a consistent naming convention for your resource groups is the first step towards effective organization. This practice will enable you and your team to quickly identify and manage resources within your Azure environment. In creating a naming convention, you should consider incorporating the following information:

- Project or application name: Including the project or application name in your resource group name ensures that resources are easily associated with their corresponding projects or applications. This can be especially helpful when working with multiple projects or applications across your organization.

- Environment (e.g., dev, test, prod): Specifying the environment (e.g., development, testing, or production) in your resource group name allows you to quickly differentiate between resources used for various stages of your project lifecycle. This can help you manage resources more efficiently and reduce the risk of accidentally modifying or deleting the wrong resources.

- Geographic location: Including the geographic location in your resource group name can help you manage resources based on their physical location, making it easier to comply with regional regulations and optimize your cloud infrastructure for performance and latency.

- Department or team name: Adding the department or team name to your resource group name can improve collaboration between teams, ensuring that resources are easily identifiable and accessible by the appropriate team members.
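The elements above can be combined into a name programmatically so that every team produces the same format. The Python sketch below assumes a hypothetical `rg-<app>-<env>-<region>-<team>` pattern and applies a conservative check against Azure's resource group naming rules (1 to 90 characters; letters, digits, underscores, hyphens, periods, and parentheses; no trailing period).

```python
import re

# Conservative ASCII-only version of the resource group naming rules.
_VALID_NAME = re.compile(r"[A-Za-z0-9_.()\-]{1,90}")


def build_resource_group_name(app: str, env: str, region: str, team: str) -> str:
    """Compose rg-<app>-<env>-<region>-<team> (a hypothetical pattern) in lowercase."""
    parts = [p.strip().lower().replace(" ", "") for p in (app, env, region, team)]
    if not all(parts):
        raise ValueError("every component must be non-empty")
    name = "rg-" + "-".join(parts)
    if not _VALID_NAME.fullmatch(name) or name.endswith("."):
        raise ValueError(f"invalid resource group name: {name!r}")
    return name


if __name__ == "__main__":
    print(build_resource_group_name("Payments", "prod", "West Europe", "Finance"))
```

The order and separators are a matter of preference. What matters is that the convention is written down and applied the same way everywhere.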

Group Resources Based on Lifecycle and Management

Another essential practice in organizing Azure Resource Groups is to group resources based on their lifecycle and management requirements. This approach can help you better manage and maintain your cloud infrastructure by simplifying resource deployment, monitoring, and deletion. To achieve this, consider the following:

- Group resources with similar lifecycles: Resources that share a lifecycle, meaning they are deployed, updated, and deleted together, should be grouped within the same resource group. For example, keeping development, testing, and production resources in separate groups lets you manage each environment on its own schedule and simplifies deployment, monitoring, and maintenance tasks.

- Group resources based on ownership and responsibility: Organizing resources according to the teams or departments responsible for their management can help improve collaboration and access control. By grouping resources in this manner, you can ensure that the appropriate team members have access to the necessary resources while maintaining proper security and access controls.

- Group resources with similar management requirements: Resources that require similar management tasks or share common dependencies should be grouped together. This can help streamline resource management and monitoring, as well as ensure that resources are consistently maintained and updated.

Use Tags to Enhance Organization

Tags are a powerful tool for organizing resources beyond the scope of resource groups. By implementing a consistent tagging strategy, you can further enhance your cloud infrastructure’s organization and management. Some of the key benefits of using tags include:

- Filter and categorize resources for reporting and analysis: Tags can be used to filter and categorize resources based on various criteria, such as project, environment, or department. This can help you generate more accurate reports and analyses, enabling you to make more informed decisions about your cloud infrastructure.

- Streamline cost allocation and tracking: Tags can be used to associate resources with specific cost centers or projects, making it easier to allocate and track costs across your organization. This can help you optimize your cloud infrastructure costs and better manage your budget.

- Support access control and security: Tags do not grant or restrict access by themselves, but they make it easier to see which resources belong to a given role or department when you assign permissions, and governance tools such as Azure Policy can require or audit them. This can help you maintain a secure and compliant cloud infrastructure by ensuring that users only have access to the resources they need.
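To illustrate tag-based cost allocation, here is a minimal Python sketch that totals monthly cost per tag value. The inventory, tag names, and costs are hypothetical; in practice the data would come from a cost export or resource inventory.

```python
from collections import defaultdict


def cost_by_tag(resources: list[dict], tag_key: str) -> dict[str, float]:
    """Sum monthly cost per value of tag_key; untagged resources go under 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for resource in resources:
        value = resource.get("tags", {}).get(tag_key, "untagged")
        totals[value] += resource["monthly_cost"]
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical inventory for illustration only.
    inventory = [
        {"name": "vm-web-01", "monthly_cost": 120.0, "tags": {"costcenter": "marketing"}},
        {"name": "sql-orders", "monthly_cost": 300.0, "tags": {"costcenter": "sales"}},
        {"name": "st-archive", "monthly_cost": 40.0, "tags": {"costcenter": "marketing"}},
        {"name": "vm-scratch", "monthly_cost": 15.0},
    ]
    for center, total in sorted(cost_by_tag(inventory, "costcenter").items()):
        print(f"{center}: {total:.2f}")
```

The "untagged" bucket is worth watching: a large total there usually means the tagging strategy is not being applied consistently.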

Design for Security and Compliance

Organizing Azure Resource Groups with security and compliance in mind can help minimize risks and protect your resources. To achieve this, consider the following best practices:

- Isolate sensitive resources in dedicated resource groups: Sensitive resources, such as databases containing personal information or mission-critical applications, should be isolated in dedicated resource groups. This can help protect these resources by limiting access and reducing the risk of unauthorized access or modification.

- Implement role-based access control (RBAC) for resource groups: RBAC allows you to grant specific permissions to users based on their roles, ensuring that they only have access to the resources necessary to perform their job duties. Implementing RBAC for resource groups can help you maintain a secure and compliant cloud infrastructure.

- Monitor resource groups for security and compliance using Azure Policy: Azure Policy is a powerful tool for monitoring and enforcing compliance within your cloud infrastructure. By monitoring your resource groups using Azure Policy, you can identify and remediate potential security and compliance risks before they become critical issues.
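As an example of what such monitoring can look like, the Python sketch below builds the body of a policy definition that flags resource groups missing a given tag. It is modelled on the structure of Azure's built-in "Require a tag on resource groups" policy as best I understand it; the function name is hypothetical, and the JSON should be checked against current Azure Policy documentation before use.

```python
import json


def require_tag_policy(effect: str = "audit") -> dict:
    """Build a policy definition body that flags resource groups missing a tag."""
    if effect not in {"audit", "deny"}:
        raise ValueError("effect must be 'audit' or 'deny'")
    return {
        "mode": "All",
        "parameters": {
            "tagName": {
                "type": "String",
                "metadata": {"displayName": "Tag Name"},
            }
        },
        "policyRule": {
            "if": {
                "allOf": [
                    {
                        "field": "type",
                        "equals": "Microsoft.Resources/subscriptions/resourceGroups",
                    },
                    {
                        # True when the tag named by the parameter is absent.
                        "field": "[concat('tags[', parameters('tagName'), ']')]",
                        "exists": "false",
                    },
                ]
            },
            "then": {"effect": effect},
        },
    }


if __name__ == "__main__":
    print(json.dumps(require_tag_policy(), indent=2))
```

Starting with the `audit` effect reports non-compliant resource groups without blocking anyone. Switching to `deny` enforces the rule once teams have had time to adapt.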

Leverage Azure Management Groups

Azure Management Groups offer a higher-level organization structure for managing your Azure subscriptions and resource groups. Using management groups can help you achieve the following benefits:

- Enforce consistent policies and access control across multiple subscriptions: Management groups allow you to define and enforce policies and access controls across multiple Azure subscriptions, ensuring consistent security and compliance across your entire cloud infrastructure.

- Simplify governance and compliance at scale: As your organization grows and your cloud infrastructure expands, maintaining governance and compliance can become increasingly complex. Management groups can help you simplify this process by providing a centralized location for managing policies and access controls across your subscriptions and resource groups.

- Organize subscriptions and resource groups based on organizational structure: Management groups can be used to organize subscriptions and resource groups according to your organization’s structure, such as by department, team, or project. This can help you manage resources more efficiently and ensure that the appropriate team members have access to the necessary resources.
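Policies assigned at a management group are inherited by everything beneath it. The Python sketch below models that inheritance as a simple tree walk; the scope names and policy labels are hypothetical, and the model leaves out details such as exclusions and exemptions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Scope:
    """A node in the hierarchy: management group, subscription, or resource group."""
    name: str
    policies: list[str] = field(default_factory=list)
    children: list["Scope"] = field(default_factory=list)


def effective_policies(root: Scope, target: str, inherited: tuple = ()) -> Optional[list[str]]:
    """Return the policies applying to target: its own plus those of every ancestor."""
    current = inherited + tuple(root.policies)
    if root.name == target:
        return list(current)
    for child in root.children:
        found = effective_policies(child, target, current)
        if found is not None:
            return found
    return None  # target is not in this subtree


if __name__ == "__main__":
    # Hypothetical hierarchy for illustration only.
    tree = Scope("mg-root", ["allowed-locations"], [
        Scope("mg-production", ["require-tags"], [
            Scope("sub-payments", [], [
                Scope("rg-payments-prod", ["deny-public-ip"]),
            ]),
        ]),
    ])
    print(effective_policies(tree, "rg-payments-prod"))
```

Assigning a policy once at the right level of the tree avoids repeating it in every subscription.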

Azure Resource Groups FAQs

| FAQ Question | FAQ Answer |
| --- | --- |
| What is a resource group in Azure? | A resource group in Azure is a logical container for resources that are deployed within an Azure subscription. It helps you organize and manage resources based on their lifecycle and their relationship to each other. |
| What is an example of a resource group in Azure? | An example of a resource group in Azure could be one that contains all the resources related to a specific web application, including web app services, databases, and storage accounts. |
| What are the different types of resource groups in Azure? | There aren’t specific “types” of resource groups in Azure. However, resource groups can be organized based on various factors, such as project, environment (e.g., dev, test, prod), geographic location, and department or team. |
| Why use resource groups in Azure? | Resource groups in Azure provide a way to organize and manage resources efficiently, simplify billing and cost tracking, enhance security and compliance, and streamline collaboration among teams. |
| What are the benefits of resource groups? | The benefits of resource groups include improved resource management, simplified billing and cost tracking, enhanced security and compliance, and streamlined collaboration among teams. |
| What is the role of a resource group? | The role of a resource group is to provide a logical container for resources in Azure, allowing you to organize and manage resources based on their lifecycle and their relationship to each other. |
| What are the 3 types of Azure roles? | The three most commonly cited built-in Azure roles are Owner, Contributor, and Reader. They represent different levels of access to Azure resources and resource groups. Azure also offers many other built-in roles and supports custom roles. |
| What are the four main resource groups? | The term “four main resource groups” is not specific to Azure. However, you can organize your resource groups based on various factors, such as project, environment, geographic location, and department or team. |
| What best describes a resource group? | A resource group is a logical container for resources deployed within an Azure subscription, allowing for the organization and management of resources based on their lifecycle and their relationship to each other. |
| What is an example of a resource group? | An example of a resource group could be one that contains all the resources related to a specific web application, including web app services, databases, and storage accounts. |
| What are the types of resource group? | There aren’t specific “types” of resource groups. However, resource groups can be organized based on various factors, such as project, environment (e.g., dev, test, prod), geographic location, and department or team. |
| What is the difference between group and resource group in Azure? | The term “group” in Azure typically refers to an Azure Active Directory (AAD, now Microsoft Entra ID) group, which is used for managing users’ access to resources. A resource group, on the other hand, is a logical container for resources deployed within an Azure subscription. |
| Where is Azure Resource Group? | Azure Resource Groups are part of the Azure Resource Manager (ARM) service, which is available within the Azure Portal and can also be accessed via Azure CLI, PowerShell, and REST APIs. |
| What is Azure Resource Group vs AWS? | Azure Resource Groups are a feature of Microsoft Azure, while AWS is Amazon’s cloud platform. AWS has a similar concept called AWS Resource Groups, which helps users organize and manage AWS resources. |
| What is the equivalent to an Azure Resource Group in AWS? | The closest equivalent in AWS is AWS Resource Groups. Unlike Azure, where every resource must belong to a resource group, AWS Resource Groups are optional collections of resources, typically defined by tags or CloudFormation stacks. |

Additional Azure Resource Group Best Practices

In addition to the best practices for organizing Azure Resource Groups previously mentioned, consider these additional tips to further improve your resource management:

Implement Consistent Naming Conventions

Adopting a consistent naming convention for your Azure Resource Groups and resources is crucial for improving the manageability and discoverability of your cloud infrastructure. A well-defined naming convention can help you quickly locate and identify resources based on their names. When creating your naming convention, consider factors such as resource type, environment, location, and department or team.

Regularly Review and Update Resource Groups

Regularly reviewing and updating your Azure Resource Groups is essential to maintaining an organized and efficient cloud infrastructure. As your organization’s needs evolve, you may need to reorganize resources, create new resource groups, or update access controls and policies. Schedule periodic reviews to ensure that your resource groups continue to meet your organization’s needs and adhere to best practices.

Document Your Resource Group Strategy

Documenting your resource group strategy, including your organization’s best practices, naming conventions, and policies, can help ensure consistency and clarity across your team. This documentation can serve as a reference for current and future team members, helping them better understand your organization’s approach to organizing and managing Azure resources.

Azure Resource Groups Conclusion

Effectively organizing Azure Resource Groups is crucial for efficiently managing your cloud infrastructure and optimizing your resources. By following the best practices outlined in this comprehensive guide, you can create a streamlined, secure, and compliant environment that supports your organization’s needs. Don’t underestimate the power of a well-organized Azure Resource Group structure – it’s the foundation for success in your cloud journey. By prioritizing the organization of your resource groups and implementing the strategies discussed here, you’ll be well-equipped to manage your cloud infrastructure and ensure that your resources are used to their fullest potential.

by Mark | Apr 3, 2023 | Azure, Azure Blobs, Azure Disks, Azure FIles, Cloud Storage, Cloud Storage Manager, Storage Accounts

AZCopy Introduction

In today’s data-driven world, the ability to efficiently and effectively manage vast amounts of data is crucial. As businesses increasingly rely on cloud services to store and manage their data, tools that can streamline data transfer processes become indispensable. AZCopy is one such powerful tool that, when combined with Azure Storage, can greatly simplify data management tasks while maintaining optimal performance. This article aims to provide a comprehensive guide on using AZCopy with Azure Storage, enabling you to harness the full potential of these powerful technologies.

AZCopy is a command-line utility designed by Microsoft to provide a high-performance, multi-threaded solution for transferring data to and from Azure Storage services. It handles large-scale data transfers through parallelism and resumable file transfers. Current releases (v10) work with Azure Blob Storage and Azure Files, while Azure Table Storage is supported only by the older v7.3 release, so check which version you need before you start.

Data management in the cloud is vital for businesses, as it allows for efficient storage, retrieval, and analysis of information. This, in turn, enables organizations to make data-driven decisions, optimize their operations, and drive innovation. Azure Storage is a popular choice for cloud-based storage, offering a range of services, including Blob storage, File storage, Queue storage, and Table storage. These services cater to various data storage needs, such as unstructured data, file shares, messaging, and NoSQL databases. By using Azure Storage, businesses can benefit from its scalability, durability, security, and cost-effectiveness, which are essential features for modern data storage solutions.

This article serves as a guide to help you harness the power of AZCopy with Azure Storage by providing step-by-step instructions for setting up your environment, using AZCopy for various data transfer scenarios, and troubleshooting common issues that may arise. We will begin by exploring what AZCopy is and providing an overview of Azure Storage. Next, we will delve into setting up your environment, including creating an Azure Storage account, installing AZCopy on your preferred platform, and configuring AZCopy for authentication.

Once your environment is set up, we will discuss various use cases for AZCopy with Azure Storage, such as uploading data to Azure Storage, downloading data from Azure Storage, copying data between Azure Storage accounts, and synchronizing data between local storage and Azure Storage. Step-by-step guides will be provided for each of these scenarios, helping you effectively use AZCopy to manage your data. Additionally, we will offer tips for optimizing AZCopy’s performance, ensuring that you get the most out of this powerful utility.

Finally, we will address troubleshooting common issues that may arise while using AZCopy, such as handling failed transfers, resuming interrupted transfers, dealing with authentication errors, and addressing performance issues. This comprehensive guide will equip you with the knowledge and skills needed to efficiently manage your data using AZCopy and Azure Storage, allowing you to take full advantage of these powerful tools.

By following this guide, you will be able to manage your data in the cloud efficiently and effectively, leading to improved data-driven decision-making, optimized operations, and increased innovation within your organization.

What is AZCopy?

AZCopy is a command-line utility developed by Microsoft to facilitate fast and reliable data transfers to and from Azure Storage services. Designed with performance and versatility in mind, AZCopy simplifies the process of managing data within the Azure ecosystem, catering to the needs of developers, IT professionals, and organizations of various sizes.

Definition of AZCopy

AZCopy is a high-performance, multi-threaded data transfer tool that supports parallelism and resumable file transfers, making it well suited to large-scale data transfers. It allows users to transfer data between local storage and Azure Storage, as well as between different Azure Storage accounts. Current releases are built for Azure Blob Storage and Azure Files; Azure Table Storage is handled only by the older v7.3 release.

Key features

- High-performance: AZCopy is built for speed, utilizing multi-threading and parallelism to achieve high transfer rates. This enables users to transfer large amounts of data quickly and efficiently.

- Multi-threaded: By supporting multi-threading, AZCopy can simultaneously perform multiple file transfers, leading to reduced transfer times and increased efficiency.

- Resumable file transfers: In case of interruptions during a transfer, AZCopy is capable of resuming the process from where it left off. This feature minimizes the need to restart the entire transfer process, saving time and reducing the likelihood of data corruption.

- Supports multiple storage services: Current AZCopy releases (v10) work with Azure Blob Storage and Azure Files, so a single utility covers both object and file-share data. Azure Table Storage is supported only by the older v7.3 release.
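To make these features concrete, here is a small Python sketch that assembles two common command lines: a recursive copy and a resume of an interrupted job. It only builds the argument lists and does not run anything. The storage account URL, local path, and job ID are hypothetical placeholders, and the subcommands shown (`copy`, `jobs resume`) and the `--recursive` flag are those of AZCopy v10.

```python
import shlex


def azcopy_copy(source: str, destination: str, recursive: bool = True) -> list[str]:
    """Build an `azcopy copy` command; the caller decides whether to run it."""
    command = ["azcopy", "copy", source, destination]
    if recursive:
        command.append("--recursive")
    return command


def azcopy_resume(job_id: str) -> list[str]:
    """Build an `azcopy jobs resume` command for an interrupted job."""
    return ["azcopy", "jobs", "resume", job_id]


if __name__ == "__main__":
    # Hypothetical local path, storage account, and job ID.
    upload = azcopy_copy("/data/reports", "https://examplestorage.blob.core.windows.net/backups")
    print(shlex.join(upload))
    print(shlex.join(azcopy_resume("00000000-0000-0000-0000-000000000000")))
```

Passing the resulting list to `subprocess.run` would execute the command, provided AZCopy is installed and authenticated on the machine.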

Supported platforms

AZCopy is available on several platforms, ensuring that users can easily access the utility on their preferred operating system:

- Windows: AZCopy can be installed on Windows operating systems, providing a familiar environment for users who prefer working with Windows.

- Linux: For users who work with Linux-based systems, AZCopy is available as a cross-platform utility, allowing for seamless integration with their existing workflows.

- macOS: macOS users can also take advantage of AZCopy, as it is available for installation on Apple’s operating system, ensuring compatibility with a wide range of devices and environments.

In the next section, we will explore Azure Storage, providing an overview of the various storage services it offers, as well as the benefits of using Azure Storage for your data management needs.

Azure Storage Overview

Azure Storage is a comprehensive cloud storage solution offered by Microsoft as part of its Azure suite of services. It provides scalable, durable, and secure storage options for various types of data, catering to the needs of businesses and organizations of all sizes. In this section, we will briefly describe Azure Storage and its core services, as well as the benefits of using Azure Storage for your data management needs.

Brief description of Azure Storage

Azure Storage is a highly available and massively scalable cloud storage solution designed to handle diverse data types and storage requirements. It offers a range of storage services, including Blob storage, File storage, Queue storage, and Table storage. These services are designed to address different data storage needs, such as unstructured data, file shares, messaging, and NoSQL databases, enabling organizations to store and manage their data effectively and securely.

Storage services

- Blob storage: Azure Blob storage is designed for storing large amounts of unstructured data, such as text, images, videos, and binary data. It is highly scalable and can handle millions of requests per second, making it ideal for storing and serving data for big data, analytics, and content delivery purposes.

- File storage: Azure File storage is a managed file share service that uses the SMB protocol, allowing for seamless integration with existing file share infrastructure. It is ideal for migrating on-premises file shares to the cloud, providing shared access to files, and enabling lift-and-shift scenarios for applications that rely on file shares.

- Queue storage: Azure Queue storage is a messaging service that enables communication between components of a distributed application. It facilitates asynchronous message passing, decoupling the components, and allowing for better scalability and fault tolerance.

- Table storage: Azure Table storage is a NoSQL database service designed for storing structured, non-relational data. It is highly scalable and provides low-latency access to data, making it suitable for storing large volumes of data that do not require complex queries or relationships.

Benefits of using Azure Storage

- Scalability: Azure Storage is designed to scale on-demand, allowing you to store and manage data without worrying about capacity limitations. This ensures that your storage infrastructure can grow alongside your business, meeting your changing needs over time.

- Durability: Azure Storage offers built-in data replication and redundancy, ensuring that your data is protected and available even in the event of hardware failures or other issues. This provides peace of mind and ensures the continuity of your operations.

- Security: Azure Storage includes various security features, such as data encryption at rest and in transit, role-based access control, and integration with Azure Active Directory. These features help you protect your data and comply with industry regulations and standards.

- Cost-effectiveness: Azure Storage offers flexible pricing options, allowing you to choose the storage solution that best fits your budget and requirements. By leveraging Azure’s pay-as-you-go model, you can optimize your storage costs based on your actual usage, rather than over-provisioning to account for potential growth.

In the following sections, we will guide you through setting up your environment to work with AZCopy and Azure Storage, as well as provide step-by-step instructions for using AZCopy for various data transfer scenarios.

Setting Up Your Environment

Before you can start using AZCopy with Azure Storage, you will need to set up your environment by creating an Azure Storage account, installing AZCopy on your preferred platform, and configuring AZCopy for authentication. This section will walk you through these steps to ensure your environment is ready for data transfers.

Creating an Azure Storage account

- Sign in to the Azure portal (https://portal.azure.com/) with your Microsoft account. If you do not have an account, you can sign up for a free trial.

- Click on the “Create a resource” button in the left-hand menu.

- In the search bar, type “Storage account” and select it from the list of results.

- Click the “Create” button to start the process of creating a new storage account.

- Fill in the required information, such as subscription, resource group, storage account name, location, and performance tier. Make sure to choose the appropriate redundancy and access tier options based on your requirements.

- Click “Review + create” to review your settings, then click “Create” to create your Azure Storage account. The deployment process may take a few minutes.

For further guidance on setting up an Azure Storage account, see Microsoft’s Azure Storage documentation.
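The storage account name entered in the form must be 3 to 24 characters long and may contain only lowercase letters and digits. As a minimal sketch, a candidate name could be checked before opening the portal. The helper name below is hypothetical, and it checks only the format, not whether the name is still available globally.

```python
import re

# Format rule for storage account names:
# 3-24 characters, lowercase letters and digits only.
_NAME_RE = re.compile(r"[a-z0-9]{3,24}")


def is_valid_storage_account_name(name: str) -> bool:
    """Return True if `name` satisfies the naming format (hypothetical helper)."""
    return _NAME_RE.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ("mystorage01", "My-Storage", "ab"):
        print(candidate, is_valid_storage_account_name(candidate))
```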

Installing AZCopy

AZCopy can be installed on Windows, Linux, and macOS platforms. Follow the instructions for your preferred platform:

- Windows:

a. Download the latest version of AZCopy for Windows from the official Microsoft website (https://aka.ms/downloadazcopy-v10-windows).

b. Extract the contents of the downloaded ZIP file to a directory of your choice.

c. Add the directory containing the extracted AZCopy executable to your system’s PATH environment variable.

- Linux:

a. Download the latest version of AZCopy for Linux from the official Microsoft website (https://aka.ms/downloadazcopy-v10-linux).

b. Extract the contents of the downloaded .tar.gz archive to a directory of your choice.

c. Add the directory containing the extracted AZCopy executable to your system’s PATH environment variable.

- macOS:

a. Download the latest version of AZCopy for macOS from the official Microsoft website (https://aka.ms/downloadazcopy-v10-mac).

b. Extract the contents of the downloaded ZIP file to a directory of your choice.

c. Add the directory containing the extracted AZCopy executable to your system’s PATH environment variable.
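After updating PATH, it can be worth confirming that the executable is actually discoverable. The following is a small sketch using Python’s standard library; the function name is hypothetical, and it only checks that a file named azcopy can be found on PATH, not that it is a working v10 build.

```python
import shutil
from typing import Optional


def find_executable(name: str = "azcopy") -> Optional[str]:
    """Return the full path of `name` if it is on PATH, otherwise None."""
    return shutil.which(name)


if __name__ == "__main__":
    path = find_executable()
    print(path if path else "azcopy was not found on PATH")
```

If the lookup fails, open a new terminal window first, since PATH changes usually do not affect terminals that were already open.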

Configuring AZCopy

Obtaining credentials:

AZCopy v10 does not accept a storage account key on the command line. To authorize requests to your Azure Storage account, you can either sign in with Microsoft Entra ID (formerly Azure Active Directory) or append a Shared Access Signature (SAS) token to each storage URL. You can generate a SAS token from the Azure portal:

a. Navigate to your Azure Storage account in the Azure portal.

b. In the left-hand menu, click “Shared access signature”, choose the allowed services, permissions, and expiry time, and generate the token. The token is signed with one of the account keys listed under “Access keys”.

c. Copy the generated SAS token for use with AZCopy.

Setting up authentication:

- Using Microsoft Entra ID: run the command below and complete the sign-in prompt. Your identity needs a data role on the storage account, such as Storage Blob Data Contributor.

azcopy login

- Using a SAS token: there is no login step. Append the token to the URL of each storage resource in your command, replacing “ACCOUNT_NAME”, “CONTAINER_NAME”, and “SAS_TOKEN” with your own values:

azcopy copy "SOURCE_PATH" "https://ACCOUNT_NAME.blob.core.windows.net/CONTAINER_NAME?SAS_TOKEN"
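With AZCopy v10, a SAS token is normally passed as the query string of the storage URL rather than through a login command. The sketch below shows one way to join the two safely; the function name and the example account and container names are hypothetical.

```python
def with_sas(resource_url: str, sas_token: str) -> str:
    """Append a SAS token to a storage URL as its query string.

    The portal may show the token with or without a leading "?",
    so any leading "?" is stripped before joining.
    """
    token = sas_token.lstrip("?")
    separator = "&" if "?" in resource_url else "?"
    return f"{resource_url}{separator}{token}"


if __name__ == "__main__":
    # Placeholder values; a real token comes from the Azure portal.
    print(with_sas("https://ACCOUNT_NAME.blob.core.windows.net/CONTAINER_NAME", "?SAS_TOKEN"))
```

Because the token grants access to anyone who holds it, avoid pasting the resulting URL into logs or shared scripts.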

With your environment set up, you can now proceed to use AZCopy with Azure Storage for various data transfer scenarios, as described in the next sections.

Using AZCopy with Azure Storage

Now that your environment is set up, you can start using AZCopy to manage your data in Azure Storage. In this section, we will discuss common use cases for AZCopy with Azure Storage and provide step-by-step guides for each scenario.

Step-by-step guides

Uploading files to Blob storage:

a. Open a command prompt or terminal window. b. Use the following command, replacing “SOURCE_PATH” with the path to the local file or directory you want to upload, and “DESTINATION_URL” with the URL of the target Blob container in your Azure Storage account:

azcopy copy "SOURCE_PATH" "DESTINATION_URL" --recursive

Note: Use the --recursive flag to upload all files and subdirectories within a directory. Remove the flag if you are uploading a single file.

Downloading files from Blob storage:

a. Open a command prompt or terminal window. b. Use the following command, replacing “SOURCE_URL” with the URL of the Blob container or Blob you want to download, and “DESTINATION_PATH” with the path to the local directory where you want to save the downloaded files:

azcopy copy "SOURCE_URL" "DESTINATION_PATH" --recursive

Note: Use the --recursive flag to download all files and subdirectories within a Blob container. Remove the flag if you are downloading a single Blob.

Copying files between Azure Storage accounts:

a. Open a command prompt or terminal window. b. Use the following command, replacing “SOURCE_URL” with the URL of the source Blob container or Blob, and “DESTINATION_URL” with the URL of the target Blob container in the destination Azure Storage account:

azcopy copy "SOURCE_URL" "DESTINATION_URL" --recursive

Note: Use the --recursive flag to copy all files and subdirectories within a Blob container. Remove the flag if you are copying a single Blob.
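The upload, download, and account-to-account scenarios above all use the same azcopy copy command with a different source and destination. When scripting these transfers, one option is to build the command as an argument list, which avoids quoting problems with the “?” and “&” characters in SAS URLs. This is a sketch only: the helper name is hypothetical, the paths and URL are placeholders, and nothing is executed.

```python
import shlex
from typing import List


def build_copy_command(source: str, destination: str, recursive: bool = False) -> List[str]:
    """Build an `azcopy copy` argument list (hypothetical helper)."""
    args = ["azcopy", "copy", source, destination]
    if recursive:
        # Needed for directories and containers, not for a single file or blob.
        args.append("--recursive")
    return args


if __name__ == "__main__":
    cmd = build_copy_command(
        "/data/photos",
        "https://ACCOUNT_NAME.blob.core.windows.net/CONTAINER_NAME?SAS_TOKEN",
        recursive=True,
    )
    # Print a shell-quoted form for review. To run it, pass the list
    # itself to subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```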

Synchronizing local files with Azure Storage:

a. Open a command prompt or terminal window. b. Use the following command, replacing “SOURCE_PATH” with the path to the local directory you want to synchronize, and “DESTINATION_URL” with the URL of the target Blob container in your Azure Storage account:

azcopy sync "SOURCE_PATH" "DESTINATION_URL" --recursive

This command will synchronize the contents of the local directory with the Blob container, uploading new or updated files. By default, sync does not remove anything from the destination; to also delete Blob files that are no longer present in the local directory, add --delete-destination=true to the command.
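To make the sync behavior concrete, here is a simplified model of a one-way sync that compares file names and last-modified times. It is an illustration under stated assumptions, not AZCopy’s actual implementation: the function name is hypothetical, and the inputs are plain dictionaries mapping relative paths to timestamps.

```python
from typing import Dict, List, Tuple


def plan_sync(
    local: Dict[str, float],
    remote: Dict[str, float],
    delete_destination: bool = False,
) -> Tuple[List[str], List[str]]:
    """Return (to_upload, to_delete) for a one-way local -> remote sync.

    A file is uploaded if it is missing remotely or newer locally.
    Remote-only files are deleted only when delete_destination is True.
    """
    to_upload = sorted(
        path for path, mtime in local.items()
        if path not in remote or mtime > remote[path]
    )
    to_delete = (
        sorted(path for path in remote if path not in local)
        if delete_destination
        else []
    )
    return to_upload, to_delete


if __name__ == "__main__":
    local_files = {"a.txt": 10.0, "b.txt": 20.0}
    remote_blobs = {"a.txt": 10.0, "b.txt": 5.0, "old.txt": 1.0}
    print(plan_sync(local_files, remote_blobs))
    print(plan_sync(local_files, remote_blobs, delete_destination=True))
```

The second call shows why deletion is opt-in: a remote-only file is removed only when deletion is explicitly requested.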

Tips for optimizing AZCopy performance

Adjusting the number of concurrent operations:

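AZCopy’s performance is influenced by the number of concurrent requests it issues. You can set this number with the AZCOPY_CONCURRENCY_VALUE environment variable before running your command. Separately, the --cap-mbps flag limits the overall transfer rate, which is useful when AZCopy should not saturate a shared network link. Replace “X” with the desired cap in megabits per second: azcopy copy "SOURCE_PATH" "DESTINATION_URL" --recursive --cap-mbps X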
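In AZCopy v10, concurrency is controlled through the AZCOPY_CONCURRENCY_VALUE environment variable, while --cap-mbps limits bandwidth. The sketch below prepares both for a scripted run; the helper name is hypothetical and the command is only constructed, not executed.

```python
import os
from typing import Dict, List, Optional, Tuple


def tuned_invocation(
    source: str,
    destination: str,
    concurrency: Optional[int] = None,
    cap_mbps: Optional[int] = None,
) -> Tuple[List[str], Dict[str, str]]:
    """Return (args, env) for a tuned `azcopy copy` run (hypothetical helper)."""
    args = ["azcopy", "copy", source, destination, "--recursive"]
    if cap_mbps is not None:
        # Bandwidth cap in megabits per second.
        args.append(f"--cap-mbps={cap_mbps}")
    env = dict(os.environ)  # copy, so the current process is not modified
    if concurrency is not None:
        # Number of concurrent requests AZCopy may issue.
        env["AZCOPY_CONCURRENCY_VALUE"] = str(concurrency)
    return args, env


if __name__ == "__main__":
    args, env = tuned_invocation("SOURCE_PATH", "DESTINATION_URL", concurrency=16, cap_mbps=100)
    print(args)
    print(env["AZCOPY_CONCURRENCY_VALUE"])
    # To run: subprocess.run(args, env=env, check=True)
```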

Saving complex commands in a script:

Response files were a feature of the older, Windows-only AZCopy releases; AZCopy v10 does not read them. For complex AZCopy commands or scenarios where you need to specify multiple flags, store the full command in a shell script or batch file instead, so the same set of flags can be reviewed, versioned, and rerun.

Managing transfer logs:

AZCopy generates log files during transfers to help you monitor progress and troubleshoot issues. By default, log files are created in the .azcopy directory under the user’s home directory. AZCopy v10 has no --log-location flag; to use a custom location, set the AZCOPY_LOG_LOCATION environment variable before running your command. For example, in a Linux or macOS shell: export AZCOPY_LOG_LOCATION="CUSTOM_LOG_PATH"

Replace “CUSTOM_LOG_PATH” with the desired path for the log files.

Handling large files:

For large files, AZCopy can be configured to use the --block-size-mb flag to adjust the block size used during transfers. Larger block sizes can improve performance but may consume more memory. Replace “Y” with the desired block size in megabytes: azcopy copy "SOURCE_PATH" "DESTINATION_URL" --recursive --block-size-mb Y
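Block size matters for very large files because a block blob can hold at most 50,000 blocks, so the block size sets an upper bound on the blob size. AZCopy normally chooses a suitable size on its own; the arithmetic below is only a sketch for reasoning about the --block-size-mb value, with hypothetical function names and sizes treated as MiB.

```python
import math

MAX_BLOCKS_PER_BLOB = 50_000  # limit on blocks in a single block blob
MIB = 1024 * 1024


def block_count(file_size_bytes: int, block_size_mb: int) -> int:
    """Number of blocks needed to upload a file with the given block size."""
    return math.ceil(file_size_bytes / (block_size_mb * MIB))


def min_block_size_mb(file_size_bytes: int) -> int:
    """Smallest whole-MiB block size that keeps the file within the block limit."""
    return math.ceil(file_size_bytes / (MAX_BLOCKS_PER_BLOB * MIB))


if __name__ == "__main__":
    one_tib = 1024 ** 4
    print(block_count(one_tib, 8))     # blocks needed with 8 MiB blocks
    print(min_block_size_mb(one_tib))  # minimum block size for a 1 TiB file
```

For a 1 TiB file, 8 MiB blocks would require 131,072 blocks, which exceeds the limit, so a block size of at least 21 MiB is needed.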

Monitoring AZCopy transfers:

You can check the status of a transfer with the azcopy jobs show command followed by the job ID: azcopy jobs show "JOB_ID"

Replace “JOB_ID” with the job ID displayed in the command prompt or terminal window during the transfer.

In conclusion, AZCopy is a powerful and versatile utility for managing data transfers to and from Azure Storage. By familiarizing yourself with its features and following the step-by-step guides provided in this article, you can efficiently manage your data in Azure Storage and optimize your cloud storage workflows.

Advanced AZCopy Features and Use Cases

In addition to the basic data transfer scenarios covered in the previous sections, AZCopy offers advanced features that can help you further optimize your data management tasks with Azure Storage. In this section, we will discuss these advanced features and provide examples of use cases where they can be particularly beneficial.

Advanced Features

Incremental Copy:

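AZCopy supports incremental transfers, which move only the files that are new or modified since the last transfer. This can help save time and bandwidth by avoiding the transfer of unchanged files. There is no dedicated --incremental flag in AZCopy v10. Instead, use azcopy sync, which compares file names and last-modified times, or add --overwrite=ifSourceNewer to azcopy copy so that an existing destination file is replaced only when the source is newer:

azcopy copy "SOURCE_PATH" "DESTINATION_URL" --recursive --overwrite=ifSourceNewer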

Filtering Files:

You can filter files during a transfer based on file name patterns or last modified time. Use the --include-pattern, --exclude-pattern, --include-after, or --include-before flags to apply filters:

azcopy copy "SOURCE_PATH" "DESTINATION_URL" --recursive --include-pattern "*.jpg" --include-after "2023-01-01T00:00:00Z"

This command will transfer only files with a “.jpg” extension that were modified on or after January 1, 2023.
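Before running a filtered transfer, it can help to preview which files a name pattern and a date cutoff would select. The sketch below approximates the combination of --include-pattern and --include-after locally; it is not AZCopy’s own matching logic, and the function name and sample data are hypothetical.

```python
from datetime import datetime, timezone
from fnmatch import fnmatchcase
from typing import Iterable, List, Tuple


def preview_filter(
    files: Iterable[Tuple[str, datetime]],
    include_pattern: str,
    include_after: datetime,
) -> List[str]:
    """Return names matching the wildcard pattern and modified on or after the cutoff."""
    return [
        name
        for name, modified in files
        if fnmatchcase(name, include_pattern) and modified >= include_after
    ]


if __name__ == "__main__":
    utc = timezone.utc
    sample = [
        ("a.jpg", datetime(2023, 2, 1, tzinfo=utc)),
        ("b.jpg", datetime(2022, 12, 31, tzinfo=utc)),
        ("c.png", datetime(2023, 3, 1, tzinfo=utc)),
    ]
    cutoff = datetime(2023, 1, 1, tzinfo=utc)
    print(preview_filter(sample, "*.jpg", cutoff))
```

Only a.jpg passes both conditions here: b.jpg is too old and c.png does not match the pattern.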

Preserving Access Control Lists (ACLs):

When transferring files to or between Azure file shares, you can preserve SMB Access Control Lists (ACLs) by using the --preserve-smb-permissions flag. The related --preserve-smb-info flag preserves SMB properties such as creation time, last write time, and file attributes. Both flags apply to Azure File storage rather than Azure Blob storage:

azcopy copy "SOURCE_URL" "DESTINATION_URL" --recursive --preserve-smb-permissions

Advanced Use Cases

- Backup and Disaster Recovery: AZCopy can be used to create backups of your local data in Azure Storage or to replicate data between Azure Storage accounts for disaster recovery purposes. By leveraging AZCopy’s advanced features, such as incremental copy and file filtering, you can optimize your backup and recovery processes to save time and storage costs.

- Data Migration: AZCopy is a valuable tool for migrating data to or from Azure Storage, whether you are moving data between on-premises and Azure, or between different Azure Storage accounts or regions. AZCopy’s high-performance capabilities and support for resumable transfers help ensure a smooth and efficient migration process.

- Data Archiving: If you need to archive data for long-term retention, AZCopy can help transfer your data to Azure Blob storage, where you can take advantage of Azure’s cost-effective archiving and tiering options, such as Cool and Archive storage tiers.