by Mark | Jan 18, 2023 | Azure Blobs, Blob Storage, Cloud Computing, How To, Storage Accounts

Azure Storage Authentication Options

Azure Storage Authentication is the process of verifying the identity of a client that is requesting access to an Azure storage account. Azure Storage supports several authentication options that can be used to secure access to storage accounts, such as:

- Shared Key Authentication: This method of authentication uses a shared key that is known to both the client and the storage account to sign request headers.

- Shared Access Signature (SAS) Authentication: This method of authentication uses a shared access signature (SAS) token to provide restricted, time-limited access to a storage account. A SAS token can be generated for a specific resource or set of resources within a storage account and can be used to grant read, write, or delete access to that resource until the token expires.

- Azure Active Directory (AAD) Authentication: This method of authentication allows you to secure access to a storage account using Azure AD (since renamed Microsoft Entra ID). You assign Azure roles on the storage account to specific users, groups, or applications, and requests from those identities are authorized with tokens issued by Azure AD.

- OAuth Authentication: This method of authentication allows you to authenticate with Azure Storage using an OAuth 2.0 bearer token. In practice the token is issued by Azure AD, so this is the mechanism behind Azure AD authentication rather than a separate identity system. The token is passed in the Authorization header and is verified by Azure Storage.

- Token-based Authentication: This is an umbrella term for methods in which the client presents a token instead of signing requests with the account key. It covers SAS tokens and OAuth 2.0 bearer tokens, which Azure AD issues as JSON Web Tokens (JWT).

Choosing the best authentication option depends on your requirements, such as security, ease of use, and ease of integration with existing systems or platforms. For example, for testing or development purposes, Shared Key authentication can be sufficient and easier to implement, but for production environments that require a high level of security or integration with enterprise identity systems, you may prefer Azure AD with OAuth tokens, because access can then be granted and revoked per identity instead of through a key that unlocks the whole account.
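To make the difference between the header-based options concrete, here is a minimal sketch of how the `Authorization` header value is formed for Shared Key and for an OAuth bearer token. It assumes the string-to-sign has already been built according to the Azure Storage REST rules (that canonicalization step is not shown), and the account name, key, and token are placeholders, not real values.

```python
import base64
import hashlib
import hmac


def shared_key_header(account: str, account_key_b64: str, string_to_sign: str) -> str:
    """Return a Shared Key Authorization header value.

    The account key is distributed as base64 text, so it is decoded first.
    The signature is an HMAC-SHA256 over the UTF-8 string-to-sign, base64-encoded.
    """
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"SharedKey {account}:{signature}"


def bearer_header(access_token: str) -> str:
    """Return an OAuth 2.0 bearer Authorization header value."""
    return f"Bearer {access_token}"


if __name__ == "__main__":
    # Placeholder key and a simplified string-to-sign, for illustration only.
    demo_key = base64.b64encode(b"not-a-real-key").decode("ascii")
    print(shared_key_header("mystorageacct", demo_key, "GET\n/mystorageacct/mycontainer"))
    print(bearer_header("placeholder-token"))
```

In real applications the Azure SDKs build and sign these headers for you; the sketch only shows why Shared Key requires the client to hold the account key, while a bearer token does not.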

How to connect your Azure Storage Accounts to your On-Premises Active Directory

Connecting a storage account to an on-premises Active Directory (AD) allows you to secure access to the storage account using on-premises AD authentication. This can be useful in scenarios where you want to provide access to the storage account to a specific group of users or applications that are already authenticated with your on-premises AD.

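Here’s an overview of the process for connecting a storage account to an on-premises AD. The exact options depend on the storage service: at the time of writing, direct on-premises AD DS authentication is offered for Azure Files shares, while access to blobs goes through Azure AD roles assigned to identities synchronized from your on-premises AD, so check the current Azure documentation for your scenario.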

- Create a Domain Name System (DNS) alias: To connect to the on-premises AD, you will need to create a DNS alias that points to the on-premises AD. This can be done by creating a CNAME record in your DNS server.

- Configure the storage account to use AD authentication: In the Azure portal, go to the storage account settings and enable AD authentication for the storage account. You will need to provide the DNS alias that you created earlier and specify the domain name of your on-premises AD.

- Create a group in the on-premises AD: To grant access to the storage account, you will need to create a group in your on-premises AD. This group will be used to manage access to the storage account.

- Assign the Storage Blob Data Contributor role to the group: To grant access to the storage account, assign the Storage Blob Data Contributor role to the group. The group must be visible in Azure AD (for example, synchronized from your on-premises AD) for the role assignment to work. This role allows the members of the group to read, write, and delete blobs in the storage account.

- Add users or computers to the group: To grant access to the storage account, add the relevant users or computers to the group you created earlier.

It’s worth mentioning that this process requires you to have your own domain controller and DNS server, and that your on-premises network should be connected to Azure through a VPN or ExpressRoute.

Also, the on-premises AD identities need to be synchronized to Azure AD, typically with Azure AD Connect. AD FS or a third-party federation solution can be used to establish the trust relationship between on-premises AD and Azure AD, but it is optional rather than required.

by Mark | Jan 15, 2023 | Azure, Cloud Computing, Security, Storage Accounts

Azure SFTP Service with Azure Storage overview

Azure SFTP (Secure File Transfer Protocol) is a service provided by Microsoft Azure that enables you to transfer files securely to and from Azure storage. The service is built on the SFTP protocol, which runs over SSH and encrypts data in transit; data at rest is encrypted separately by Azure Storage. Azure SFTP allows you to easily automate the transfer of large amounts of data, such as backups and log files, to and from your Azure storage account. Additionally, it lets you set permissions and access control to limit access to specific users.

Azure SFTP Service limitations and guidance

To use Azure SFTP, you will first need to create an Azure storage account with hierarchical namespace enabled. Once you have a storage account set up, you can turn on SFTP by going to the Azure portal and selecting the storage account you want to use. In the settings of the storage account, there is an option to enable SFTP and add local users.

Once SFTP is enabled, you connect to the storage account's endpoint on the standard SFTP port, 22. To connect, you will need to use an SFTP client, such as WinSCP or FileZilla. You will also need to provide the credentials of a local user, which consist of a username and either a password or an SSH private key.

Once you are connected to the SFTP server, you will be able to transfer files to and from your Azure storage account. Files are stored in a container within your storage account; each local user is configured with a home directory and permissions on specific containers. You can also create new folders within the container to organize your files.

One of the benefits of using Azure SFTP is that it allows you to easily automate the transfer of files. You can use a tool like Azure Data Factory to schedule file transfers on a regular basis. Additionally, you can use Azure Automation to automate the creation of SFTP servers, which can save time and reduce the chances of human error.
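As one possible way to script such transfers without Azure Data Factory, the sketch below generates a batch file for the OpenSSH `sftp` client, which can then be run on a schedule (for example from cron). This is a swapped-in technique rather than anything specific to Azure; the function name and the paths are hypothetical, and it assumes POSIX-style local paths.

```python
from pathlib import PurePosixPath


def build_sftp_batch(local_files, remote_dir):
    """Return the text of an sftp batch file that uploads each local file.

    Paths are wrapped in double quotes so that spaces survive; paths that
    themselves contain a double quote are rejected to keep the sketch simple.
    """
    lines = []
    for local in local_files:
        remote = str(PurePosixPath(remote_dir) / PurePosixPath(local).name)
        if '"' in local or '"' in remote:
            raise ValueError(f"unsupported character in path: {local!r}")
        lines.append(f'put "{local}" "{remote}"')
    lines.append("bye")  # close the session after the last upload
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_sftp_batch(["/var/backups/db.bak", "/var/log/app.log"], "/backups"))
```

The resulting file can be passed to the client with `sftp -b batch.txt user@host`. Batch mode cannot prompt for a password, so it assumes SSH key authentication is configured for the SFTP user.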

Another benefit of using Azure SFTP is that it allows you to access your files securely from anywhere. The SFTP connection uses industry standard encryption to protect your data in transit, and Azure Storage encrypts the data at rest. Additionally, you can restrict each SFTP user to specific containers and operations, and use Azure Role-Based Access Control (RBAC) to limit who can manage the storage account and its SFTP settings.

There are some limitations to Azure SFTP that you should be aware of before using it, and because the service is still evolving, it is worth checking the current Azure documentation for the latest list. One limitation is that the SFTP server only supports a single concurrent connection per user. This means that if multiple people need to access the SFTP server at the same time, they will need to use different credentials. Additionally, Azure SFTP currently does not support SFTP version 6 or later, and support is not expected in the near future.

Another limitation of Azure SFTP is that it does not currently support customization of SFTP server settings, such as the ability to change the default port or configure SSH options. Additionally, it does not support integration with other Azure services, such as Azure Monitor or Azure Security Center, for monitoring or logging of SFTP activity.

In conclusion, Azure SFTP is a powerful service that allows you to securely transfer files to and from Azure storage. It is easy to use, and can be automated to save time and reduce the chances of human error. It allows you to access your files securely from anywhere, and it uses industry standard encryption to protect your data in transit and at rest. However, it does have some limitations, such as not supporting multiple concurrent connections per user and not supporting customization of SFTP server settings.

How do you connect to Azure SFTP Service?

To connect to Azure SFTP Service, you will need to perform the following steps:

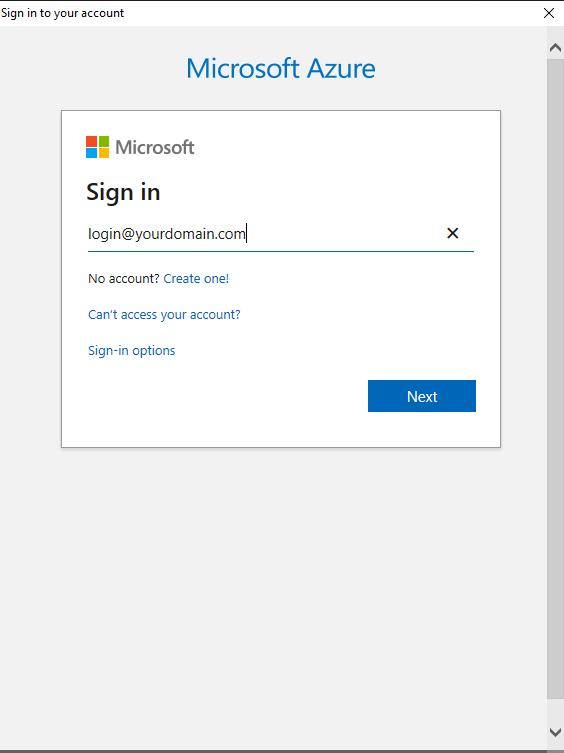

- Create an Azure storage account: You will need a storage account to create an SFTP server. You can create a storage account in the Azure portal or using Azure CLI or Azure PowerShell.

- Enable SFTP and create a local user: Go to the Azure portal, select your storage account, and enable SFTP support (the account needs hierarchical namespace enabled). Then add a local user with a password or SSH key and permissions on a container. You connect to the storage account's endpoint on port 22.

- Install an SFTP client: To connect to the SFTP server, you will need to use an SFTP client such as WinSCP, FileZilla, or Cyberduck.

- Connect to the SFTP server: Use the hostname and port from the earlier step, along with the local user's credentials (username and password or SSH private key), to connect to the SFTP server via the SFTP client.

- Transfer files: Once you are connected to the SFTP server, you can transfer files to and from your Azure storage account. Files are stored in the container configured as the user's home directory.

It is also worth mentioning that once you connect to the SFTP server you will have access to the usual capabilities of the SFTP protocol, including creating, deleting, editing, copying, and moving files, as well as managing the folder structure.
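The connection details from the steps above can be assembled in a few lines. This sketch assumes the naming convention commonly documented for SFTP on Azure Blob Storage, where the host is the account's blob endpoint and the username is the account name joined to the local user name with a dot; verify both against the current Azure documentation. The account and user names are placeholders, and the helper functions are hypothetical.

```python
def sftp_endpoint(account, local_user, container=None):
    """Return (host, username) for an SFTP-enabled storage account.

    Assumed format: <account>.blob.core.windows.net as host, and
    <account>.<local_user> as username. If the local user has no home
    directory configured, the container name goes in the middle.
    """
    host = f"{account}.blob.core.windows.net"
    if container:
        username = f"{account}.{container}.{local_user}"
    else:
        username = f"{account}.{local_user}"
    return host, username


def sftp_command(account, local_user, port=22):
    """Return the OpenSSH sftp command line as an argument list."""
    host, username = sftp_endpoint(account, local_user)
    return ["sftp", "-P", str(port), f"{username}@{host}"]


if __name__ == "__main__":
    print(" ".join(sftp_command("mystorageacct", "backupuser")))
```

The same host and username values can be entered in a graphical client such as WinSCP or FileZilla instead of being passed to the command-line client.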

by Mark | Sep 28, 2021 | Cloud Computing, Guest Post

Achieving PCI DSS Compliance for Your Cloud Operations

If you store, process, or transmit cardholder data or sensitive information, you must comply with the Payment Card Industry Data Security Standard (PCI DSS) set by major credit card companies. Its security controls (version 3.2.1, current at the time of writing, was released in 2018) are designed to help prevent, detect, and respond to security issues affecting payment card data. Failure to comply can lead to heavy fines, financial losses, damaged reputation, and lawsuits. But how do you ensure compliance for your cloud operations?

PCI DSS organizes 12 requirements under six goals. Of these, seven requirements and four goals are most relevant to cloud PCI DSS compliance, and they are the focus of this article.

What is PCI DSS Compliance?

PCI DSS is a set of security standards developed by the payment card industry to ensure that all companies that accept, process, store, or transmit credit card information maintain a secure environment. The standards are designed to protect cardholder data from theft and fraud and apply to all organizations that accept payment cards, regardless of size or volume.

Why is PCI DSS Compliance Important?

PCI DSS compliance is critical for any organization that handles credit card information because it helps to prevent data breaches and protects against financial losses, legal liabilities, and damage to the company’s reputation. Additionally, failure to comply with PCI DSS standards can result in costly fines and penalties.

The Challenges of Achieving PCI DSS Compliance in the Cloud

Achieving PCI DSS compliance in the cloud can be challenging due to several factors, including the shared responsibility model between the cloud service provider and the customer, the complexity of the cloud environment, and the lack of visibility and control over the infrastructure.

Understanding Cloud Service Provider Responsibility

In a cloud computing environment, the cloud service provider is responsible for securing the underlying infrastructure, including the physical data centers, servers, and networking components. The customer is responsible for securing their applications, data, and operating systems running on top of the cloud infrastructure. To achieve PCI DSS compliance in the cloud, it is important to understand the respective responsibilities of the cloud service provider and the customer and ensure that each party fulfills their obligations.

Best Practices for Achieving PCI DSS Compliance in the Cloud

To achieve PCI DSS compliance in the cloud, organizations should follow best practices that include:

Implementing a Risk Assessment Process

A risk assessment process should be implemented to identify, assess, and mitigate the risks associated with storing and processing cardholder data in the cloud.

Developing a Cloud Security Policy

A comprehensive cloud security policy should be developed that outlines the roles and responsibilities of all parties involved in the cloud environment and defines the security controls required to achieve PCI DSS compliance.

Securing Cloud Infrastructure

The cloud infrastructure should be secured by implementing security controls such as firewalls, intrusion detection and prevention systems, and access controls.

Protecting Data in the Cloud

Sensitive cardholder data should be protected by implementing encryption, tokenization, and other security measures.
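As a rough illustration of two of these measures, the sketch below masks a card number (PAN) for display, keeping at most the first six and last four digits, and swaps a PAN for a random token. The `TokenVault` class is hypothetical and holds its mapping in memory only; a real tokenization service would keep that mapping in a hardened, encrypted, access-controlled store.

```python
import secrets


def mask_pan(pan: str) -> str:
    """Mask a PAN for display, showing only the first six and last four digits."""
    digits = "".join(ch for ch in pan if ch.isdigit())
    if not 13 <= len(digits) <= 19:
        raise ValueError("unexpected PAN length")
    return digits[:6] + "*" * (len(digits) - 10) + digits[-4:]


class TokenVault:
    """Toy in-memory token vault, for illustration only."""

    def __init__(self):
        self._pan_by_token = {}

    def tokenize(self, pan: str) -> str:
        # The token is random, so it reveals nothing about the PAN.
        token = "tok_" + secrets.token_hex(16)
        self._pan_by_token[token] = pan
        return token

    def detokenize(self, token: str) -> str:
        return self._pan_by_token[token]


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("4111111111111111")  # well-known test card number
    print(mask_pan("4111 1111 1111 1111"), token)
```

Downstream systems that only ever handle the token or the masked value never see the full card number, which can reduce how much of the environment has to be treated as handling cardholder data.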

Conducting Regular Audits and Assessments

Regular audits and assessments should be conducted to ensure that the cloud environment remains compliant with PCI DSS standards.

Ensuring Continuous Compliance in the Cloud

Achieving PCI DSS compliance is not a one-time event. It requires continuous monitoring and testing to ensure that the cloud environment remains secure and compliant. Organizations should implement a continuous compliance program that includes regular vulnerability scans, penetration testing, and security audits.

Educating Employees on Cloud Security Best Practices

One of the most significant vulnerabilities in any security system is the human factor. Employees must be trained on cloud security best practices to ensure that they understand their roles and responsibilities in maintaining a secure cloud environment. Training should cover topics such as password management, data protection, and incident response.

Goal: Build and Maintain a Secure Network

Malicious individuals can easily access and steal customer data from payment systems that don’t have secure networks. To achieve this goal, businesses must install and maintain firewall configurations that protect cardholder data, and they must ensure that those firewalls cover every network system that could give malicious players a route to that data.

Another requirement under this goal is that businesses should not use vendor-supplied passwords, usernames, and other security parameters as default. Instead, you should change vendor-supplied security parameters immediately after deployment.

Goal: Adopt Strong Access Restriction Measures

Limiting access to cardholder data is critical to protecting sensitive payment details. Access to such information should only be granted to authorized personnel on a need-to-know basis. All your employees with computer access should use separate, unique IDs, and they should be required to follow a secure password policy.

Goal: Regularly Monitor and Test Networks

Malicious hackers constantly test network systems for holes and vulnerabilities. As such, organizations should monitor and test their cloud networks regularly to identify and mitigate vulnerabilities before malicious actors exploit them. The PCI DSS requirement for this goal is to track and monitor all access to network resources and cardholder data. Without activity logs of a network system, identifying the cause of a data breach is very difficult. Network logging mechanisms are vital to effective management of vulnerabilities because they allow your IT teams to track and analyze incidents as they occur.
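A minimal sketch of what such an activity log entry might contain is shown below. The field set loosely follows what PCI DSS asks audit trails to record (who, what type of event, when, whether it succeeded, where it came from, and which resource was affected); the function names and sample values are hypothetical.

```python
import json
from datetime import datetime, timezone


def audit_record(user_id, event_type, success, origin, resource):
    """Build one audit log entry as a plain dictionary."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # UTC, ISO 8601
        "user_id": user_id,
        "event_type": event_type,
        "success": bool(success),
        "origin": origin,
        "resource": resource,
    }


def to_log_line(record):
    """Serialize a record as one JSON line, suitable for shipping to a log store."""
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    entry = audit_record("alice", "blob.read", True, "203.0.113.10", "payments/2021/09.csv")
    print(to_log_line(entry))
```

Writing entries as structured JSON lines rather than free text makes it easier to search and correlate them later, for example when investigating who accessed a given resource.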

It’s worth noting that while PCI DSS provides guidelines that should be adhered to, it’s your responsibility to ensure that your cloud service provider (CSP) complies with the standard. Therefore, before employing their services, obtain your CSP’s proof of compliance and certification. Ask them:

- What their cloud services entail and how the services are delivered.

- What their status is in terms of data security, PCI DSS compliance, and other important data security regulations.

- What your business will be responsible for.

- Whether they will provide ongoing evidence of compliance with all security controls.

- Whether other parties are involved in service delivery, support, or data security.

- Whether they can commit to everything in writing.

In conclusion, achieving PCI DSS compliance for your cloud operations is critical to protecting your business from heavy fines, financial losses, damaged reputation, and lawsuits. By following the above guidelines, you can ensure that your cloud operations comply with these regulations and prevent malicious actors from accessing and stealing your customer data.

Conclusion

Achieving PCI DSS compliance in the cloud requires a comprehensive approach that includes risk assessment, policy development, infrastructure security, data protection, regular audits and assessments, and employee education. By following best practices and working closely with their cloud service provider, organizations can maintain a secure and compliant cloud environment that protects against data breaches, financial losses, and legal liabilities.

PCI Compliance FAQs

What is PCI DSS Compliance?

PCI DSS is a set of security standards developed by the payment card industry to ensure that all companies that accept, process, store, or transmit credit card information maintain a secure environment. PCI DSS compliance means meeting the requirements of those standards.

Who is responsible for PCI DSS Compliance in the cloud?

In a cloud computing environment, the cloud service provider is responsible for securing the underlying infrastructure, including the physical data centers, servers, and networking components. The customer is responsible for securing their applications, data, and operating systems running on top of the cloud infrastructure.

What are the challenges of achieving PCI DSS Compliance in the cloud?

The challenges of achieving PCI DSS compliance in the cloud include the shared responsibility model between the cloud service provider and the customer, the complexity of the cloud environment, and the lack of visibility and control over the infrastructure.

What are some best practices for achieving PCI DSS Compliance in the cloud?

Best practices for achieving PCI DSS compliance in the cloud include implementing a risk assessment process, developing a cloud security policy, securing cloud infrastructure, protecting data in the cloud, conducting regular audits and assessments, and educating employees on cloud security best practices.

Why is PCI DSS Compliance important?

PCI DSS compliance is important because it helps to prevent data breaches and protects against financial losses, legal liabilities, and damage to the company’s reputation. Failure to comply with PCI DSS standards can result in costly fines and penalties.