How to find the largest Azure Blobs

If you are looking to optimize your storage costs on Azure Blob storage, this guide is for you. With the increasing amount of data being stored in the cloud, it’s important to keep track of the size of each Blob and identify which ones are taking up the most storage. This guide provides a step-by-step process for using Cloud Storage Manager to find the largest Azure Blobs in your environment.

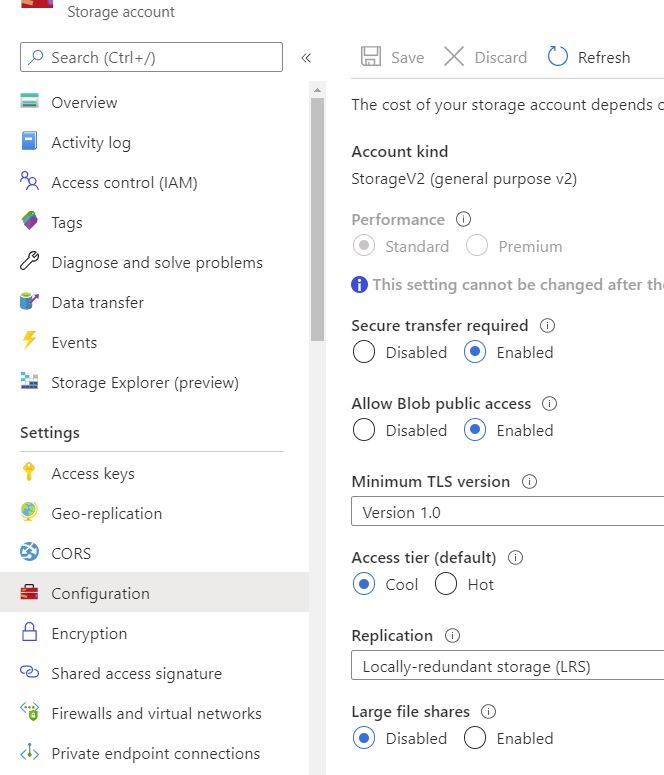

By identifying the largest Blobs, you can take steps to optimize your storage costs, such as deleting unnecessary data or moving data to a more cost-effective storage tier. Whether you’re new to Azure Blob storage or an experienced user, this guide is a helpful resource for optimizing your storage costs.
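To illustrate the tier-moving idea, the Python sketch below flags Hot-tier Blobs that are both large and not modified recently as candidates for a cooler tier. It is a minimal sketch under stated assumptions, not how Cloud Storage Manager works: the `tiering_candidates` function, its input tuple layout and both thresholds are hypothetical.

```python
from datetime import datetime, timedelta


def tiering_candidates(blobs, now, min_age_days=30, min_size_bytes=1024**3):
    """Return (name, size) pairs for Hot-tier blobs that look rarely changed.

    blobs: iterable of hypothetical (name, tier, size_bytes, last_modified) tuples.
    The default thresholds are illustrative only, not recommendations.
    """
    cutoff = now - timedelta(days=min_age_days)
    picks = [
        (name, size)
        for name, tier, size, modified in blobs
        if tier == "Hot" and size >= min_size_bytes and modified < cutoff
    ]
    # Largest first, so the biggest potential savings are reviewed first.
    return sorted(picks, key=lambda pick: pick[1], reverse=True)


if __name__ == "__main__":
    inventory = [
        ("old-backup.bak", "Hot", 5 * 1024**3, datetime(2024, 1, 1)),
        ("fresh-backup.bak", "Hot", 5 * 1024**3, datetime(2024, 5, 25)),
        ("archive.zip", "Cool", 9 * 1024**3, datetime(2024, 1, 1)),
    ]
    print(tiering_candidates(inventory, now=datetime(2024, 6, 1)))
```

Last-modified time only reflects writes, so a Blob that is read often but rarely changed may still belong in the Hot tier; review candidates before moving them.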

Recently, one of our clients told us that he had used Cloud Storage Manager to find the largest Blobs in his Azure Storage containers.

Here is a quick rundown of one of the many reports on Azure Blob consumption that you can run with Cloud Storage Manager.

Which are my largest Azure Blobs?

See all your Azure Blob Sizes

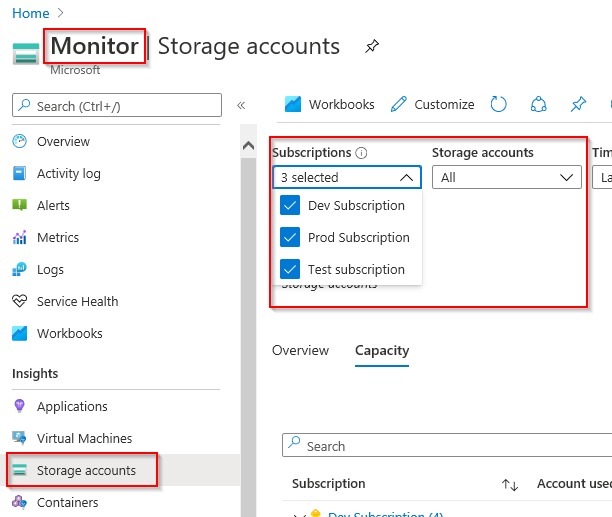

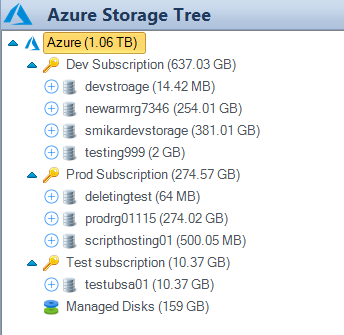

If you want to find out which Blobs in your environment are the largest, or are consuming the most storage, the simplest method is to use Cloud Storage Manager.

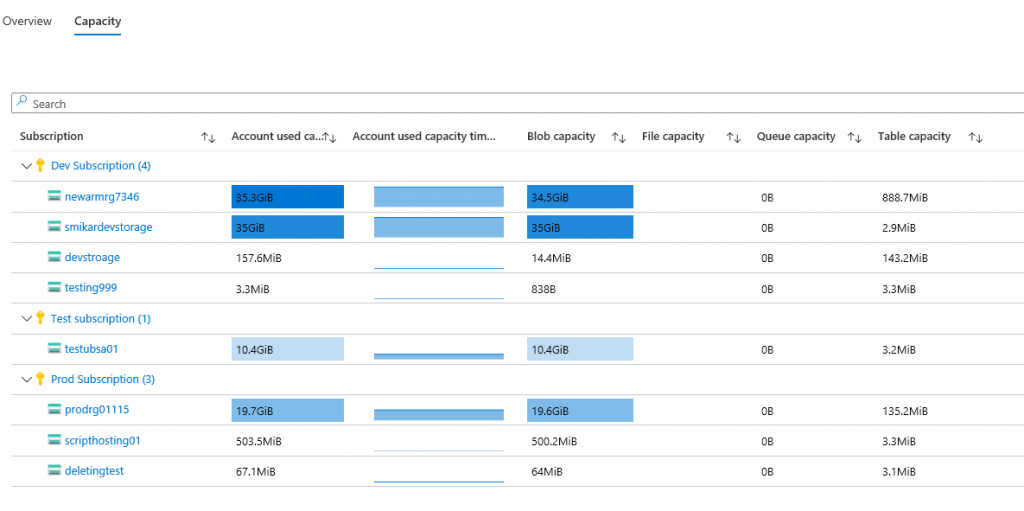

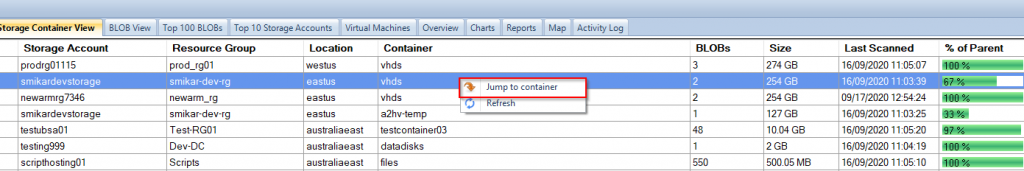

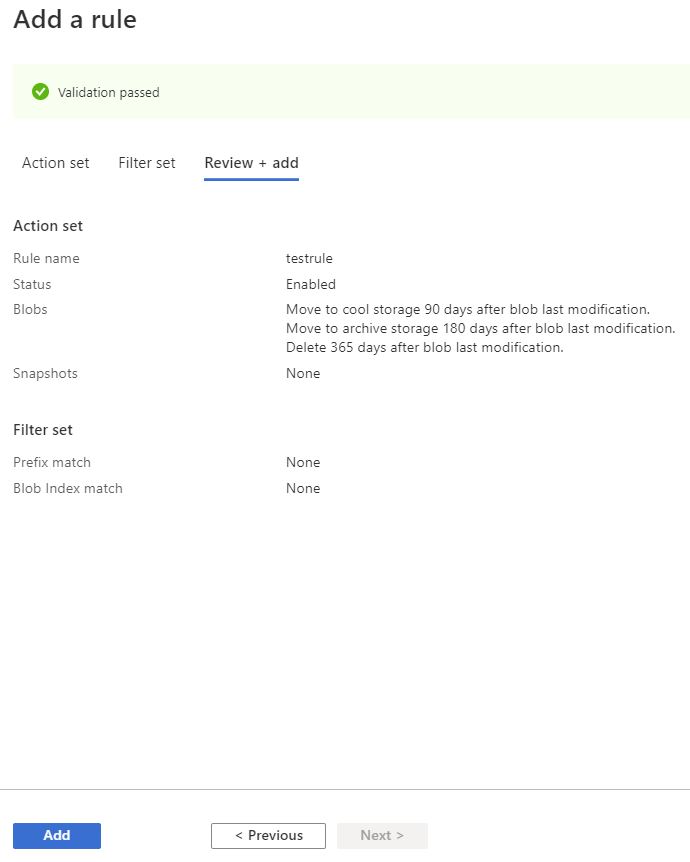

Once you’ve allowed Cloud Storage Manager to scan your environment, you have a few options to get this information.

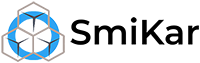

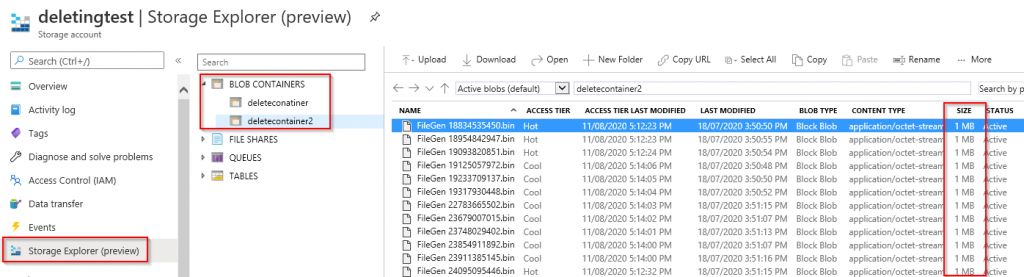

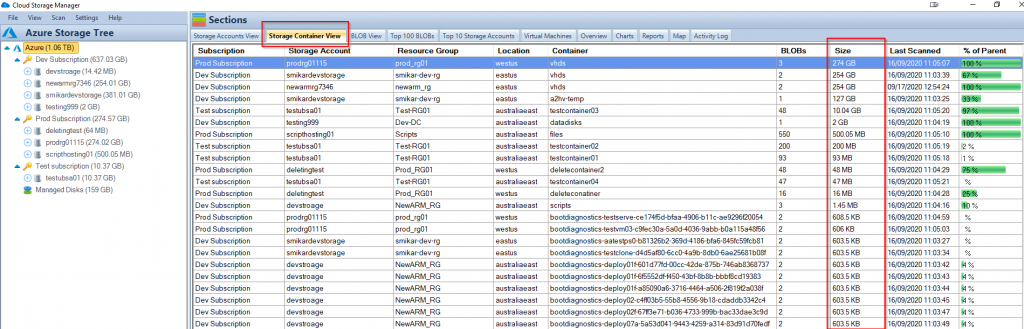

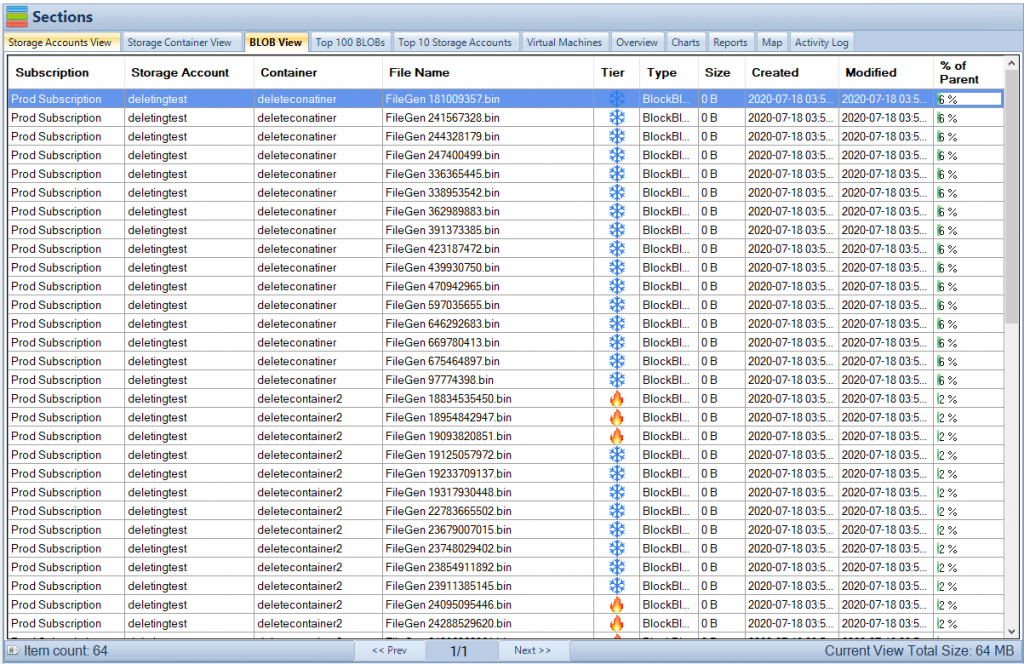

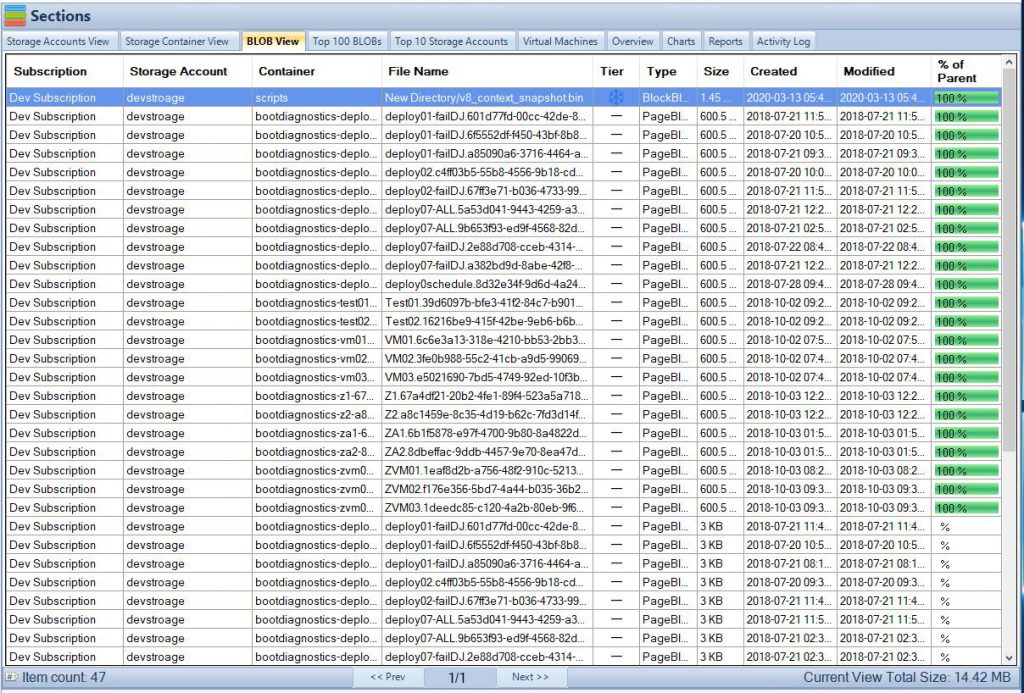

The Top 100 BLOBs tab lists the 100 largest Blobs in your environment. For each Blob it shows its name and the Subscription, Storage Account and Container it resides in. It also shows the Blob’s access tier (Hot, Cool or Archive), its size, when it was created and when it was last modified.
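Cloud Storage Manager builds this list for you, but the underlying ranking is simple to sketch. The Python snippet below is a minimal illustration, assuming you already have blob records from some inventory; `BlobRecord` and `largest_blobs` are hypothetical names, not part of Cloud Storage Manager. Such records could, for example, be filled from the Azure SDK for Python, whose `BlobProperties` objects (returned by `ContainerClient.list_blobs()`) expose `name`, `size`, `blob_tier`, `creation_time` and `last_modified`.

```python
import heapq
from dataclasses import dataclass
from datetime import datetime


@dataclass
class BlobRecord:
    """One hypothetical scan row, mirroring the Top 100 BLOBs columns."""
    subscription: str
    storage_account: str
    container: str
    name: str
    tier: str  # e.g. "Hot", "Cool" or "Archive"
    size_bytes: int
    created: datetime
    last_modified: datetime


def largest_blobs(blobs, n=100):
    """Return the n largest blobs, biggest first.

    heapq.nlargest keeps only n items in a heap while scanning,
    so it also works on a lazy iterator over a very large inventory.
    """
    return heapq.nlargest(n, blobs, key=lambda blob: blob.size_bytes)


if __name__ == "__main__":
    now = datetime(2024, 1, 1)
    sample = [
        BlobRecord("sub-a", "acct1", "logs", "app.log", "Hot", 5_000, now, now),
        BlobRecord("sub-a", "acct1", "backups", "db.bak", "Cool", 90_000, now, now),
        BlobRecord("sub-b", "acct2", "media", "video.mp4", "Archive", 40_000, now, now),
    ]
    for blob in largest_blobs(sample, n=2):
        print(blob.storage_account, blob.container, blob.name, blob.tier, blob.size_bytes)
```

A heap-based selection avoids sorting the whole inventory when only the top 100 entries are needed.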

Have a look at the screenshot to get a clearer picture.

Your largest Azure Blob Sizes

In the bottom-right corner you will also see how much storage your 100 largest Blobs consume in total. In our example, the 100 largest Blobs consume 102 GB. This is only our lab environment; in a production environment the figure could run to hundreds of terabytes or even petabytes.

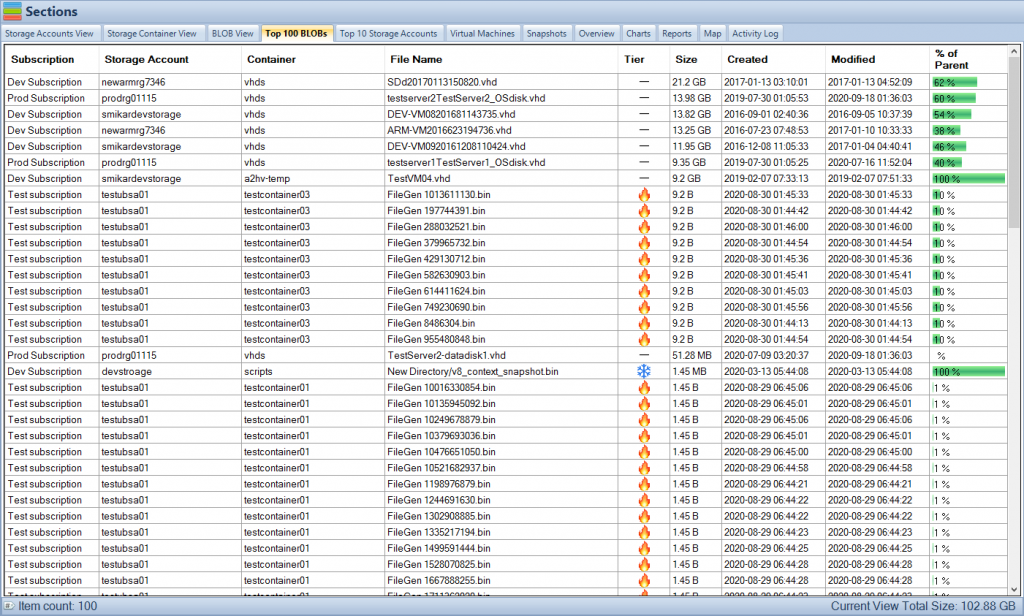
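The total shown in the corner is the sum of the listed sizes. The Python sketch below shows that arithmetic plus a unit conversion; `format_size` and `summarize_top_blobs` are hypothetical helpers, and they assume binary units (1 GiB = 1024³ bytes), which may not match the units Cloud Storage Manager displays.

```python
def format_size(num_bytes: int) -> str:
    """Format a byte count with binary units (1 GiB = 1024**3 bytes)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB"]
    size = float(num_bytes)
    for unit in units:
        # Stop at the first unit that keeps the number below 1024,
        # or at the largest unit available.
        if size < 1024 or unit == units[-1]:
            return f"{size:.1f} {unit}"
        size /= 1024


def summarize_top_blobs(sizes_in_bytes):
    """Return (total bytes, human-readable total) for a list of blob sizes."""
    total = sum(sizes_in_bytes)
    return total, format_size(total)


if __name__ == "__main__":
    print(summarize_top_blobs([102 * 10**9]))
```

The unit choice matters: a total of 102 GB in decimal units (102 × 10⁹ bytes) is about 95.0 GiB in binary units.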

You can also export this data to a spreadsheet: open the Reports tab and select the “List the top 100 BLOBs” report.

Azure Blob Storage Size Report

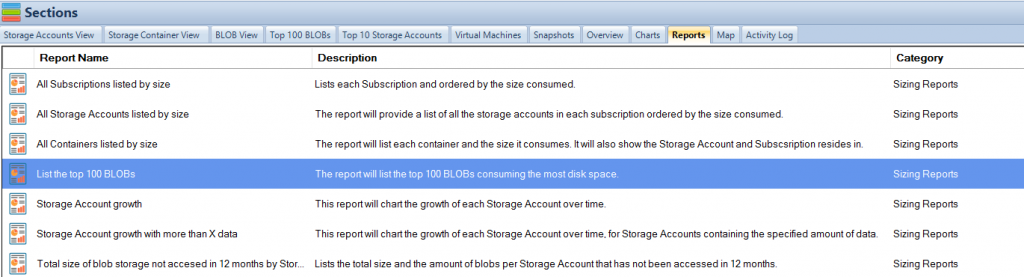

Right-click the report and select “Run Report” to view the data as a table that you can export and open in Microsoft Excel.

The export includes all the relevant information: the Azure Subscription, the Azure Storage Account, the Container the Blob resides in, the name of the Blob itself, the Blob’s access tier, the date it was created, the date it was last modified and, finally, its size.
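If you ever need to produce a similar spreadsheet from your own inventory data, a CSV file is the simplest format Excel can open. The sketch below uses Python’s standard `csv` module; the `COLUMNS` headings and the `write_report` function are hypothetical and only follow the column order described above, not the tool’s actual export format.

```python
import csv
import io
from datetime import datetime

# Hypothetical column headings, in the order described for the export.
COLUMNS = [
    "Subscription", "Storage Account", "Container", "Blob Name",
    "Access Tier", "Created", "Last Modified", "Size (bytes)",
]


def write_report(rows, stream):
    """Write report rows (tuples in COLUMNS order) to stream as CSV."""
    writer = csv.writer(stream)
    writer.writerow(COLUMNS)
    for row in rows:
        # Render datetimes as "YYYY-MM-DD HH:MM:SS", a layout spreadsheets
        # generally recognize as a date and time.
        writer.writerow(
            [v.isoformat(sep=" ") if isinstance(v, datetime) else v for v in row]
        )


if __name__ == "__main__":
    buffer = io.StringIO()
    write_report(
        [("sub-a", "acct1", "backups", "db.bak", "Cool",
          datetime(2024, 1, 2, 3, 4, 5), datetime(2024, 2, 1), 90_000)],
        buffer,
    )
    print(buffer.getvalue())
```

To write a file instead, open it with `open("top_blobs.csv", "w", newline="", encoding="utf-8-sig")`. The `csv` module documentation asks for `newline=""`, and the `utf-8-sig` byte-order mark helps Excel detect UTF-8 when Blob names contain non-ASCII characters.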

| Edition | Maximum Azure Storage | Typical environment | License |
|---|---|---|---|
| Free | 30 TB | Small or personal environments, usually 3 or fewer Azure Subscriptions and under 30 TB of Azure Blob Storage | Free forever (until over 30 TB) |
| Advanced | 1 PB | Medium-sized environments, typically fewer than 5 Azure Subscriptions | 12-month license |
| Enterprise | Unlimited | Large environments, typically more than 10 Subscriptions and more than 1 PB of Azure Blob Storage | 12-month license |

Cloud Storage Manager is licensed based on the amount of Azure Blob Storage it manages across your Subscriptions, Storage Accounts, Containers and Blobs.

Every edition has the same features, including scheduled scans of your Azure Blob Storage and reporting.


FAQs

What is Azure Blob storage?

Azure Blob storage is a cloud-based storage solution offered by Microsoft Azure. It allows users to store and access large amounts of unstructured data, such as text or binary data, through REST-based object storage.
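Because Blob storage is accessed over REST, every Blob has an HTTPS address built from its storage account, container and Blob name. The sketch below assumes the default Azure public-cloud endpoint (`blob.core.windows.net`); sovereign clouds and custom domains use other hosts, and `blob_url` is a hypothetical helper.

```python
from urllib.parse import quote


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Build the REST endpoint URL for a blob in the Azure public cloud.

    '/' is left unescaped because it acts as a virtual-directory separator
    in blob names; other special characters are percent-encoded.
    """
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob_name, safe='/')}"


if __name__ == "__main__":
    print(blob_url("myaccount", "backups", "2024/db backup.bak"))
```

Requests to such an address still need authorization (for example a SAS token or Microsoft Entra ID) unless the container allows anonymous read access.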

Why is it important to find the largest Azure Blobs?

Identifying the largest Azure Blobs is important for optimizing storage costs. By understanding which Blobs are taking up the most storage, users can take steps to delete unnecessary data or move data to a more cost-effective storage tier.

How can Cloud Storage Manager help me find the largest Azure Blobs?

Once Cloud Storage Manager has scanned your environment, its Top 100 BLOBs tab lists the 100 largest Blobs, along with the Subscription, Storage Account, Container, access tier, size and more for each one. You can also export this data to a spreadsheet.

How is Cloud Storage Manager licensed?

Cloud Storage Manager is licensed based on the amount of Azure Blob Storage in your environment. There are three editions: Free (up to 30 TB), Advanced (up to 1 PB) and Enterprise (unlimited). All editions offer scheduled scans of Azure Blob Storage and reporting.

Who can benefit from using Cloud Storage Manager?

Cloud Storage Manager is useful for anyone who uses Azure Blob storage and wants to optimize their storage costs. It can be helpful for both new and experienced users of Azure Blob storage.