by Mark | May 12, 2023 | Azure, Azure Blobs, Cloud Storage

Best Practices and Use Cases

Introduction

Are you storing your data in the cloud? If yes, then you must be aware of the various security challenges that come with it.

One of the biggest concerns in cloud computing is securing data from unauthorized access. However, with Azure Storage Private Endpoints, Microsoft has introduced a solution that can help organizations secure their data in the cloud.

Brief overview of Azure Storage Private Endpoints

So what exactly are Azure Storage Private Endpoints? Simply put, private endpoints provide secure access to a specific service over a virtual network. With private endpoints, you can connect to your Azure Storage account from within your virtual network without needing to expose your data over the public internet.

Azure Storage Private Endpoints allow customers to assign their storage accounts a private IP address from their virtual network’s address space. This helps customers keep sensitive data within their network perimeter and restrict access to only the resources that need it.

Importance of securing data in the cloud

Securing data has always been a top priority for any organization. The rise of cloud computing has only increased this concern, as more and more sensitive information is being stored in the cloud.

A single security breach can cause irreparable damage not only to an organization’s reputation but also financially. With traditional methods of securing information proving inadequate for cloud-based environments, new solutions like Azure Storage Private Endpoints have become essential for businesses seeking comprehensive security measures against cyber threats.

We will explore how Azure Storage Private Endpoints offer organizations much-needed protection when storing sensitive information in the public cloud environment. Now let’s dive deeper into what makes these endpoints so valuable and how they work together with Azure Storage accounts.

What are Azure Storage Private Endpoints?

Azure Storage is one of the most popular cloud storage services. However, the public endpoint of Azure Storage is accessible over the internet. Any user who has the connection string can connect to your storage account.

This makes it difficult to secure your data from unauthorized access. To solve this problem, Microsoft introduced a feature called “Private Endpoints” for Azure Storage.

Private endpoints enable you to securely access your storage account over an Azure Virtual Network (VNet). Essentially, you can now create an endpoint for your storage account that is accessible only within a specific VNet.

Definition and explanation of private endpoints

Private endpoints are a type of network interface that enables secure communication between resources within a VNet and Azure services such as Azure Storage. The endpoint provides a private IP address within the specified subnet in your VNet.
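As a minimal sketch of the "private IP address within the specified subnet" idea, the snippet below checks whether an address belongs to a subnet range using Python's standard ipaddress module. The subnet range and IP addresses are hypothetical values chosen for illustration.

```python
import ipaddress

def endpoint_in_subnet(endpoint_ip: str, subnet_cidr: str) -> bool:
    """Return True if the endpoint's IP belongs to the subnet's address range."""
    return ipaddress.ip_address(endpoint_ip) in ipaddress.ip_network(subnet_cidr)

# Hypothetical values for illustration only.
print(endpoint_in_subnet("10.0.1.5", "10.0.1.0/24"))
print(endpoint_in_subnet("52.1.2.3", "10.0.1.0/24"))
```

A check like this can be handy when reviewing which addresses in a subnet are taken by private endpoints.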

When you create a private endpoint for your storage account, Azure Private Link connects the endpoint’s network interface in your VNet to the storage service. Traffic between clients in the VNet and the storage account then travels over the Microsoft backbone network rather than the public internet.

How they work with Azure Storage

When you create a private endpoint for Azure Storage, requests from resources in the same VNet reach the storage account through the endpoint’s private IP address instead of the public, internet-facing endpoint, provided DNS resolves the account’s name to that private address. Once this is in place, traffic between resources on that VNet and your storage account stays on the Microsoft backbone network.

One benefit of this approach is increased security. If you also disable public network access on the storage account, the service is no longer reachable from the internet, which removes exposure to attacks that target publicly available endpoints, such as attempts to read data from an open container or blob. Clients still need to authenticate, and the surrounding environment still needs to be properly secured.

Additionally, working with Azure Storage accounts using Private Endpoints is incredibly straightforward and transparent. The process is essentially the same as if you’re connecting to the public endpoints, except your traffic stays on your private network entirely.

Benefits of using Azure Storage Private Endpoints

Improved security and compliance

One of the most significant benefits of using Azure Storage Private Endpoints is improved security and compliance. A storage account reached over its public endpoint relies on access keys, shared access signatures, or identity-based authorization to control access, and those credentials can be exposed through phishing or leaked configuration. Private endpoints add a network-level control on top of authentication: clients reach the storage account through a private IP address within a virtual network.

Additionally, private endpoints give you granular control over network traffic, because only clients in, or connected to, the virtual networks that host an endpoint can reach it. This level of control significantly reduces the risk of unauthorized access and supports compliance with industry regulations such as HIPAA or PCI-DSS.

Reduced exposure to public internet

Another major advantage of using Azure Storage Private Endpoints is reduced exposure to the public internet. With traditional storage accounts, data is accessed through a public endpoint that exposes it to potential threats such as DDoS attacks or brute-force attacks on authentication credentials.

By using private endpoints and disabling public network access on the storage account, you can ensure that traffic to your data stays on your virtual network and the Microsoft backbone network and never crosses the public internet. This approach significantly reduces the risks associated with exposing sensitive data to unknown entities on the internet.

Simplified network architecture

Azure Storage Private Endpoints also simplify your organization’s overall network architecture by reducing the need for complex firewall rules or VPN configurations. By allowing you to connect directly from your virtual network, private endpoints provide a more streamlined approach that eliminates many of the complexities associated with traditional networking solutions.

This simplification allows organizations to reduce the overhead of managing their networking infrastructure while adding security controls designed specifically for Azure Storage accounts. Additionally, a private endpoint can be created in a different region from the storage account it connects to, which makes private endpoints a good fit for global organizations looking for an efficient and secure way to access their data.

Setting up Azure Storage Private Endpoints

Step-by-step guide on how to create a private endpoint for Azure Storage account

Setting up Azure Storage Private Endpoints is easy and straightforward. To create a private endpoint, you need to have an Azure subscription and an existing virtual network that the private endpoint will be attached to.

To create a private endpoint for an Azure Storage account, follow these steps:

1. Go to the Azure portal and select your storage account

2. Click on “Private endpoints” under settings

3. Click “Add” to create a new private endpoint

4. Select your virtual network and subnet

5. Choose the storage service you want to connect to (for example, Blob, File, Queue, or Table)

6. Select the storage account you want to connect to

7. Configure private DNS integration so that the storage account’s name resolves to the private endpoint’s IP address

8. Review and click “Create”

Once completed, your private endpoint will be created.
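The choices made in the steps above (virtual network subnet, target storage account, and target service) can also be written down as a template-style resource definition. The sketch below builds such a definition as a Python dictionary, modeled on the ARM resource type Microsoft.Network/privateEndpoints. The helper name and the placeholder IDs are hypothetical, the API version is deliberately left out, and the shape should be checked against the current ARM reference before use.

```python
import json

def private_endpoint_definition(name, location, subnet_id, storage_account_id,
                                sub_resource="blob"):
    """Build an ARM-style resource body for a storage private endpoint (sketch)."""
    return {
        "type": "Microsoft.Network/privateEndpoints",
        "name": name,
        "location": location,
        "properties": {
            # Step 4: the subnet that will host the endpoint's network interface.
            "subnet": {"id": subnet_id},
            "privateLinkServiceConnections": [
                {
                    "name": f"{name}-connection",
                    "properties": {
                        # Step 6: the storage account to connect to.
                        "privateLinkServiceId": storage_account_id,
                        # Step 5: the storage service (sub-resource) to expose.
                        "groupIds": [sub_resource],
                    },
                }
            ],
        },
    }

# Placeholder IDs; substitute real resource IDs from your subscription.
definition = private_endpoint_definition(
    name="pe-storage-blob",
    location="westeurope",
    subnet_id="<subnet-resource-id>",
    storage_account_id="<storage-account-resource-id>",
)
print(json.dumps(definition, indent=2))
```

Keeping the definition in code or a template makes the endpoint reproducible across environments, which the portal steps alone do not give you.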

Configuring virtual network rules and DNS settings

After creating your private endpoint, you need to configure virtual network rules and DNS settings. To configure virtual network rules:

1. Go back to your storage account in the Azure portal

2. Click on “Firewalls and virtual networks” under security + networking

3. Add or edit existing virtual network rules as needed

Virtual network rules allow traffic from specific subnets within your Virtual Network (VNet) to access the storage service, while IP rules allow traffic from specific public IP addresses or ranges. Setting the default action to deny blocks any source that no rule allows.

To configure DNS settings:

1. Navigate back to the Private Endpoint blade in the portal.

2. Find your new storage account endpoint.

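3. Copy its FQDN (fully qualified domain name), which typically takes the form of the storage account name under a privatelink subdomain, such as privatelink.blob.core.windows.net for Blob storage. Clients keep using the storage account’s regular endpoint name; that name is aliased to the privatelink name.

4. Make sure a private DNS zone for the privatelink subdomain is linked to your virtual network and contains an A record that maps the storage account name to the private endpoint’s IP address. These DNS settings allow clients within your Virtual Network to resolve the storage account’s FQDN to the corresponding private IP address.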

Configuring virtual network rules and DNS settings is a crucial part of setting up Azure Storage Private Endpoints. By doing so, you are ensuring that only the necessary traffic can access your storage account privately.
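To make the DNS naming more concrete, here is a small sketch that derives the names involved for a given storage account and service. It assumes the Azure public cloud suffix core.windows.net (other clouds use different suffixes), and the account name is hypothetical.

```python
def storage_dns_names(account: str, service: str = "blob") -> dict:
    """Derive the public and privatelink DNS names for a storage service."""
    public_fqdn = f"{account}.{service}.core.windows.net"
    private_zone = f"privatelink.{service}.core.windows.net"
    return {
        "public_fqdn": public_fqdn,                      # name that clients use
        "private_zone": private_zone,                    # private DNS zone name
        "privatelink_fqdn": f"{account}.{private_zone}", # name that maps to the private IP
    }

# Hypothetical account name for illustration.
for key, value in storage_dns_names("contosodata").items():
    print(f"{key}: {value}")
```

Inside the VNet, the privatelink name should resolve to the endpoint's private IP address; outside it, the same public name resolves to the public endpoint.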

Best practices for managing Azure Storage Private Endpoints

Limiting Access to Only Necessary Resources

When it comes to managing Azure Storage Private Endpoints, the first and most important step is to limit access only to necessary resources. This approach helps reduce the risk of unauthorized access, which can jeopardize the security of your data. As a best practice, you should only grant access permissions to users who need them for their specific tasks.

One effective way to limit access is by using role-based access control (RBAC). RBAC allows you to define roles and assign them specific permissions based on a user’s responsibilities within your organization. With this approach, you can ensure that users have only the permissions they need and nothing more.

Another way to limit access is by implementing network security groups (NSGs) within your virtual network. NSGs are essentially firewall rules that allow or deny traffic based on IP addresses, ports, and protocols, evaluated in priority order. By creating NSG rules for the subnet that hosts your Azure Storage Private Endpoint, you can restrict which sources inside your network are able to reach it.
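The way firewall-style rules are evaluated can be sketched in a few lines. This is a simplified model, not the actual NSG engine: rules are checked in ascending priority order and the first match decides. The Rule fields, addresses, and the deny-by-default fallback are assumptions made for illustration.

```python
import ipaddress
from dataclasses import dataclass

@dataclass
class Rule:
    priority: int      # lower number is evaluated first
    source_cidr: str   # source address range the rule applies to
    port: int          # destination port the rule applies to
    allow: bool        # True = allow, False = deny

def evaluate(rules, source_ip: str, port: int, default_allow: bool = False) -> bool:
    """Return the decision of the first matching rule, else the default."""
    addr = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if addr in ipaddress.ip_network(rule.source_cidr) and port == rule.port:
            return rule.allow
    return default_allow

# Hypothetical rules: allow the app subnet, deny the rest of the VNet.
rules = [
    Rule(priority=200, source_cidr="10.0.0.0/16", port=443, allow=False),
    Rule(priority=100, source_cidr="10.0.2.0/24", port=443, allow=True),
]
print(evaluate(rules, "10.0.2.7", 443))
print(evaluate(rules, "10.0.3.7", 443))
```

Ordering matters here: the narrower allow rule has the lower priority number, so it wins over the broader deny rule.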

Monitoring and Logging Activities

The second best practice for managing Azure Storage Private Endpoints is monitoring and logging activities. Monitoring activities includes collecting metrics about resource usage, analyzing logs for suspicious behavior, and setting up alerts when certain conditions are met.

Azure provides several tools that help monitor activities within your storage account, including Azure Monitor and Log Analytics. These tools allow you to track network traffic patterns, monitor system performance in near real time, and view logs for storage operations such as reads or writes performed against storage accounts.

Logging activities involves storing detailed information about events within the environment being monitored. Logging is essential for identifying potential security breaches or anomalies in system behavior over time that might otherwise go unnoticed.
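As a rough illustration of analyzing logs for suspicious behavior, the sketch below counts authorization failures per caller and flags callers above a threshold. The record fields (caller_ip, status) and the threshold are hypothetical; real storage logs have their own schema.

```python
from collections import Counter

def suspicious_callers(records, threshold: int = 3) -> list:
    """Return caller IPs with at least `threshold` HTTP 403 responses."""
    failures = Counter(r["caller_ip"] for r in records if r["status"] == 403)
    return sorted(ip for ip, count in failures.items() if count >= threshold)

# Hypothetical log records for illustration.
records = [
    {"caller_ip": "203.0.113.9", "status": 403},
    {"caller_ip": "203.0.113.9", "status": 403},
    {"caller_ip": "203.0.113.9", "status": 403},
    {"caller_ip": "10.0.1.4", "status": 403},
    {"caller_ip": "10.0.1.4", "status": 200},
]
print(suspicious_callers(records))
```

In practice the same idea would usually be expressed as an alert rule or a log query rather than a script.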

Regularly Reviewing and Updating Configurations

The third best practice is regularly reviewing and updating configurations, which is crucial for maintaining a secure environment and ensuring compliance with regulations. Regular reviews help ensure that changes made over time do not expose the environment to vulnerabilities or noncompliance.

It’s important to regularly review all configurations related to your storage account and endpoints, including virtual network rules, DNS settings, firewall rules, and permissions. By doing so, you can identify any misconfigurations that may be putting your organization at risk.
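A configuration review can be partly automated. The sketch below inspects a dictionary of storage account settings and reports risky values. The property names are modeled on ARM storage account properties (publicNetworkAccess, networkAcls.defaultAction, allowBlobPublicAccess, minimumTlsVersion) and should be verified against the current reference. Treating a missing value as risky is an assumption of this sketch.

```python
def audit_storage_config(props: dict) -> list:
    """Return a list of findings for risky storage account settings."""
    findings = []
    if props.get("publicNetworkAccess", "Enabled") != "Disabled":
        findings.append("public network access is not disabled")
    if props.get("networkAcls", {}).get("defaultAction", "Allow") != "Deny":
        findings.append("network default action is not Deny")
    if props.get("allowBlobPublicAccess", True):
        findings.append("anonymous blob access is allowed")
    if props.get("minimumTlsVersion") not in ("TLS1_2", "TLS1_3"):
        findings.append("minimum TLS version is below 1.2")
    return findings

# Hypothetical settings for illustration.
settings = {
    "publicNetworkAccess": "Enabled",
    "networkAcls": {"defaultAction": "Deny"},
    "allowBlobPublicAccess": False,
    "minimumTlsVersion": "TLS1_2",
}
print(audit_storage_config(settings))
```

Running a check like this on a schedule helps catch drift between reviews.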

Additionally, it is important to keep up to date with the latest security best practices and changes in regulatory requirements that may affect how you configure Azure Storage Private Endpoints.

In summary, limiting access rights when setting up Azure Storage Private Endpoints and monitoring all activities are key steps in keeping data safe from unauthorized users. Regularly reviewing configurations is also essential for maintaining a secure environment over time. By following these best practices, you can take full advantage of Azure Storage’s powerful capabilities while keeping your data secure in the cloud.

Use Cases for Azure Storage Private Endpoints

Healthcare Industry: Securing Patient Data

The healthcare industry is one of the most heavily regulated industries in the world, with strict guidelines on how patient data can be stored and transmitted. Azure Storage Private Endpoints provide a secure way to store and access this sensitive data.

By creating a private endpoint for their Azure Storage account, healthcare providers can ensure that patient data remains protected from prying eyes. With the use of virtual network rules and DNS settings, healthcare organizations can limit access to only necessary resources, ensuring that patient data is kept confidential.

Additionally, with Microsoft Defender for Cloud (formerly Azure Security Center), healthcare providers can be alerted to any suspicious activity or potential security threats. By monitoring and logging activities related to their Azure Storage Private Endpoint, healthcare providers can quickly identify and respond to any security issues that may arise.

Financial Industry: Protecting Sensitive Financial Information

The financial industry also deals with highly sensitive information such as financial transactions and personally identifiable information (PII). With the use of Azure Storage Private Endpoints, financial institutions can ensure that this data is secure while still being easily accessible by authorized personnel.

By setting up a private endpoint for their Azure Storage account, financial institutions can reduce their exposure to the public internet and limit access only to those who need it. This helps prevent unauthorized access or breaches of sensitive information. Microsoft Defender for Cloud (formerly Azure Security Center) also provides advanced threat protection capabilities that help detect, assess, and remediate potential security threats before they become major issues.

Government Agencies: Ensuring Compliance with Regulations

Government agencies also deal with sensitive information such as classified documents or personally identifiable information (PII). These agencies must comply with strict regulations regarding how this information is stored and accessed. With Azure Storage Private Endpoints, government agencies can ensure compliance with these regulations while still having easy access to their data.

By setting up private endpoints for their Azure Storage accounts, agencies can limit access to only authorized personnel and ensure that data remains secure. Microsoft Defender for Cloud (formerly Azure Security Center) also provides compliance assessments and recommendations based on industry standards such as HIPAA and PCI DSS, helping government agencies stay compliant with regulations.

Conclusion

Azure Storage Private Endpoints provide a secure way to access data stored in the cloud. By limiting public internet exposure and implementing private connectivity within your virtual network, you can reduce the risk of unauthorized access to your data.

Additionally, by using private endpoints, you can improve compliance with industry regulations and simplify network architecture. By following best practices for managing Azure Storage Private Endpoints such as regularly monitoring and reviewing configurations, limiting access to only necessary resources, and logging activities, you can ensure that your data remains secure.

Azure Storage Private Endpoints are especially useful in industries such as healthcare, finance and government where security and compliance are paramount. They enable these industries to securely store their sensitive information in the cloud while ensuring that it is only accessible by authorized personnel.

Overall, Azure Storage Private Endpoints give you a strong foundation for keeping your data secure in the cloud. So go ahead and take advantage of this powerful feature to improve security and compliance for your organization!


by Mark | Apr 20, 2023 | Azure Blobs, Azure Disks, Azure Files, Cloud Storage, Storage Accounts

Understanding Blob Storage and Blob-Hunting

What is Blob Storage?

Blob storage is a cloud-based service offered by various cloud providers, designed to store vast amounts of unstructured data such as images, videos, documents, and other types of files. It is highly scalable, cost-effective, and durable, making it an ideal choice for organizations that need to store and manage large data sets for applications like websites, mobile apps, and data analytics. With the increasing reliance on cloud storage solutions, data security and accessibility have become a significant concern. Organizations must prioritize protecting sensitive data from unauthorized access and potential threats to maintain the integrity and security of their storage accounts.

What is Blob-Hunting?

Blob-hunting refers to the unauthorized access and exploitation of blob storage accounts by cybercriminals. These malicious actors use various techniques, including scanning for public-facing storage accounts, exploiting vulnerabilities, and leveraging weak or compromised credentials, to gain unauthorized access to poorly protected storage accounts. Once they have gained access, they may steal sensitive data, alter files, hold the data for ransom, or use their unauthorized access to launch further attacks on the storage account’s associated services or applications. Given the potential risks and damage associated with blob-hunting, it is crucial to protect your storage account to maintain the security and integrity of your data and ensure the continuity of your operations.

Strategies for Protecting Your Storage Account

Implement Strong Authentication

One of the most effective ways to secure your storage account is by implementing strong authentication mechanisms. This includes using multi-factor authentication (MFA), which requires users to provide two or more pieces of evidence (factors) to prove their identity. These factors may include something they know (password), something they have (security token), or something they are (biometrics). By requiring multiple authentication factors, MFA significantly reduces the risk of unauthorized access due to stolen, weak, or compromised passwords.

Additionally, it is essential to choose strong, unique passwords for your storage account and avoid using the same password for multiple accounts. A strong password should be at least 12 characters long and include upper and lower case letters, numbers, and special symbols. Regularly updating your passwords and ensuring that they remain unique can further enhance the security of your storage account. Consider using a password manager to help you securely manage and store your passwords, ensuring that you can easily generate and use strong, unique passwords for all your accounts without having to memorize them.
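The password guidance above (at least 12 characters, with upper and lower case letters, numbers, and special symbols) can be expressed as a simple check. This is a minimal sketch of those stated rules only; it does not measure real password strength, and treating ASCII punctuation as the set of special symbols is an assumption.

```python
import string

def is_strong_password(password: str) -> bool:
    """Check length and character-class rules from the guidance above."""
    return (
        len(password) >= 12
        and any(c.islower() for c in password)
        and any(c.isupper() for c in password)
        and any(c.isdigit() for c in password)
        and any(c in string.punctuation for c in password)
    )

print(is_strong_password("Tr1cky-Passw0rd!"))
print(is_strong_password("short1A!"))
```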

When it comes to protecting sensitive data in your storage account, it is also important to consider the use of hardware security modules (HSMs) or other secure key management solutions. These technologies can help you securely store and manage cryptographic keys, providing an additional layer of protection against unauthorized access and data breaches.

Limit Access and Assign Appropriate Permissions

Another essential aspect of securing your storage account is limiting access and assigning appropriate permissions to users. This can be achieved through role-based access control (RBAC), which allows you to assign specific permissions to users based on their role in your organization. By using RBAC, you can minimize the risk of unauthorized access by granting users the least privilege necessary to perform their tasks. This means that users only have the access they need to complete their job responsibilities and nothing more.

Regularly reviewing and updating user roles and permissions is essential to ensure they align with their current responsibilities and that no user has excessive access to your storage account. It is also crucial to remove access for users who no longer require it, such as employees who have left the organization or changed roles. Implementing a regular access review process can help you identify and address potential security risks associated with excessive or outdated access permissions.

Furthermore, creating access policies with limited duration and scope can help prevent excessive access to your storage account. When granting temporary access, make sure to set an expiration date to ensure that access is automatically revoked when no longer needed. Additionally, consider implementing network restrictions and firewall rules to limit access to your storage account based on specific IP addresses or ranges. This can help reduce the attack surface and protect your storage account from unauthorized access attempts originating from unknown or untrusted networks.
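A grant with limited duration and scope can be modeled as a time window plus an allowed address range. The sketch below is illustrative only: the grant dictionary, its field names, and the addresses are hypothetical and do not correspond to any specific Azure API.

```python
import ipaddress
from datetime import datetime, timedelta, timezone

def access_permitted(grant: dict, caller_ip: str, now: datetime) -> bool:
    """Allow access only inside the grant's time window and IP range."""
    in_window = grant["starts"] <= now < grant["expires"]
    in_range = ipaddress.ip_address(caller_ip) in ipaddress.ip_network(grant["allowed_cidr"])
    return in_window and in_range

# Hypothetical one-hour grant limited to a documentation address range.
start = datetime(2023, 4, 20, 9, 0, tzinfo=timezone.utc)
grant = {
    "starts": start,
    "expires": start + timedelta(hours=1),
    "allowed_cidr": "203.0.113.0/24",
}
print(access_permitted(grant, "203.0.113.10", start + timedelta(minutes=30)))
print(access_permitted(grant, "203.0.113.10", start + timedelta(hours=2)))
```

Because the expiry is part of the grant itself, access ends without anyone having to remember to revoke it.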

Encrypt Data at Rest and in Transit

Data encryption is a critical aspect of securing your storage account. Ensuring that your data is encrypted both at rest and in transit makes it more difficult for cybercriminals to access and exploit your sensitive information, even if they manage to gain unauthorized access to your storage account.

Data at rest should be encrypted using server-side encryption, which involves encrypting the data before it is stored on the cloud provider’s servers. This can be achieved using encryption keys managed by the cloud provider or your own encryption keys, depending on your organization’s security requirements and compliance obligations. Implementing client-side encryption, where data is encrypted on the client-side before being uploaded to the storage account, can provide an additional layer of protection, especially for highly sensitive data.

Data in transit, on the other hand, should be encrypted using Transport Layer Security (TLS), preferably version 1.2 or later, since the older Secure Sockets Layer (SSL) protocol is deprecated and no longer considered secure. TLS secures the data as it travels between the client and the server over a network connection. Ensuring that all communication between your applications, services, and storage account is encrypted can help protect your data from eavesdropping, man-in-the-middle attacks, and other potential threats associated with data transmission.
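On the client side, one way to enforce encrypted transport is to require a modern TLS version and certificate verification. The sketch below uses Python's standard ssl module; choosing TLS 1.2 as the minimum is an assumption that should follow your own policy.

```python
import ssl

def strict_tls_context() -> ssl.SSLContext:
    """Create a client TLS context that refuses protocol versions below TLS 1.2."""
    context = ssl.create_default_context()  # verifies certificates and hostnames
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context

context = strict_tls_context()
print(context.minimum_version.name)
```

A context like this can be passed to standard library clients, for example as the context argument of urllib.request.urlopen.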

By implementing robust encryption practices, you significantly reduce the risk of unauthorized access to your sensitive data, ensuring that your storage account remains secure and compliant with industry standards and regulations.

Regularly Monitor and Audit Activity

Monitoring and auditing activity in your storage account is essential for detecting and responding to potential security threats. Setting up logging and enabling monitoring tools allows you to track user access, file changes, and other activities within your storage account, providing you with valuable insights into the security and usage of your data.

Regularly reviewing the logs helps you identify any suspicious activity or potential security vulnerabilities, enabling you to take immediate action to mitigate potential risks and maintain a secure storage environment. Additionally, monitoring and auditing activity can also help you optimize your storage account’s performance and cost-effectiveness by identifying unused resources, inefficient data retrieval patterns, and opportunities for data lifecycle management.

Consider integrating your storage account monitoring with a security information and event management (SIEM) system or other centralized logging and monitoring solutions. This can help you correlate events and activities across your entire organization, providing you with a comprehensive view of your security posture and enabling you to detect and respond to potential threats more effectively.

Enable Versioning and Soft Delete

Implementing versioning and soft delete features can help protect your storage account against accidental deletions and modifications, as well as malicious attacks. By enabling versioning, you can maintain multiple versions of your blobs, allowing you to recover previous versions in case of accidental overwrites or deletions. This can be particularly useful for organizations that frequently update their data or collaborate on shared files, ensuring that no critical information is lost due to human error or technical issues.

Soft delete, on the other hand, retains deleted blobs for a specified period, giving you the opportunity to recover them if necessary. This feature can be invaluable in scenarios where data is accidentally deleted or maliciously removed by an attacker, providing you with a safety net to restore your data and maintain the continuity of your operations.

It is important to regularly review and adjust your versioning and soft delete settings to ensure that they align with your organization’s data retention and recovery requirements. This includes setting appropriate retention periods for soft-deleted data and ensuring that versioning is enabled for all critical data sets in your storage account. Additionally, consider implementing a process for regularly reviewing and purging outdated or unnecessary versions and soft-deleted blobs to optimize storage costs and maintain a clean storage environment.
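The interplay of versioning, soft delete, and retention periods can be illustrated with a small in-memory model. This is a conceptual sketch, not how any storage service implements these features; the class and its methods are hypothetical.

```python
from datetime import datetime, timedelta, timezone

class VersionedStore:
    """Toy store that keeps every version and soft-deletes with a retention period."""

    def __init__(self, retention_days: int):
        self.retention = timedelta(days=retention_days)
        self.versions = {}    # name -> list of contents, oldest first
        self.deleted_at = {}  # name -> time of soft deletion

    def put(self, name: str, data: bytes) -> None:
        self.versions.setdefault(name, []).append(data)
        self.deleted_at.pop(name, None)

    def get(self, name: str):
        if name in self.deleted_at or name not in self.versions:
            return None
        return self.versions[name][-1]

    def delete(self, name: str, now: datetime) -> None:
        if name in self.versions:
            self.deleted_at[name] = now  # data is kept, only marked deleted

    def undelete(self, name: str, now: datetime) -> bool:
        deleted = self.deleted_at.get(name)
        if deleted is not None and now - deleted <= self.retention:
            del self.deleted_at[name]
            return True
        return False  # not deleted, or retention period has passed

t0 = datetime(2023, 4, 20, tzinfo=timezone.utc)
store = VersionedStore(retention_days=7)
store.put("report.txt", b"v1")
store.put("report.txt", b"v2")
store.delete("report.txt", t0)
print(store.undelete("report.txt", t0 + timedelta(days=3)), store.get("report.txt"))
```

The retention period is the key setting: a recovery attempt after it has passed fails, which is why it should match your recovery requirements.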

Perform Regular Backups and Disaster Recovery Planning

Having a comprehensive backup strategy and disaster recovery plan in place is essential for protecting your storage account and ensuring the continuity of your operations in case of a security breach, accidental deletion, or other data loss events. Developing a backup strategy involves regularly creating incremental and full backups of your storage account, ensuring that you have multiple copies of your data stored in different locations. This helps you recover your data quickly and effectively in case of an incident, minimizing downtime and potential data loss.
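The difference between a full and an incremental backup comes down to selecting only what changed since the last run. A minimal sketch, assuming a hypothetical manifest that maps each file name to the SHA-256 hash recorded at the previous backup:

```python
import hashlib

def changed_since_last_backup(current: dict, manifest: dict) -> list:
    """Return names whose content is new or differs from the previous backup.

    current:  name -> bytes content as it exists now
    manifest: name -> SHA-256 hex digest recorded by the previous backup
    """
    changed = []
    for name, data in current.items():
        if manifest.get(name) != hashlib.sha256(data).hexdigest():
            changed.append(name)
    return sorted(changed)

# Hypothetical data for illustration.
manifest = {"a.txt": hashlib.sha256(b"alpha").hexdigest()}
current = {"a.txt": b"alpha", "b.txt": b"beta"}
print(changed_since_last_backup(current, manifest))
```

A full backup would copy everything in current; the incremental run copies only the returned names and then updates the manifest.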

Moreover, regularly testing your disaster recovery plan is critical to ensure its effectiveness and make necessary adjustments as needed. This includes simulating data loss scenarios, verifying the integrity of your backups, and reviewing your recovery procedures to ensure that they are up-to-date and aligned with your organization’s current needs and requirements.

In addition to creating and maintaining backups, implementing cross-region replication or geo-redundant storage can further enhance your storage account’s resilience against data loss events. By replicating your data across multiple geographically distributed regions, you can ensure that your storage account remains accessible and functional even in the event of a regional outage or disaster, allowing you to maintain the continuity of your operations and meet your organization’s recovery objectives.

Implementing Security Best Practices

In addition to the specific strategies mentioned above, implementing general security best practices for your storage account can further enhance its security and resilience against potential threats. These best practices may include:

- Regularly updating software and applying security patches to address known vulnerabilities

- Training your team on security awareness and best practices

- Performing vulnerability assessments and penetration testing to identify and address potential security weaknesses

- Implementing a strong security policy and incident response plan to guide your organization’s response to security incidents and minimize potential damage

- Segmenting your network and implementing network security controls, such as firewalls and intrusion detection/prevention systems, to protect your storage account and associated services from potential threats

- Regularly reviewing and updating your storage account configurations and security settings to ensure they align with industry best practices and your organization’s security requirements

- Implementing a data classification and handling policy to ensure that sensitive data is appropriately protected and managed throughout its lifecycle

- Ensuring that all third-party vendors and service providers that have access to your storage account adhere to your organization’s security requirements and best practices.

Conclusion

Protecting your storage account against blob-hunting is crucial for maintaining the security and integrity of your data and ensuring the continuity of your operations. By implementing strong authentication, limiting access, encrypting data, monitoring activity, and following security best practices, you can significantly reduce the risk of unauthorized access and data breaches. Being proactive in securing your storage account and safeguarding your valuable data from potential threats is essential in today’s increasingly interconnected and digital world.

by Mark | Apr 19, 2023 | Azure Blobs, Blob Storage, Cloud Storage, Storage Accounts

Introduction to Append Blobs

Azure Blob Storage is a highly scalable, reliable, and secure cloud storage service offered by Microsoft Azure. It allows you to store a vast amount of unstructured data, such as text or binary data, in the form of objects or blobs. There are three types of blobs: Block Blobs, Page Blobs, and Append Blobs. In this article, we will focus on Append Blobs, their use cases, management, security, performance, and pricing. Let’s dive in!

Use Cases of Append Blobs

Append Blobs are specially designed for the efficient appending of data to existing blobs. They are optimized for fast, efficient write operations and are ideal for situations where data is added sequentially. Some common use cases for Append Blobs include:

Log Storage

Append Blobs are perfect for storing logs as they allow you to append new log entries without having to read or modify the existing data. This capability makes them an ideal choice for storing diagnostic logs, audit logs, or application logs.
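The append-only behavior described here can be illustrated with a small in-memory model: new entries are added at the end, and existing data is never read or rewritten in the process. This is a conceptual sketch only and does not call Azure; the class name is hypothetical.

```python
class AppendOnlyLog:
    """Toy model of an append-only blob: data can only be added at the end."""

    def __init__(self):
        self._blocks = []

    def append(self, entry: bytes) -> int:
        """Add an entry and return the byte offset at which it was written."""
        offset = sum(len(block) for block in self._blocks)
        self._blocks.append(bytes(entry))
        return offset

    def read_all(self) -> bytes:
        return b"".join(self._blocks)

log = AppendOnlyLog()
log.append(b"service started\n")
log.append(b"request handled\n")
print(log.read_all().decode(), end="")
```

There is deliberately no method to modify or remove earlier entries, which is what makes this shape a good fit for audit and diagnostic logs.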

Data Streaming

Real-time data streaming applications, such as IoT devices or telemetry systems, generate continuous streams of data. Append Blobs enable you to collect and store this data efficiently by appending the incoming data to existing blobs without overwriting or locking them.

Big Data Analytics

In big data analytics, you often need to process large volumes of data from various sources. Append Blobs can help store and manage this data efficiently by allowing you to append new data to existing datasets, making it easier to process and analyze.

Creating and Managing Append Blobs

There are several ways to create and manage Append Blobs in Azure. You can use the Azure Portal, Azure Storage Explorer, Azure PowerShell, or tools like AzCopy.

Azure Portal

The Azure Portal provides a graphical interface to create and manage Append Blobs. You can create a new storage account, create a container within that account, and then create an Append Blob within the container. Additionally, you can upload, download, or delete Append Blobs using the Azure Portal.

Azure Storage Explorer

Azure Storage Explorer is a standalone application that allows you to manage your Azure storage resources, including Append Blobs. You can create, upload, download, or delete Append Blobs, and also manage access control and metadata.

Azure PowerShell

Azure PowerShell is a powerful scripting environment that enables you to manage your Azure resources, including Append Blobs, programmatically. You can create, upload, download, or delete Append Blobs, and also manage access control and metadata using PowerShell cmdlets.

Using AzCopy

AzCopy is a command-line utility designed for high-performance uploading, downloading, and copying of data to and from Azure Blob Storage. You can use AzCopy to create, upload, download, or delete Append Blobs efficiently, and it supports advanced features like data transfer resumption and parallel transfers.

Security and Encryption

Securing your Append Blobs is crucial to protect your data from unauthorized access or tampering. Azure provides several security and encryption features to help you safeguard your Append Blobs.

Access Control

To control access to your Append Blobs, you can use Shared Access Signatures, stored access policies, and Azure Active Directory integration. These features allow you to grant granular permissions to your blobs while ensuring that your data remains secure.

Storage Service Encryption

Azure Storage Service Encryption helps protect your data at rest by automatically encrypting your data before storing it in Azure Blob Storage. This encryption helps keep your data secure and supports compliance with various industry standards.

Append Blob Performance

Append Blobs are optimized for fast and efficient write operations. However, understanding how they compare to other blob types and optimizing their performance is essential.

Comparison to Block and Page Blobs

While Append Blobs are optimized for appending data, Block Blobs are designed for handling large files and streaming workloads, and Page Blobs are designed for random read-write operations, like those required by virtual machines.

Optimizing Performance

To optimize the performance of your Append Blobs, you can use techniques like parallel uploads, multi-threading, and buffering. These approaches help reduce latency and increase throughput, ensuring that your data is stored and retrieved quickly.

Pricing and Cost Optimization

Understanding the pricing structure for Append Blobs and implementing cost optimization strategies can help you save money on your Azure Storage.

Azure Blob Storage Pricing

Azure Blob Storage pricing depends on factors like storage capacity, data transfer, and redundancy options. For current figures, refer to the official Azure Storage pricing page.

Cost-effective Tips

To minimize your Azure Blob Storage costs, you can use strategies like tiering your data, implementing lifecycle management policies, and leveraging Azure Reserved Capacity.

Limitations of Append Blobs

While Append Blobs offer several advantages, they also come with some limitations:

- Append Blobs have a maximum size limit of 195 GB, which may be inadequate for some large-scale applications.

- They are not suitable for random read-write operations, as their design primarily supports appending data.

- Append Blobs do not support tiering, so they cannot be transitioned to different access tiers like hot, cool, or archive.
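The 195 GB figure follows from the service's block limits. As a rough check, assuming the long-standing limits of 50,000 blocks per Append Blob and 4 MiB per appended block (newer service versions may allow larger blocks, so verify against current Azure documentation), the arithmetic can be sketched as:

```python
# Assumed Append Blob limits; confirm against the current Azure documentation.
MAX_BLOCKS = 50_000
MAX_BLOCK_BYTES = 4 * 1024 * 1024  # 4 MiB per appended block

max_bytes = MAX_BLOCKS * MAX_BLOCK_BYTES
max_gib = max_bytes / 1024**3

print(f"Maximum Append Blob size: {max_gib:.2f} GiB")  # prints 195.31 GiB
```

Because the cap is on the number of blocks as well as on bytes, a blob written with many tiny appends can hit the 50,000-block limit long before it reaches 195 GB.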

Best Practices for Using Append Blobs

To make the most of Append Blobs in your Azure storage solution, you should adhere to some best practices.

Use Append Blobs for the Right Use Cases

Append Blobs are best suited for scenarios where data needs to be appended frequently, such as logging and telemetry data collection. Ensure that you use Append Blobs for the appropriate workloads, and consider other blob types like Block and Page Blobs when necessary.

Monitor and Manage Append Blob Size

Given that Append Blobs have a maximum size limit of 195 GB, it’s crucial to monitor and manage their size to prevent data loss or performance issues. Regularly check the size of your Append Blobs and consider splitting them into smaller units or archiving older data as needed.
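One way to split data into smaller units is to roll over to a new blob name before the current one gets close to the limit. The following is a minimal sketch of that idea only; the threshold, the naming scheme, and the `RollingBlobName` class are hypothetical, and a real implementation would read the current size from the blob's properties rather than counting locally.

```python
from dataclasses import dataclass

# Hypothetical safety threshold, chosen well below the 195 GB service limit.
ROLLOVER_BYTES = 150 * 1024**3


@dataclass
class RollingBlobName:
    """Tracks bytes written and moves to a new blob name near the threshold."""
    prefix: str
    sequence: int = 0
    bytes_written: int = 0

    def name_for(self, incoming_bytes: int) -> str:
        """Return the blob name that should receive the next append."""
        if self.bytes_written + incoming_bytes > ROLLOVER_BYTES:
            # Start a fresh blob instead of overfilling the current one.
            self.sequence += 1
            self.bytes_written = 0
        self.bytes_written += incoming_bytes
        return f"{self.prefix}-{self.sequence:05d}.log"
```

Zero-padded sequence numbers keep the resulting blobs in write order when they are listed by name.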

Optimize Data Access Patterns

Design your data access patterns to take advantage of the strengths of Append Blobs. Focus on sequential write operations and minimize random read-write actions, which Append Blobs are not optimized for.

Leverage Azure Storage SDKs and Tools

Azure provides various SDKs and tools, like the Azure Storage SDKs, Azure Storage Explorer, and AzCopy, to help you manage and interact with your Append Blobs effectively. Utilize these resources to streamline your workflows and optimize performance.

Integrating Append Blobs with Other Azure Services

Append Blobs can be used in conjunction with other Azure services to build powerful, scalable, and secure cloud applications.

Azure Functions

Azure Functions is a serverless compute service that enables you to run code without managing infrastructure. You can use Azure Functions to process data stored in Append Blobs, such as parsing log files or analyzing telemetry data, and react to events in real-time.

Azure Data Factory

Azure Data Factory is a cloud-based data integration service that allows you to create, schedule, and manage data workflows. You can use Azure Data Factory to orchestrate the movement and transformation of data stored in Append Blobs, facilitating data-driven processes and analytics.

Azure Stream Analytics

Azure Stream Analytics is a real-time data stream processing service that enables you to analyze and process data from various sources, including Append Blobs. You can use Azure Stream Analytics to gain insights from your log and telemetry data in real-time and make data-driven decisions.

Advanced Features and Techniques

To further enhance the capabilities of Append Blobs, you can leverage advanced features and techniques to optimize performance, security, and scalability.

Multi-threading

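Utilizing multi-threading when working with Append Blobs can improve performance. Using multiple threads to read data, or to write to several Append Blobs concurrently, can reduce latency and increase throughput. Keep in mind that every append lands at the end of the blob, so when several writers append to the same blob, the order in which their data arrives is not under your control.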

Parallel Uploads

Parallel uploads are another technique to optimize the performance of Append Blobs. By uploading multiple blocks simultaneously, you can decrease the time it takes to upload data and improve overall efficiency.

Buffering

Buffering is a technique used to optimize read and write operations on Append Blobs. By accumulating data in memory before writing it to the blob or reading it from the blob, you can reduce the number of I/O operations and improve performance.
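The write side of buffering can be sketched as follows. This is an illustrative example, not an official pattern: `BufferedAppender` and the `send` callable are hypothetical, and the 4 MiB default is an assumption based on the long-standing per-append limit.

```python
from typing import Callable, List

# Assumed per-append limit; 4 MiB is the long-standing Append Block maximum.
MAX_APPEND_BYTES = 4 * 1024 * 1024


class BufferedAppender:
    """Collects small records in memory and sends them as larger appends."""

    def __init__(self, send: Callable[[bytes], None], limit: int = MAX_APPEND_BYTES):
        self._send = send  # hypothetical callable that performs one append
        self._limit = limit
        self._chunks: List[bytes] = []
        self._size = 0

    def write(self, record: bytes) -> None:
        if len(record) > self._limit:
            raise ValueError("record is larger than a single append allows")
        # Flush first if this record would push the buffer over the limit.
        if self._size + len(record) > self._limit:
            self.flush()
        self._chunks.append(record)
        self._size += len(record)

    def flush(self) -> None:
        """Send everything buffered so far as one append."""
        if self._chunks:
            self._send(b"".join(self._chunks))
            self._chunks.clear()
            self._size = 0
```

With the `azure-storage-blob` Python package, a bound `BlobClient.append_block` method would be a natural candidate for `send`. Call `flush()` on shutdown so buffered records are not lost, and note that anything still in memory disappears if the process crashes.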

Compression

Compressing data before storing it in Append Blobs can help save storage space and reduce costs. By applying compression algorithms to your data, you can store more information in a smaller space, which can be particularly beneficial for large log files and telemetry data.
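As a small illustration, the sketch below gzips a batch of log lines with Python's standard library before it would be appended. The sample log lines are made up, and the actual savings depend entirely on how repetitive your data is.

```python
import gzip


def compress_batch(lines):
    """Join log lines and gzip them into one self-contained payload."""
    raw = "\n".join(lines).encode("utf-8")
    return raw, gzip.compress(raw)


# Made-up sample data: repetitive log lines compress well.
sample = [
    f"2023-05-12T10:00:{i % 60:02d}Z INFO request served status=200"
    for i in range(1000)
]
raw, packed = compress_batch(sample)
print(f"raw={len(raw)} bytes, compressed={len(packed)} bytes")
```

Each gzip payload is a complete member, and concatenated members still form a valid gzip stream, so batches appended one after another can be decompressed together when the blob is read back.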

Disaster Recovery and Redundancy

Ensuring the availability and durability of your Append Blobs is critical for business continuity and data protection. Azure offers various redundancy options to safeguard your data against disasters and failures.

Locally Redundant Storage (LRS)

Locally Redundant Storage (LRS) replicates your data three times within a single data center in the same region. This option provides protection against hardware failures but does not protect against regional disasters.

Zone-Redundant Storage (ZRS)

Zone-Redundant Storage (ZRS) replicates your data across three availability zones within the same region. This option offers higher durability compared to LRS, as it provides protection against both hardware failures and disasters that affect a single availability zone.

Geo-Redundant Storage (GRS)

Geo-Redundant Storage (GRS) replicates your data to a secondary region, providing protection against regional disasters. With GRS, your data is stored in six copies, three in the primary region and three in the secondary region.

Read-Access Geo-Redundant Storage (RA-GRS)

Read-Access Geo-Redundant Storage (RA-GRS) is similar to GRS but provides read access to your data in the secondary region. This option is useful when you need to maintain read access to your Append Blob data in the event of a regional disaster.
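The trade-offs between these four options can be summarized as a simple decision helper. This is a simplified sketch: the `pick_redundancy` function is hypothetical, the returned strings are the standard-tier storage account SKU names, and real decisions also involve cost and regional availability.

```python
def pick_redundancy(survive_zone_outage: bool,
                    survive_region_outage: bool,
                    read_from_secondary: bool = False) -> str:
    """Map availability requirements to a storage account SKU name."""
    if survive_region_outage:
        # Geo-replication; RA-GRS additionally allows reads from the secondary.
        return "Standard_RAGRS" if read_from_secondary else "Standard_GRS"
    if survive_zone_outage:
        return "Standard_ZRS"
    # Three copies within a single data center.
    return "Standard_LRS"
```

For example, `pick_redundancy(False, True, read_from_secondary=True)` returns `"Standard_RAGRS"`, matching the case where reads must continue during a regional outage.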

Migrating Data to and from Append Blobs

There are several methods for migrating data to and from Append Blobs, depending on your specific requirements and infrastructure.

AzCopy

AzCopy is a command-line utility that enables you to copy data to and from Azure Blob Storage, including Append Blobs. AzCopy supports high-performance, parallel transfers and is ideal for migrating large volumes of data.

Azure Data Factory

As mentioned earlier, Azure Data Factory is a cloud-based data integration service that enables you to create, schedule, and manage data workflows. You can use Azure Data Factory to orchestrate the movement of data to and from Append Blobs.

Azure Storage Explorer

Azure Storage Explorer is a free, standalone tool that provides a graphical interface for managing Azure Storage resources, including Append Blobs. You can use Azure Storage Explorer to easily upload, download, and manage your Append Blob data.

REST API and SDKs

Azure provides a REST API and various SDKs for interacting with Azure Storage resources, including Append Blobs. You can use these APIs and SDKs to build custom applications and scripts to migrate data to and from Append Blobs.

FAQs

What are the primary use cases for Append Blobs?

Append Blobs are designed for scenarios where data needs to be appended to an existing blob, such as logging and telemetry data collection.

How do Append Blobs differ from Block and Page Blobs?

Append Blobs are optimized for appending data, Block Blobs are designed for handling large files and streaming workloads, and Page Blobs are designed for random read-write operations, like those required by virtual machines.

What is the maximum size limit for Append Blobs?

Append Blobs have a maximum size limit of 195 GB.

How can I secure my Append Blobs?

You can secure your Append Blobs using access control features like Shared Access Signatures, stored access policies, and Azure Active Directory integration. Additionally, you can use Azure Storage Service Encryption to encrypt your data at rest.

Can I tier my Append Blobs to different access tiers?

No, Append Blobs do not support tiering and cannot be transitioned to different access tiers like hot, cool, or archive.

What Azure services can be integrated with Append Blobs?

Azure Functions, Azure Data Factory, and Azure Stream Analytics are some of the Azure services that can be integrated with Append Blobs.

What redundancy options are available for Append Blobs?

Azure offers redundancy options such as Locally Redundant Storage (LRS), Zone-Redundant Storage (ZRS), Geo-Redundant Storage (GRS), and Read-Access Geo-Redundant Storage (RA-GRS) for Append Blobs.

What tools and methods can I use to migrate data to and from Append Blobs?

Tools and methods for migrating data to and from Append Blobs include AzCopy, Azure Data Factory, Azure Storage Explorer, Cloud Storage Manager, and the REST API and SDKs provided by Azure.

Can I use compression to reduce the storage space required for Append Blobs?

Yes, compressing data before storing it in Append Blobs can help save storage space and reduce costs. Applying compression algorithms to your data allows you to store more information in a smaller space, which is particularly useful for large log files and telemetry data.

How can I optimize the performance of my Append Blobs?

You can optimize the performance of your Append Blobs by employing techniques such as multi-threading, parallel uploads, buffering, and compression. Additionally, designing your data access patterns to focus on sequential write operations while minimizing random read-write actions can also improve performance.

Conclusion

Append Blobs in Azure Blob Storage offer a powerful and efficient solution for managing log and telemetry data. By understanding their features, limitations, and best practices, you can effectively utilize Append Blobs to optimize your storage infrastructure. Integrating Append Blobs with other Azure services and leveraging advanced features, redundancy options, and migration techniques will enable you to build scalable, secure, and cost-effective cloud applications.

by Mark | Apr 18, 2023 | Azure Blobs, Blob Storage, Cloud Storage, Storage Accounts

Azure Blob storage is a versatile and scalable cloud-based storage solution that allows you to store and manage large amounts of unstructured data. It offers three types of Blobs – Block Blobs, Page Blobs, and Append Blobs – each designed for specific use cases. In this article, we will provide an in-depth exploration of Page Blobs, their features, advantages, use cases, and how you can manage them effectively using Cloud Storage Manager.

What are Page Blobs?

Page Blobs are a type of Azure Blob storage designed to store and manage large, random-access files. They are particularly suited for scenarios where you need to read and write small sections of a file without affecting the entire file. This is in contrast to Block Blobs, which are optimized for streaming large files and storing text or binary data. Page Blobs are organized as a collection of 512-byte pages and can store up to 8 TB of data.
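Because a Page Blob is addressed in 512-byte pages, writes have to start and end on page boundaries. The helper below is a minimal sketch of that alignment arithmetic; the function name is hypothetical and it does not call any Azure API.

```python
from typing import Tuple

PAGE_SIZE = 512  # bytes per page in a Page Blob


def aligned_range(offset: int, length: int) -> Tuple[int, int]:
    """Expand (offset, length) to the smallest enclosing page-aligned range."""
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length must be > 0")
    start = (offset // PAGE_SIZE) * PAGE_SIZE              # round down
    end = -(-(offset + length) // PAGE_SIZE) * PAGE_SIZE   # round up
    return start, end - start


print(aligned_range(700, 100))  # prints (512, 512)
```

To change 100 bytes at offset 700, an application would read the page starting at byte 512, patch it in memory, and write the full 512-byte page back.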

Page Blob Features

Page Blobs offer several unique features, including:

- Random read-write access: Page Blobs provide efficient random read-write access, allowing you to quickly modify specific sections of a file without altering the entire file.

- Snapshots: Page Blobs support snapshot functionality, which enables you to create point-in-time copies of your data for backup or versioning purposes.

- Incremental updates: Page Blobs allow incremental updates, enabling you to modify only the changed portions of a file instead of rewriting the entire file, which can save storage space and improve performance.

- Concurrency control: Page Blobs support optimistic concurrency control through ETags and conditional requests, so a write based on stale data is rejected instead of silently overwriting another client's changes.
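Optimistic concurrency is easiest to see in a small simulation. The sketch below uses an in-memory stand-in rather than the Azure SDK: `InMemoryBlob`, `PreconditionFailed`, and `update_with_retry` are hypothetical names, and the ETag check mimics what a conditional (If-Match) write does on the service side.

```python
import uuid


class PreconditionFailed(Exception):
    """Raised when the supplied ETag no longer matches the stored one."""


class InMemoryBlob:
    """Stand-in for a blob that tracks an ETag; not an Azure SDK class."""

    def __init__(self, data: bytes = b""):
        self.data = data
        self.etag = uuid.uuid4().hex

    def read(self):
        return self.data, self.etag

    def write(self, data: bytes, if_match: str) -> str:
        # Reject the write if someone else changed the blob since it was read.
        if if_match != self.etag:
            raise PreconditionFailed("blob changed since it was read")
        self.data = data
        self.etag = uuid.uuid4().hex
        return self.etag


def update_with_retry(blob, transform, attempts: int = 3) -> bytes:
    """Read, modify, and conditionally write back, retrying on conflict."""
    for _ in range(attempts):
        data, etag = blob.read()
        try:
            blob.write(transform(data), if_match=etag)
            return blob.data
        except PreconditionFailed:
            continue  # another writer got there first; re-read and try again
    raise RuntimeError("gave up after repeated conflicts")
```

The key point is that conflicts are detected, not prevented: the losing writer has to re-read the latest data and apply its change again.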

Advantages of Page Blobs

Some of the key advantages of using Page Blobs include:

- Efficient random access: Page Blobs excel at providing efficient random read-write access, making them suitable for use cases like virtual hard disk (VHD) storage and large databases.

- Scalability: Page Blobs can store up to 8 TB of data, offering a scalable solution for storing and managing large files.

- Data protection: Page Blobs support snapshot functionality, providing a means to create point-in-time backups and versioning for your data.

- Optimized performance: With support for incremental updates, Page Blobs can help improve performance by reducing the need to rewrite entire files when only a small section has changed.

- Concurrency control: Optimistic concurrency control lets multiple clients work on the same blob and detects conflicting writes before they can corrupt data.

Use Cases for Page Blobs

Page Blobs are ideal for the following use cases:

- Virtual Hard Disk (VHD) storage: Page Blobs are commonly used to store VHD files for Azure Virtual Machines (VMs) due to their efficient random read-write access capabilities.

- Large databases: Page Blobs are suitable for storing large databases that require random access and frequent updates to small sections of data.

- Backup and versioning: With snapshot functionality, Page Blobs can be used for backup and versioning purposes in applications that require point-in-time data copies.

- Log files: Page Blobs can be used for storing log files that require frequent updates and random access to specific sections.

Comparing Page Blobs and Block Blobs

While both Page Blobs and Block Blobs are used for storing unstructured data, they have different characteristics and are optimized for different use cases:

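- Size: Page Blobs can store up to 8 TB of data. Block Blobs were historically limited to about 4.75 TB, while current service versions allow roughly 190.7 TiB (50,000 blocks of up to 4,000 MiB each).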

- Access patterns: Page Blobs provide efficient random read-write access, making them ideal for VHD storage and large databases. In contrast, Block Blobs are optimized for streaming large files and are suitable for storing text or binary data, such as documents, images, and videos.

- Updates: Page Blobs support incremental updates, allowing you to modify only the changed pages in place. Block Blobs are updated by uploading the changed blocks and committing a new block list, which suits whole-object replacement better than frequent small in-place edits.

- Pricing: Page Blobs are generally more expensive than Block Blobs due to their additional features and capabilities.

Pricing

Azure Blob storage pricing depends on factors such as data storage, transactions, and data transfer. For premium Page Blobs, billing is based on the provisioned size of the blob rather than the data actually stored, so unused capacity still costs money. Standard Page Blobs are sparse and are generally billed only for the pages that hold data. To optimize your storage costs, consider using Azure Blob Storage Reserved Capacity or implementing Azure Storage Retention Policies.

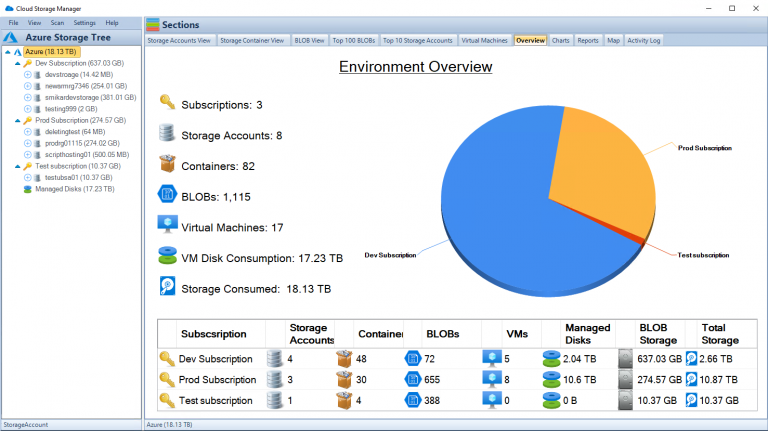

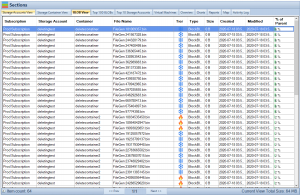

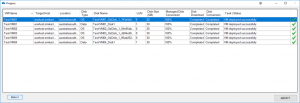

Managing Page Blobs with Cloud Storage Manager

Cloud Storage Manager is a powerful software solution that provides insights into your Azure Blob and File storage consumption. It offers various features to help you manage Page Blobs effectively:

Storage Usage Insights

Cloud Storage Manager provides detailed reports on your storage usage, enabling you to identify trends and optimize your storage consumption.

Growth Trend Reports

With Cloud Storage Manager, you can generate growth trend reports that help you understand how your storage needs are evolving over time. This information can be invaluable for planning and budgeting purposes.

Cost Optimization

Cloud Storage Manager helps you save money on your Azure Storage by providing recommendations on how to optimize your storage usage, including cost-saving tips for Azure Blob Storage.

Securing Page Blobs

Securing your data is critical when using cloud storage services like Azure Blob Storage. To protect your Page Blobs, you should implement the following security best practices:

- Use Azure Active Directory (AD) authentication: Configure Azure AD authentication to control access to your Page Blobs, ensuring that only authorized users and applications can access your data.

- Implement Role-Based Access Control (RBAC): Use RBAC to assign specific permissions to users and groups, limiting their access and actions on your Page Blobs based on their roles and responsibilities.

- Enable encryption: Use Azure Storage Service Encryption (SSE) to encrypt your Page Blobs at rest. This ensures that your data is protected against unauthorized access and disclosure.

- Monitor and audit: Regularly monitor and audit your Page Blob activity using Azure Monitor and Azure Storage Analytics. This helps you identify and respond to potential security threats and maintain compliance with data protection regulations.

Migrating to and from Page Blobs

Migrating data between different types of Blob storage, such as from Block Blobs to Page Blobs or vice versa, requires careful planning and execution. A blob's type is fixed when it is created, so migration means copying the data into a new blob of the target type. You can use Azure Data Factory or the AzCopy command-line utility to transfer data between different Blob storage types.

Using Page Blobs with Azure Premium Storage

Azure Premium Storage is a high-performance storage option designed for virtual machine (VM) workloads that require low latency and high IOPS. Page Blobs stored on Premium Storage can deliver up to 60,000 IOPS and 2,000 MB/s of throughput per disk, making them ideal for hosting VM disks and high-performance databases.

Page Blob Performance Optimization

To optimize the performance of your Page Blobs, consider the following best practices:

- Use Premium Storage: If your workload demands high IOPS and low latency, consider using Page Blobs with Azure Premium Storage.

- Optimize access patterns: Design your application to read and write data in a way that takes advantage of Page Blobs’ efficient random access capabilities.

- Cache frequently accessed data: Use Azure Cache for Redis or Azure Content Delivery Network (CDN) to cache frequently accessed data, reducing latency and improving performance.

- Use multiple storage accounts: Distribute your Page Blobs across multiple storage accounts to increase throughput and avoid hitting the IOPS and bandwidth limits of a single account.
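Distributing blobs across accounts needs a stable rule so that the same blob always maps to the same account. A hash-based assignment is one simple option, sketched below; the account names are hypothetical and the function is illustrative only.

```python
import hashlib

# Hypothetical storage account names.
ACCOUNTS = ["diskstore01", "diskstore02", "diskstore03", "diskstore04"]


def account_for(blob_name: str, accounts=ACCOUNTS) -> str:
    """Pick a storage account deterministically from the blob name."""
    digest = hashlib.sha256(blob_name.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(accounts)
    return accounts[index]
```

A plain modulo mapping like this reshuffles most blobs when the list of accounts changes, so it fits best where the set of accounts is fixed up front.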

Frequently Asked Questions

- What is the maximum size of a Page Blob? Page Blobs can store up to 8 TB of data.

- What is the difference between Page Blobs and Block Blobs? Page Blobs are designed for efficient random read-write access and are suitable for VHD storage and large databases, while Block Blobs are optimized for streaming large files and storing text or binary data such as documents, images, and videos.

- Can I convert a Block Blob to a Page Blob or vice versa? Not in place, because a blob's type is fixed when it is created. You can, however, use tools like Azure Data Factory or AzCopy to copy the data into a new blob of the other type.

- How can I optimize the performance of my Page Blobs? To optimize Page Blob performance, consider using Premium Storage, optimizing access patterns, caching frequently accessed data, and distributing your Page Blobs across multiple storage accounts.

- What are the best practices for securing Page Blobs? To secure your Page Blobs, use Azure Active Directory authentication, implement Role-Based Access Control, enable encryption using Azure Storage Service Encryption, and regularly monitor and audit your Page Blob activity.

- What is the cost of using Page Blobs? Azure Blob storage pricing depends on factors such as data storage, transactions, and data transfer. Premium Page Blobs are billed on the provisioned size of the blob, not the actual data stored, while standard Page Blobs are generally billed only for the pages that hold data.

- How can I manage my Page Blobs effectively? Use a software solution like Cloud Storage Manager to gain insights into your storage usage, generate growth trend reports, and optimize your storage costs.

- What are some common use cases for Page Blobs? Page Blobs are ideal for use cases such as virtual hard disk storage, large databases, backup and versioning, and log file storage.

Conclusion

Page Blobs are a powerful and versatile cloud storage solution that provides efficient random read-write access, making them ideal for storing and managing large files such as virtual hard disks and databases. By understanding the unique features and advantages of Page Blobs, you can make informed decisions about your cloud storage strategy and effectively manage your data using tools like Cloud Storage Manager.

Whether you’re migrating to Page Blobs, optimizing their performance, or securing your data, following best practices will help you get the most out of your Azure Blob Storage investment.

by Mark | Apr 17, 2023 | Azure, Azure Blobs, Blob Storage, Cloud Storage, Storage Accounts

Introduction to Block Blobs

Azure Block Blobs are an essential part of the Microsoft Azure cloud storage platform. They provide a scalable, secure, and cost-effective solution for storing large amounts of unstructured data, such as images, videos, and text files. In this article, we’ll explore the features, benefits, and use cases of Azure Block Blobs, and how our software, Cloud Storage Manager, can help you manage and optimize your Azure Storage consumption.

Understanding Azure Storage Services

Microsoft Azure offers four main storage services:

Blob Storage

Blob Storage is designed for storing unstructured data in a highly scalable and accessible manner. It is suitable for storing large files, such as images, videos, and documents. Azure Block Blobs are a part of this service.

File Storage

File Storage provides fully managed file shares that can be accessed via the SMB protocol. It’s ideal for applications that require a shared file system.

Queue Storage

Queue Storage offers a reliable messaging solution for asynchronous communication between different components of a cloud application.

Table Storage

Table Storage is a NoSQL datastore for storing structured, non-relational data, such as user information or application settings.

Azure Block Blobs: Features and Benefits

Azure Block Blobs come with several advantages:

Scalability and Performance

Block Blob storage can scale to petabytes of data across a storage account, with high throughput and low latency for fast data access.

Security and Data Protection

Azure provides built-in encryption, secure access controls, and data redundancy to ensure data protection and compliance.

Cost-Effectiveness

Azure Block Blob Storage offers flexible pricing tiers to match different performance and access requirements, enabling you to optimize costs based on your needs.

Azure Block Blob Storage Structure

Azure Block Blob Storage has a hierarchical structure:

Accounts, Containers, and Blobs

An Azure Storage Account is the top-level container for all your storage resources. Within a storage account, you can create containers, which are logical groupings of blobs. Each container can hold an unlimited number of blobs.

Blob Types: Block Blobs vs. Append Blobs

Blob Storage offers three blob types: Block Blobs, Append Blobs, and Page Blobs. Block Blobs are optimized for streaming and storing large files, while Append Blobs are also made up of blocks but are designed for scenarios that require frequent additions to existing blobs, such as log files.

Creating and Managing Azure Block Blobs

Using Cloud Storage Manager for Azure Block Blob Management

Our software, Cloud Storage Manager, simplifies the process of creating, managing, and monitoring your Azure Block Blobs. It provides insights into your Azure Blob and File Storage consumption, offers detailed reports on storage usage and growth trends, and helps you save money on your Azure Storage.

Azure Block Blob Use Cases

Azure Block Blobs are versatile and can be used in various scenarios:

Streaming Large Files

Block Blobs are ideal for streaming large files, such as video and audio content, as they support parallel read and write operations, ensuring fast and efficient data access.
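Parallel writes work because a Block Blob is assembled from independently uploaded blocks that are committed as an ordered list. The sketch below shows only the planning step, under the assumption that block IDs must be Base64-encoded strings of equal length within a blob; `plan_blocks` is a hypothetical helper and no upload is performed.

```python
import base64


def plan_blocks(data: bytes, block_size: int):
    """Split data into (block_id, chunk) pairs ready for parallel upload."""
    blocks = []
    for index, start in enumerate(range(0, len(data), block_size)):
        # Zero-padded counters give every ID the same length once encoded.
        block_id = base64.b64encode(f"{index:08d}".encode("ascii")).decode("ascii")
        blocks.append((block_id, data[start:start + block_size]))
    return blocks
```

Each pair could then be uploaded by a separate worker, for example with `BlobClient.stage_block` in the `azure-storage-blob` Python package, followed by a single `commit_block_list` call that lists the IDs in order. Treat that mapping to the SDK as a suggestion to verify against its documentation.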

Data Backup and Archiving

Azure Block Blobs provide a secure and cost-effective solution for storing backups and archival data, with built-in data redundancy and encryption.

Big Data and Analytics

Block Blobs can store large volumes of unstructured data for big data and analytics workloads, enabling you to analyze and process data at scale.

Content Delivery and Web Applications

Azure Block Blobs can be used as a storage backend for web applications, serving images, videos, and other static content directly to end-users. With Azure Content Delivery Network (CDN) integration, you can improve the performance and availability of your content delivery.

Disaster Recovery and Business Continuity

Azure Block Blobs can be used to store critical data, such as backups and application configurations, ensuring that your data is available in the event of a disaster. Azure provides geo-redundant storage options to maintain multiple copies of your data across different regions for added resiliency.

Comparing Azure Block Blobs with Other Azure Storage Services

Azure offers various storage services to cater to different use cases and requirements. Let’s compare Azure Block Blobs with some of these services:

Azure Block Blobs vs. Azure File Storage

While both Azure Block Blobs and Azure File Storage are designed for storing data, they cater to different use cases. Block Blobs are optimized for storing large unstructured data files, whereas File Storage provides a shared file system for applications that require file-based access.

Azure Block Blobs vs. Azure Queue Storage

Azure Queue Storage is a messaging service that enables asynchronous communication between different components of a cloud application. Block Blobs are not designed for messaging; instead, they’re focused on storing and streaming large data files.

Azure Block Blobs vs. Azure Table Storage

Azure Table Storage is a NoSQL datastore for storing structured, non-relational data. It is designed for storing and querying large amounts of structured data, while Block Blobs are optimized for storing unstructured data files.

Pricing and Cost Optimization for Azure Block Blob Storage

Understanding the pricing tiers and optimizing costs is essential when using Azure Block Blob Storage:

Understanding Pricing Tiers

Azure offers different performance and access tiers for Block Blob Storage, such as the Hot, Cool, and Archive tiers. The Hot tier is designed for frequently accessed data, while the Cool and Archive tiers are for infrequently accessed data and offer lower storage costs in exchange for higher access costs.

Data Lifecycle Management

Azure provides automatic data lifecycle management policies that help you transition data between different access tiers based on usage patterns. This enables you to optimize your storage costs by ensuring that data is stored in the most cost-effective tier.
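A lifecycle management policy is a JSON document of rules. The sketch below builds one such rule in Python; the prefix, rule name, and day thresholds are illustrative assumptions, and the field names should be checked against the current Azure lifecycle management documentation before use.

```python
import json


def lifecycle_policy(prefix: str, cool_after_days: int,
                     archive_after_days: int, delete_after_days: int) -> dict:
    """Build a single-rule policy that ages block blobs through the tiers."""
    if not cool_after_days < archive_after_days < delete_after_days:
        raise ValueError("thresholds must increase: cool < archive < delete")
    return {
        "rules": [{
            "enabled": True,
            "name": "age-out-" + prefix.strip("/").replace("/", "-"),
            "type": "Lifecycle",
            "definition": {
                "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
                "actions": {"baseBlob": {
                    "tierToCool": {"daysAfterModificationGreaterThan": cool_after_days},
                    "tierToArchive": {"daysAfterModificationGreaterThan": archive_after_days},
                    "delete": {"daysAfterModificationGreaterThan": delete_after_days},
                }},
            },
        }]
    }


# Hypothetical example: logs go Cool after 30 days, Archive after 90, deleted after 365.
policy = lifecycle_policy("logs/", 30, 90, 365)
print(json.dumps(policy, indent=2))
```

The resulting JSON can be applied to a storage account through the portal, the CLI, or a deployment template.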

Saving Money with Cloud Storage Manager

Our Cloud Storage Manager software helps you monitor and optimize your Azure Storage consumption, enabling you to identify inefficiencies and save money on your Azure Storage.

Azure Blob Storage Cost Estimator

Our Azure Blob Storage Cost Estimator allows users to visualize and understand Azure Blob Storage costs and options. By inputting various storage parameters such as storage type, redundancy, access tier, and data transfer, users can estimate their storage costs and explore cost-saving opportunities.

The Azure Storage costs provided are for illustration purposes and may not be accurate or up-to-date. Azure Storage pricing can change over time, and actual prices may vary depending on factors like region, redundancy options, and other configurations.

To get the most accurate and up-to-date Azure Storage costs, you should refer to the official Azure Storage pricing page: https://azure.microsoft.com/en-us/pricing/details/storage/

Integrating Azure Block Blobs with Other Azure Services

Azure Block Blobs can be integrated with various Azure services to enhance their functionality and enable new scenarios:

Azure Functions

You can use Azure Functions to build serverless applications that automatically process data stored in Block Blobs. For example, you can create a function that automatically generates thumbnails for images uploaded to Block Blob Storage.

Azure Machine Learning

Azure Block Blobs can be used to store large datasets for machine learning and AI workloads. With Azure Machine Learning integration, you can access and process data stored in Block Blobs directly within your machine learning workflows.

Azure Data Factory

Azure Data Factory allows you to create data pipelines that ingest, transform, and move data from various sources to different destinations. You can use Block Blobs as both a source and a destination within your data pipelines.

Best Practices for Working with Azure Block Blobs

To get the most out of your Azure Block Blob Storage, consider the following best practices:

Optimizing Data Transfer

For large-scale data transfers, consider using Azure Import/Export Service, Azure Data Box, or AzCopy to efficiently transfer data to and from Azure Block Blob Storage.

Data Partitioning

Organize your data into multiple containers and blobs based on access patterns and performance requirements. This helps you achieve better performance and scalability.
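One naming technique that is sometimes used for this is to prepend a short hash to blob names, so that sequentially named blobs (for example, date-stamped logs) do not all share the same leading characters. The sketch below is one possible approach, not the only one; the function name and the three-character prefix length are assumptions made for the example.

```python
import hashlib


def partitioned_blob_name(name, prefix_len=3):
    """Prefix a blob name with a short, deterministic hash of the name itself."""
    digest = hashlib.sha256(name.encode("utf-8")).hexdigest()
    return f"{digest[:prefix_len]}-{name}"


for original in ["2023-05-12/app.log", "2023-05-13/app.log"]:
    print(partitioned_blob_name(original))
```

The trade-off is that hashed names are harder to browse and to list by prefix, so this only makes sense when throughput matters more than a human-friendly layout.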

Monitoring and Diagnostics

Enable monitoring and diagnostics for your Azure Storage Account to gain insights into the performance, availability, and usage of your Azure Block Blobs. Use Azure Monitor, Azure Storage Analytics and Cloud Storage Manager to analyze metrics, logs, usage and alerts.

Data Security and Compliance

Use Azure Private Endpoints, firewall rules, and role-based access control to secure access to your Block Blob Storage. Additionally, consider using customer-managed keys for added data encryption control.

Backup and Disaster Recovery

Implement a backup and disaster recovery strategy for your Azure Block Blob data, such as using Azure Backup, creating snapshots, or implementing geo-redundant storage.

Conclusion

Azure Block Blobs offer a scalable, secure, and cost-effective solution for storing large amounts of unstructured data in the cloud. They are suitable for various use cases, from streaming large files to data backup and analytics. With the help of Cloud Storage Manager, you can efficiently manage and optimize your Azure Storage consumption.

FAQs

What are the main differences between Azure Block Blobs and Azure File Storage?

Azure Block Blobs are designed for storing large unstructured data files, while Azure File Storage provides a shared file system for applications that require file-based access.

How can I save money on Azure Block Blob Storage?

You can save money by choosing the right performance and access tier based on your needs, implementing data lifecycle management policies, and using tools like Cloud Storage Manager to monitor and optimize your storage consumption.

How secure is my data stored in Azure Block Blobs?

Azure provides built-in encryption, secure access controls, and data redundancy to ensure data protection and compliance.

What are some common use cases for Azure Block Blobs?

Common use cases include streaming large files, data backup and archiving, big data and analytics, content delivery and web applications, and disaster recovery and business continuity.

How does Cloud Storage Manager help me manage my Azure Block Blobs?

Cloud Storage Manager provides insights into your Azure Blob and File Storage consumption, offers detailed reports on storage usage and growth trends, and helps you save money on your Azure Storage.

by Mark | Apr 17, 2023 | Azure Blobs, Blob Storage, Cloud Storage, Storage Accounts

Introduction to Azure Blob Storage Change Feed

In today’s data-driven world, the ability to monitor and track changes to data is essential for organizations across all industries. Azure Blob Storage Change Feed is a powerful feature that helps you keep tabs on your data by providing a log of all changes made to the blobs within your storage account. This article will guide you through understanding and using Azure Blob Storage Change Feed to effectively manage your data.

The Importance of Data Monitoring

Data monitoring is critical for organizations to maintain data quality, ensure compliance with regulations, and make informed decisions. The ability to track changes in real-time allows for rapid response to potential issues and aids in identifying trends and patterns in data.

Understanding Azure Blob Storage

Azure Blob Storage is a scalable, cost-effective, and secure storage solution offered by Microsoft Azure. It is designed to store and manage large amounts of unstructured data, such as text, images, videos, and log files.

Types of Blob Storage

There are three types of blob storage:

- Block blobs: Optimized for streaming and storing large amounts of data, such as documents, images, and media files.

- Append blobs: Designed for workloads such as log files, where data is only ever added to the end of the blob and existing content cannot be modified.

- Page blobs: Suitable for random read/write operations, such as virtual hard disk (VHD) files used in Azure virtual machines.

What is Change Feed

Change Feed is a feature of Azure Blob Storage that logs all the changes made to the blobs within a storage account. It provides an append-only log of all blob events, allowing you to track modifications and respond accordingly. This feature simplifies data processing and analysis, making it an essential tool for many organizations.
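To give a feel for what these records contain, the sketch below uses a few hand-written sample records and counts them by event type. The field names follow the general shape of change feed records (`eventType`, `eventTime`, `subject`, and a nested `data` object), but the sample values, account name, and blob names are made up for illustration, and real records carry more fields than shown here.

```python
from collections import Counter

# Hand-written sample records; real records contain additional fields.
sample_events = [
    {
        "eventType": "BlobCreated",
        "eventTime": "2023-04-17T10:00:00Z",
        "subject": "/blobServices/default/containers/reports/blobs/q1.csv",
        "data": {"api": "PutBlob", "blobType": "BlockBlob", "contentLength": 1024},
    },
    {
        "eventType": "BlobCreated",
        "eventTime": "2023-04-17T10:05:00Z",
        "subject": "/blobServices/default/containers/reports/blobs/q2.csv",
        "data": {"api": "PutBlob", "blobType": "BlockBlob", "contentLength": 2048},
    },
    {
        "eventType": "BlobDeleted",
        "eventTime": "2023-04-17T11:00:00Z",
        "subject": "/blobServices/default/containers/reports/blobs/q1.csv",
        "data": {"api": "DeleteBlob", "blobType": "BlockBlob"},
    },
]

# Count how many events of each type appear in the sample.
counts = Counter(event["eventType"] for event in sample_events)
for event_type, count in sorted(counts.items()):
    print(event_type, count)
```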

Setting Up Azure Blob Storage Change Feed

Before you can use Change Feed, you need to set up your Azure Blob Storage account and enable the feature.

Creating a Storage Account

- Log in to your Azure portal.

- Click on “Create a resource.”

- Search for “Storage account” and click “Create.”

- Fill in the required fields and click “Review + create.”

- Once the validation is passed, click “Create” to deploy the storage account.

Enabling Change Feed for Blob Storage

After creating a storage account, follow these steps to enable Change Feed:

- Navigate to the storage account in the Azure portal.

- Under “Data management” in the left-hand menu, select “Data protection.”

- In the “Tracking” section, select “Enable blob change feed.”

- Click “Save” to apply the change.

Configuring Change Feed Retention

You can configure the retention period for your Change Feed data, determining how long the logged events are stored in your account. To configure retention, open the “Data protection” settings for the storage account and, under the change feed option in the “Tracking” section, choose either to keep all logs or to delete change feed logs after a set number of days.
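If you prefer scripting to clicking through the portal, the same two settings can be applied with the Azure CLI. The sketch below only assembles the command in Python and prints it, so you can review it before running it yourself; the account name `mystorageacct`, the resource group `my-rg`, and the 30-day retention are placeholder assumptions.

```python
import shlex


def change_feed_cli_command(account, resource_group, retention_days=None):
    """Build the Azure CLI arguments that enable change feed on a storage account."""
    cmd = [
        "az", "storage", "account", "blob-service-properties", "update",
        "--account-name", account,
        "--resource-group", resource_group,
        "--enable-change-feed", "true",
    ]
    # Leaving retention unset keeps the logs indefinitely.
    if retention_days is not None:
        cmd += ["--change-feed-retention-days", str(retention_days)]
    return cmd


cmd = change_feed_cli_command("mystorageacct", "my-rg", retention_days=30)
print(shlex.join(cmd))
```

Building the command as a list rather than a single string makes it safe to pass to `subprocess.run` later without worrying about shell quoting.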

Change Feed Snapshot

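Point-in-time copies of your Change Feed data can be useful for historical analysis and reporting purposes. Change Feed records are stored as blobs in a special container named $blobchangefeed in the storage account, so one way to keep such a copy is to export the records you need to another location. Before relying on a dedicated “Snapshot” option under the change feed settings, check the current Azure documentation, as this option may not be available in your portal.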

Accessing and Processing Change Feed Data

There are several Azure services and tools that can be used to access and process Change Feed data, including Azure Functions, Azure Data Factory, Azure Logic Apps, and Azure Storage Explorer.
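Change Feed data can also be read from your own code. The sketch below assumes the `azure-storage-blob-changefeed` Python package is installed and that you have a connection string for the storage account; the function names and the one-hour default window are choices made for this example. The SDK import sits inside the function so the time-window helper can be used on its own.

```python
from datetime import datetime, timedelta, timezone


def recent_window(hours):
    """Return (start, end) UTC datetimes covering the last `hours` hours."""
    end = datetime.now(timezone.utc)
    return end - timedelta(hours=hours), end


def print_recent_changes(connection_string, hours=1):
    """Print the time, type, and subject of recent change feed events."""
    # Imported here so the helper above works without the SDK installed.
    from azure.storage.blob.changefeed import ChangeFeedClient

    client = ChangeFeedClient.from_connection_string(connection_string)
    start, end = recent_window(hours)
    for event in client.list_changes(start_time=start, end_time=end):
        print(event["eventTime"], event["eventType"], event["subject"])
```

Calling `print_recent_changes("<your connection string>")` would list the events from roughly the last hour. Events take a few minutes to appear in the feed, so very recent changes may not show up straight away.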

Azure Functions Integration

Azure Functions let you create serverless applications that react to blob events or read Change Feed data on a schedule. Two common approaches are Event Grid Triggers, which respond to individual blob events as they are published, and Timer Triggers, which process Change Feed data in batches.

Event Grid Triggers

Event Grid Triggers enable Azure Functions to respond to specific events, such as blob creation or deletion. To set up an Event Grid Trigger, follow these steps:

- Create a new Azure Functions app in the Azure portal.

- Add a new function with an “Event Grid Trigger” template.

- Configure the trigger to listen to the desired blob events.
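Once the trigger is in place, the body of the function usually needs to work out which blob the event refers to. The sketch below shows that logic on a plain dictionary shaped like a blob storage event, so it can be tried without deploying anything; the sample event, container name, and blob name are made up, and in a real function the same fields would come from the trigger's event object.

```python
def parse_blob_subject(subject):
    """Split an event subject into (container, blob_name)."""
    container_marker = "/containers/"
    blob_marker = "/blobs/"
    start = subject.index(container_marker) + len(container_marker)
    split = subject.index(blob_marker, start)
    return subject[start:split], subject[split + len(blob_marker):]


def handle_event(event):
    """Return a short message for blob-created events and None for anything else."""
    if event.get("eventType") != "Microsoft.Storage.BlobCreated":
        return None
    container, blob_name = parse_blob_subject(event["subject"])
    return f"new blob {blob_name} in container {container}"


sample_event = {
    "eventType": "Microsoft.Storage.BlobCreated",
    "subject": "/blobServices/default/containers/images/blobs/cats/tabby.png",
}
print(handle_event(sample_event))  # prints: new blob cats/tabby.png in container images
```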

Timer Triggers

Timer Triggers allow Azure Functions to run on a schedule, making them ideal for processing Change Feed data at regular intervals. To set up a Timer Trigger, follow these steps:

- Create a new Azure Functions app in the Azure portal.

- Add a new function with a “Timer Trigger” template.

- Configure the trigger’s schedule using a CRON expression or a time interval.
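Azure Functions timer schedules use a six-field CRON format (second, minute, hour, day, month, day of week) rather than the five-field format common on Linux. The sketch below builds the kind of binding definition a timer-triggered function uses and rejects expressions with the wrong number of fields; the binding name `mytimer` and the five-minute schedule are illustrative assumptions, and the check only covers the CRON form, not time intervals.

```python
def timer_binding(schedule, name="mytimer"):
    """Return a timer trigger binding definition for a six-field CRON schedule."""
    fields = schedule.split()
    if len(fields) != 6:
        raise ValueError(
            f"expected 6 fields (second minute hour day month day-of-week), got {len(fields)}"
        )
    return {
        "name": name,
        "type": "timerTrigger",
        "direction": "in",
        "schedule": schedule,
    }


# Run at second 0 of every fifth minute.
binding = timer_binding("0 */5 * * * *")
print(binding)
```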

Processing Change Feed Using Azure Data Factory

Azure Data Factory is a cloud-based data integration service that allows you to create, schedule, and manage data pipelines. It can be used to process Change Feed data through Copy Data activities and Mapping Data Flows.

Copy Data Activity

The Copy Data activity enables you to copy Change Feed data from one location to another. To process Change Feed data with a Copy Data activity, follow these steps:

- Create a new Azure Data Factory instance in the Azure portal.

- In the Data Factory authoring UI, create a new pipeline.

- Add a new “Copy Data” activity to the pipeline.

- Configure the source dataset to use the Azure Blob Storage connector and point it at your Change Feed data, which is stored in the $blobchangefeed container of the storage account.

- Configure the destination dataset according to your desired output format and location.

- Publish and trigger the pipeline to start processing the Change Feed data.

Mapping Data Flows

Mapping Data Flows in Azure Data Factory allow you to build complex data transformations using a visual interface. To process Change Feed data with a Mapping Data Flow, follow these steps:

- Create a new Azure Data Factory instance in the Azure portal.

- In the Data Factory authoring UI, create a new pipeline.

- Add a new “Mapping Data Flow” activity to the pipeline.

- Configure the source dataset to use the Azure Blob Storage connector and point it at your Change Feed data, which is stored in the $blobchangefeed container of the storage account.

- Design the data transformation logic using the visual interface, including aggregations, filters, and joins.

- Configure the destination dataset according to your desired output format and location.

- Publish and trigger the pipeline to start processing the Change Feed data.

Utilizing Azure Logic Apps

Azure Logic Apps is a cloud-based service that allows you to create and run workflows that integrate with various services and data sources. You can use Logic Apps to process Change Feed data by setting up a workflow triggered by blob events. To create a Logic App for processing Change Feed data, follow these steps:

- Create a new Azure Logic App instance in the Azure portal.

- In the Logic App Designer, add a new trigger for the desired blob event, such as “When a blob is added or modified.”

- Add actions to process the Change Feed data, such as sending notifications, updating databases, or calling external APIs.

- Save and enable the Logic App to start processing the Change Feed data.

Azure Storage Explorer

Azure Storage Explorer is a standalone application that enables you to manage and monitor your Azure storage resources, including Change Feed data. With Storage Explorer, you can browse and download Change Feed data from the $blobchangefeed container directly to your local machine. Because the Change Feed is a read-only log, use the retention settings rather than manual deletion to control how long records are kept. To use Azure Storage Explorer, download the application from the official website and sign in with your Azure account credentials.

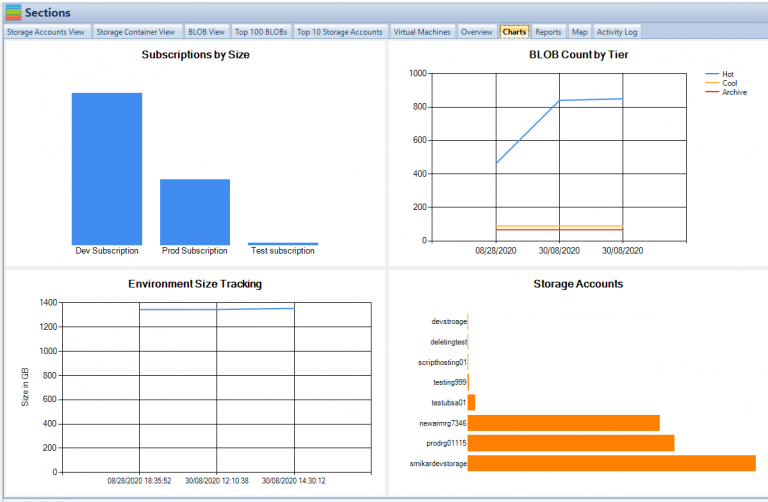

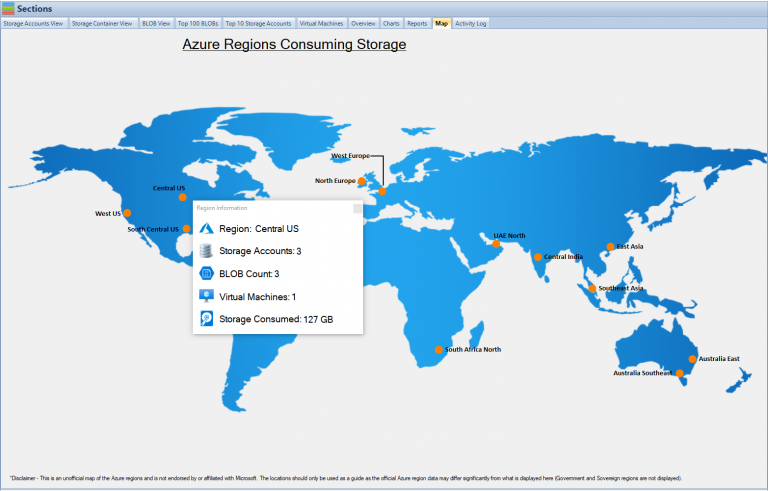

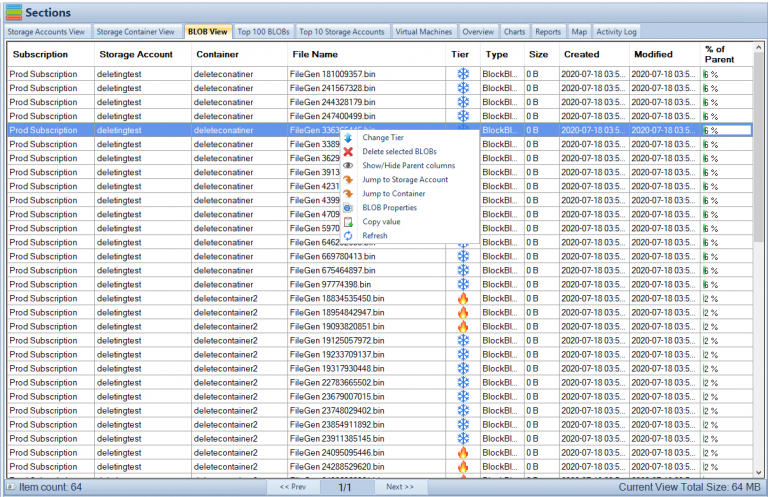

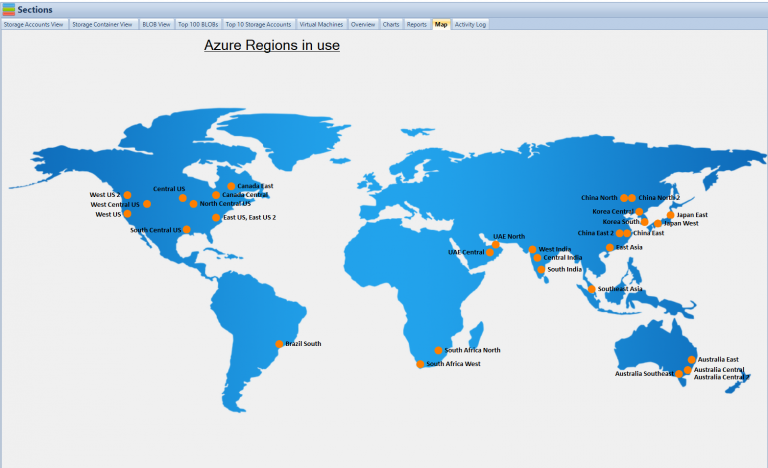

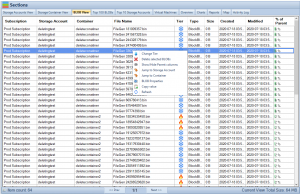

Cloud Storage Manager

Cloud Storage Manager is a tool designed to help organizations manage their Azure Blob and Azure File storage. It provides a map view, tree view, graphs, and reporting capabilities to show storage growth over time and offer insights into storage consumption. Users can search across all Azure Storage Accounts, identify Blobs to move to lower storage tiers to save costs, and perform actions like changing tiering or deleting Blobs within the explorer view. Cloud Storage Manager offers a free version (up to 30TB), an Advanced version (up to 1PB), and an Enterprise version (unlimited storage) based on the size of the organization’s Azure Subscriptions and storage consumption. A free 14-day trial is available.

Real-World Use Cases of Azure Blob Storage Change Feed

Azure Blob Storage Change Feed has numerous practical applications across various industries. Some common use cases include:

Audit and Compliance

Change Feed can be used to maintain a complete audit trail of all changes made to your blob storage. This helps organizations ensure compliance with data protection regulations and internal policies.

Data Processing and Analytics

Change Feed simplifies data processing by providing an organized, chronological log of all blob events. This data can be used for various analytics tasks, such as monitoring data growth, detecting anomalies, and generating insights.

Backup and Disaster Recovery

Because Change Feed records every change shortly after it happens (events typically appear within a few minutes rather than instantly), it can be used to create incremental backups and improve disaster recovery strategies. This allows organizations to minimize data loss and ensure business continuity in the event of an outage or data corruption.

Event Sourcing

Change Feed enables event sourcing patterns by providing a reliable, ordered log of events that can be used to recreate the state of an application or system at any point in time.
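As a rough illustration of the idea, the sketch below replays a handful of hand-written events to work out which blobs existed at a given moment. The event list, blob names, and function names are assumptions for the example, and the timestamp parsing only handles the simple format shown here.

```python
from datetime import datetime


def parse_time(value):
    """Parse an ISO 8601 timestamp that ends in 'Z' (UTC)."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def blobs_at(events, as_of):
    """Replay events in time order and return the set of blobs present at `as_of`."""
    cutoff = parse_time(as_of)
    state = set()
    for event in sorted(events, key=lambda e: parse_time(e["eventTime"])):
        if parse_time(event["eventTime"]) > cutoff:
            break
        if event["eventType"] == "BlobCreated":
            state.add(event["subject"])
        elif event["eventType"] == "BlobDeleted":
            state.discard(event["subject"])
    return state


events = [
    {"eventType": "BlobCreated", "eventTime": "2023-04-17T10:00:00Z", "subject": "docs/a.txt"},
    {"eventType": "BlobCreated", "eventTime": "2023-04-17T10:10:00Z", "subject": "docs/b.txt"},
    {"eventType": "BlobDeleted", "eventTime": "2023-04-17T11:00:00Z", "subject": "docs/a.txt"},
]

print(sorted(blobs_at(events, "2023-04-17T10:30:00Z")))  # prints: ['docs/a.txt', 'docs/b.txt']
print(sorted(blobs_at(events, "2023-04-17T12:00:00Z")))  # prints: ['docs/b.txt']
```

The same replay loop is the basis of an incremental backup: instead of building a set, each created or deleted event would trigger a copy or a delete in the backup location.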

Data Archiving and Migration

Change Feed data can be used to implement data archiving and migration strategies by providing an accurate record of all blob modifications, deletions, and additions, facilitating the transfer of data between storage accounts or locations.

Best Practices for Using Azure Blob Storage Change Feed

To make the most of Azure Blob Storage Change Feed, it’s essential to follow best practices for efficient data processing, monitoring, and security.

Efficient Data Processing

When processing Change Feed data, it’s crucial to use the right Azure services and tools that meet your specific needs. Evaluate the capabilities of Azure Functions, Azure Data Factory, Azure Logic Apps, and Azure Storage Explorer to determine the most suitable solution for your data processing requirements.

Monitoring and Alerting