Microsoft Purview: A Complete Overview

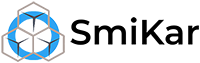

What is Microsoft Purview?

Microsoft Purview is a unified data governance, risk, and compliance solution designed to help organizations manage, protect, and gain insights into their data across on-premises, multi-cloud, and SaaS environments.

It enables businesses to discover, classify, and secure sensitive information while ensuring compliance with regulations such as GDPR, HIPAA, and ISO 27001. By integrating data cataloging, lifecycle management, access controls, and auditing capabilities, Purview provides a comprehensive approach to data protection and regulatory compliance.

With Microsoft Purview, organizations can monitor data usage, prevent data loss, and enforce policies to mitigate security risks. It integrates seamlessly with Microsoft 365, Azure, Power BI, and third-party platforms, offering a centralized way to govern structured and unstructured data. Whether for data discovery, risk management, or compliance reporting, Microsoft Purview empowers businesses to maintain control over their data while supporting innovation and operational efficiency.

Evolution of Microsoft Purview

Previously, Microsoft offered separate tools for data governance and compliance: Azure Purview and the Microsoft 365 compliance portfolio, managed through the Microsoft 365 compliance center. In April 2022, these offerings were rebranded and combined as Microsoft Purview, providing a unified experience for data governance, security, classification, and lifecycle management (Microsoft’s official announcement).

Key Takeaways

| Key Aspect | Summary |
| --- | --- |
| Unified Platform | Combines data governance, compliance, and risk management into a single solution. |
| Data Discovery & Protection | Uses AI-powered classification, labeling, and access control to safeguard sensitive data. |
| Regulatory Compliance | Helps organizations comply with GDPR, HIPAA, CCPA, and other industry regulations. |
| Multi-Cloud Support | Works across Microsoft 365, Azure, AWS, Google Cloud, and on-premises environments. |
| Risk & Threat Management | Detects insider threats, prevents data loss, and enables compliance auditing. |

What Can Microsoft Purview Do?

1. Data Governance & Discovery

- Microsoft Purview Data Map: Provides automated data discovery, classification, and lineage tracking.

- Data Catalog: Allows users to search for and understand data assets across an enterprise.

- Sensitive Data Classification: Automatically detects and labels sensitive data using AI-powered classifiers.
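The following Python sketch makes pattern-based detection concrete. It is loosely modeled on the way a sensitive information type can combine a primary pattern, a checksum, and nearby supporting keywords to assign a confidence level. It is not Purview's classification engine. The regex, the keyword list, the 300-character proximity window, and the `classify` function are all hypothetical.

```python
import re

# Hypothetical, simplified sensitive-data detector (not Purview's engine).
# Primary pattern: 16 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){15}\d\b")
# Supporting keywords that raise confidence when found near a match (assumed list).
KEYWORDS = ("credit card", "card number", "visa", "mastercard")
PROXIMITY = 300  # characters searched on each side of a match (assumed window)


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text: str) -> list[tuple[str, str]]:
    """Return (digits, confidence) for each checksum-valid card-like number."""
    results = []
    lowered = text.lower()
    for match in CARD_PATTERN.finditer(text):
        digits = re.sub(r"\D", "", match.group())
        if not luhn_valid(digits):
            continue  # pattern matched but checksum failed: ignore it
        window = lowered[max(0, match.start() - PROXIMITY): match.end() + PROXIMITY]
        confidence = "high" if any(k in window for k in KEYWORDS) else "medium"
        results.append((digits, confidence))
    return results


print(classify("Visa card number: 4111 1111 1111 1111"))  # [('4111111111111111', 'high')]
print(classify("Order ref 4111 1111 1111 1112"))  # []
```

The checksum step keeps random 16-digit strings, such as order numbers, from being reported. Nearby keywords only change the confidence level; they do not decide whether a match counts.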

2. Data Protection & Compliance

- Information Protection: Applies sensitivity labels, encryption, and access restrictions to sensitive files and emails.

- Data Loss Prevention (DLP): Helps prevent accidental or unauthorized sharing of sensitive data.

- Compliance Manager: Assists organizations in assessing compliance with regulations such as GDPR, HIPAA, and CCPA (Microsoft Purview Compliance Overview).
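Compliance Manager reports progress as a score based on points earned for completed improvement actions. The Python sketch below is a rough illustration of that idea and computes a percentage score. The `ImprovementAction` class, the action names, and the point values are hypothetical and do not reflect Microsoft's actual scoring weights.

```python
from dataclasses import dataclass


@dataclass
class ImprovementAction:
    name: str
    points: int        # hypothetical point value for completing the action
    implemented: bool  # whether the control is currently in place


def compliance_score(actions: list[ImprovementAction]) -> float:
    """Share of available points earned, as a percentage rounded to one decimal."""
    total = sum(a.points for a in actions)
    if total == 0:
        return 0.0
    earned = sum(a.points for a in actions if a.implemented)
    return round(100 * earned / total, 1)


actions = [
    ImprovementAction("Require multifactor authentication", 27, True),
    ImprovementAction("Create a DLP policy for financial data", 9, False),
    ImprovementAction("Retain audit logs for one year", 3, True),
]
print(compliance_score(actions))  # 76.9
```

Weighting actions by points means that completing a few high-impact controls moves the score more than completing many minor ones.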

3. Risk Management & Insider Threat Protection

- Microsoft Purview Insider Risk Management: Detects and helps mitigate risky activity by users inside an organization, such as data theft by departing employees.

- Communication Compliance: Monitors internal communications for policy violations and potential risks.

- eDiscovery & Audit: Enables legal teams to identify, hold, and review data for investigations and compliance audits.

4. Integration with Multi-Cloud & On-Premises Environments

- Microsoft 365 & Azure Integration: Provides seamless governance across Microsoft cloud services.

- Support for AWS, Google Cloud, and On-Premises Data Sources: Helps organizations manage data across multiple environments (Microsoft Purview Documentation).

Why Organizations Should Use Microsoft Purview

1. Unified Data Governance

Microsoft Purview consolidates governance, security, and compliance into a single platform, reducing the need for multiple standalone tools.

2. Automated Compliance & Risk Assessment

Organizations can streamline compliance processes and reduce regulatory risks through automated compliance tracking and AI-powered risk detection.

3. Enhanced Security & Protection

With built-in encryption, access control, and insider risk management, businesses can minimize data leaks and cyber threats.

4. Improved Data Visibility & Discovery

By using Purview Data Map and Data Catalog, organizations gain clearer insight into their data landscape, which supports better decision-making and compliance reporting.
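Lineage is one kind of visibility a data map provides: it records which assets each asset was derived from. The minimal Python sketch below treats lineage as a directed graph and walks it breadth-first to list everything a report depends on. The asset identifiers and the `upstream` function are hypothetical and do not follow Purview's qualified-name format or API.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets it is derived from.
LINEAGE = {
    "powerbi://sales_dashboard": ["sql://warehouse.sales_summary"],
    "sql://warehouse.sales_summary": ["adls://raw/orders.csv", "sql://crm.customers"],
    "adls://raw/orders.csv": [],
    "sql://crm.customers": [],
}


def upstream(asset: str, graph: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk returning every asset the given asset depends on."""
    seen: set[str] = set()
    order: list[str] = []
    queue = deque(graph.get(asset, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue  # an asset can feed several others; report it once
        seen.add(current)
        order.append(current)
        queue.extend(graph.get(current, []))
    return order


print(upstream("powerbi://sales_dashboard", LINEAGE))
# ['sql://warehouse.sales_summary', 'adls://raw/orders.csv', 'sql://crm.customers']
```

A walk like this answers a typical compliance question, "which raw sources feed this report?". The same traversal over reversed edges would show which reports are affected if a source changes.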

How to Get Started with Microsoft Purview

Step 1: Assess Your Organization’s Needs

Before deploying Purview, evaluate your data governance, compliance, and risk management requirements.

Step 2: Enable Microsoft Purview in Your Environment

- Microsoft Purview is available through the Microsoft Purview portal (the successor to the Microsoft 365 compliance center) and the Azure portal.

- IT administrators can configure Purview’s governance policies based on organizational needs.

Step 3: Classify and Label Data

Use Microsoft’s built-in sensitivity labels to classify and protect your most valuable data assets.
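Sensitivity labels are arranged in a priority order, with more sensitive labels ranked higher. The Python sketch below shows one simple way to turn detection results into a single label: pick the highest-ranked label triggered by any detected data type. The label names, the type-to-label mapping, and `choose_label` are hypothetical examples, not Purview's auto-labeling logic.

```python
# Hypothetical label taxonomy, ordered from least to most sensitive.
LABELS = ["Public", "General", "Confidential", "Highly Confidential"]

# Hypothetical mapping from detected data types to a recommended label.
RULES = {
    "Email Address": "General",
    "Credit Card Number": "Confidential",
    "U.S. Social Security Number (SSN)": "Highly Confidential",
}


def choose_label(detected_types: set[str], default: str = "General") -> str:
    """Pick the most sensitive label triggered by any detected type."""
    candidates = [RULES[t] for t in detected_types if t in RULES]
    if not candidates:
        return default
    # A label's position in LABELS acts as its priority; the highest wins.
    return max(candidates, key=LABELS.index)


print(choose_label({"Email Address", "Credit Card Number"}))  # Confidential
print(choose_label(set()))  # General
```

Choosing the highest-priority match keeps a document that contains several kinds of sensitive data from being under-labeled.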

Step 4: Implement Data Protection & Compliance Policies

Leverage Data Loss Prevention (DLP), Insider Risk Management, and Compliance Manager to automate policy enforcement and monitoring.
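A DLP rule generally pairs conditions, such as how many instances of a sensitive data type an item contains and whether it is shared outside the organization, with an action. The minimal Python sketch below evaluates rules of that shape. The `Item` and `DlpRule` classes, the thresholds, and the "block" and "notify" action names are hypothetical, not Purview's policy schema.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    sensitive_counts: dict[str, int]  # detected type -> number of instances
    shared_externally: bool


@dataclass
class DlpRule:
    info_type: str
    min_count: int
    action: str  # hypothetical actions: "block" or "notify"


def evaluate(item: Item, rules: list[DlpRule]) -> str:
    """Return the action of the first matching rule, or "allow"."""
    if not item.shared_externally:
        return "allow"  # these example rules only cover external sharing
    for rule in rules:
        if item.sensitive_counts.get(rule.info_type, 0) >= rule.min_count:
            return rule.action
    return "allow"


# The stricter high-volume rule comes first so that it takes precedence.
rules = [
    DlpRule("Credit Card Number", 10, "block"),
    DlpRule("Credit Card Number", 1, "notify"),
]
print(evaluate(Item("export.xlsx", {"Credit Card Number": 12}, True), rules))   # block
print(evaluate(Item("invoice.pdf", {"Credit Card Number": 1}, True), rules))    # notify
print(evaluate(Item("export.xlsx", {"Credit Card Number": 12}, False), rules))  # allow
```

Splitting one data type into a low-volume rule and a high-volume rule lets a policy warn on occasional sharing while blocking bulk exposure.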

Step 5: Monitor & Optimize Governance Strategies

Regularly review compliance dashboards and audit logs to identify gaps and improve governance policies.
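Reviewing audit logs often starts with simple aggregation. The Python sketch below works on a small in-memory list of simplified audit records. It counts the most frequent operations and flags users who performed one operation unusually often. The `UserId` and `Operation` field names, the sample records, and the threshold are assumptions for illustration, not the exact schema of a Purview audit export.

```python
from collections import Counter

# Hypothetical, simplified audit records; real exports carry many more fields.
sample_records = [
    {"UserId": "alice@contoso.com", "Operation": "FileDownloaded"},
    {"UserId": "alice@contoso.com", "Operation": "FileDownloaded"},
    {"UserId": "alice@contoso.com", "Operation": "FileDownloaded"},
    {"UserId": "bob@contoso.com", "Operation": "FileAccessed"},
    {"UserId": "bob@contoso.com", "Operation": "FileDownloaded"},
]


def top_operations(records: list[dict[str, str]], n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequent operations with their counts."""
    return Counter(r["Operation"] for r in records).most_common(n)


def flag_users(records: list[dict[str, str]], operation: str, threshold: int) -> list[str]:
    """Return users (sorted) who performed `operation` at least `threshold` times."""
    counts = Counter(r["UserId"] for r in records if r["Operation"] == operation)
    return sorted(user for user, count in counts.items() if count >= threshold)


print(top_operations(sample_records))  # [('FileDownloaded', 4), ('FileAccessed', 1)]
print(flag_users(sample_records, "FileDownloaded", 3))  # ['alice@contoso.com']
```

In practice the threshold would be tuned to normal activity levels, and flagged users would be reviewed rather than treated as confirmed incidents.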

Is Microsoft Purview Worth It?

For organizations seeking a centralized, AI-powered compliance and data governance solution, Microsoft Purview is one of the most comprehensive and scalable platforms available today. Whether you need to protect sensitive data, ensure regulatory compliance, or manage insider risks, Microsoft Purview provides the tools necessary to meet these challenges effectively.

By implementing Microsoft Purview, businesses can enhance data security, simplify governance, and reduce compliance risks, all within a unified and intelligent platform.

Frequently Asked Questions about Microsoft Purview

1. What is Microsoft Purview used for?

Microsoft Purview is used for data governance, compliance, and risk management across multi-cloud, SaaS, and on-premises environments.

2. How does Microsoft Purview help with compliance?

It provides automated compliance assessments, built-in regulatory templates, and tools like Data Loss Prevention (DLP) and Compliance Manager.

3. Can Microsoft Purview work with non-Microsoft platforms?

Yes, it supports data management across AWS, Google Cloud, and on-premises environments.

4. Does Microsoft Purview protect against insider threats?

Yes, it has Insider Risk Management features that detect and mitigate internal security threats.

5. Is Microsoft Purview included in Microsoft 365?

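Some Purview features are included in Microsoft 365 E3 and E5 plans, with the broadest coverage in E5. Others require add-on licenses or, for data governance capabilities such as the Data Map, separate Azure billing.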

6. What industries benefit the most from Microsoft Purview?

Industries like finance, healthcare, and government benefit significantly due to strict regulatory requirements.

7. Can Microsoft Purview automate data classification?

Yes, it uses AI-driven classifiers to automatically detect and label sensitive data.

8. What’s the difference between Microsoft Purview and Azure Purview?

Azure Purview was rebranded and integrated into Microsoft Purview, expanding its capabilities to include compliance and risk management.

9. How does Purview integrate with Microsoft 365?

It integrates natively, allowing organizations to apply governance policies to SharePoint, OneDrive, Exchange, and Teams.

10. Where can I learn more about Microsoft Purview?

You can refer to Microsoft’s official documentation for detailed insights.